A Cooperative Q-learning Approach for Real-time Power Allocation in Femtocell Networks

Paper and Code

Mar 12, 2013

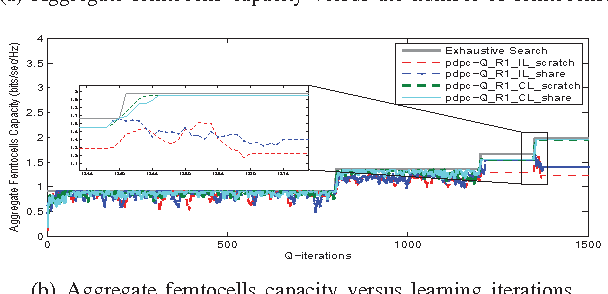

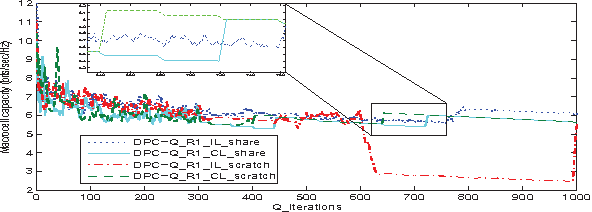

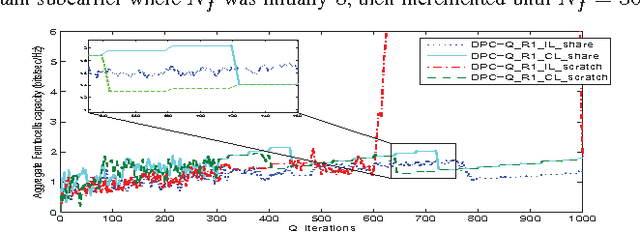

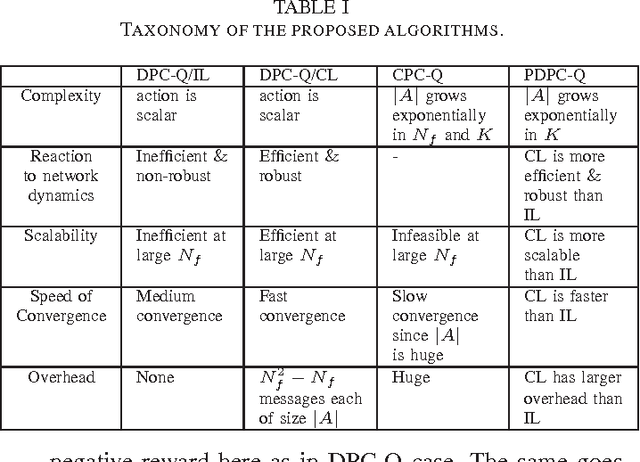

In this paper, we address the problem of distributed interference management for cognitive femtocells that share the same frequency range with macrocells (the primary users), using distributed multi-agent Q-learning. We formulate and solve three problems representing three different Q-learning algorithms: centralized, distributed, and partially distributed power control using Q-learning (CPC-Q, DPC-Q, and PDPC-Q). CPC-Q, although not of practical interest, characterizes the global optimum. DPC-Q and PDPC-Q each work in two different learning paradigms: Independent Learning (IL) and Cooperative Learning (CL). The former is considered the simplest way of applying Q-learning in multi-agent scenarios, since all the femtocells learn independently. The latter is the proposed scheme, in which femtocells share partial information during the learning process in order to strike a balance between practical relevance and performance. In terms of performance, the simulation results show that the CL paradigm outperforms the IL paradigm and achieves an aggregate femtocell capacity that is very close to the optimal one. In terms of practical relevance, we evaluate the robustness and scalability of DPC-Q in real time by deploying new femtocells in the system during the learning process. We show that DPC-Q in the CL paradigm scales to a large number of femtocells and is more robust to network dynamics than in the IL paradigm.
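The difference between the two learning paradigms can be illustrated with a minimal tabular sketch. The update rule below is the standard Q-learning update; everything else is an assumption made for illustration, since the abstract does not specify the state space, the power levels, the reward, or what "partial information" the femtocells exchange. Here each agent is assumed to share only the Q-table row for the current state, and the cooperative agent is assumed to pick the power level that maximizes the sum of its own and the shared rows. The function names are hypothetical.

```python
def q_update(q, state, action, reward, next_state, alpha=0.5, gamma=0.9):
    """Standard tabular Q-learning update, applied in place to q[state][action]."""
    best_next = max(q[next_state])
    q[state][action] += alpha * (reward + gamma * best_next - q[state][action])
    return q[state][action]


def greedy_independent(own_q, state):
    """IL: the femtocell consults only its own Q-table row."""
    row = own_q[state]
    return max(range(len(row)), key=row.__getitem__)


def greedy_cooperative(own_q, shared_rows, state):
    """CL (assumed form): add the rows other femtocells shared for this
    state to the agent's own row, then pick the best power-level index."""
    totals = list(own_q[state])
    for row in shared_rows:
        for a, value in enumerate(row):
            totals[a] += value
    return max(range(len(totals)), key=totals.__getitem__)


# Toy example: one state, three candidate power levels (indices 0..2).
own = [[0.2, 0.5, 0.1]]
neighbour_row = [0.9, 0.0, 0.0]  # hypothetical row shared by another femtocell
il_choice = greedy_independent(own, 0)
cl_choice = greedy_cooperative(own, [neighbour_row], 0)
```

In this toy case the independent agent picks the power level that looks best in its own table, while the cooperative agent changes its choice once a neighbour's estimate is taken into account. Sharing a single row per step, rather than whole tables, is one way to keep the exchanged information small.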
