A Comprehensive Survey on Joint Resource Allocation Strategies in Federated Edge Learning

Oct 10, 2024

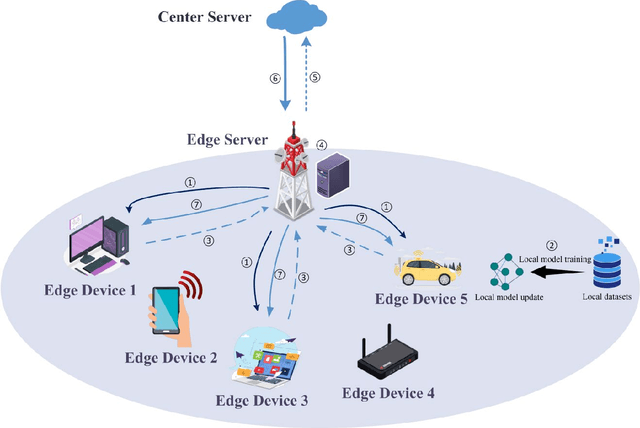

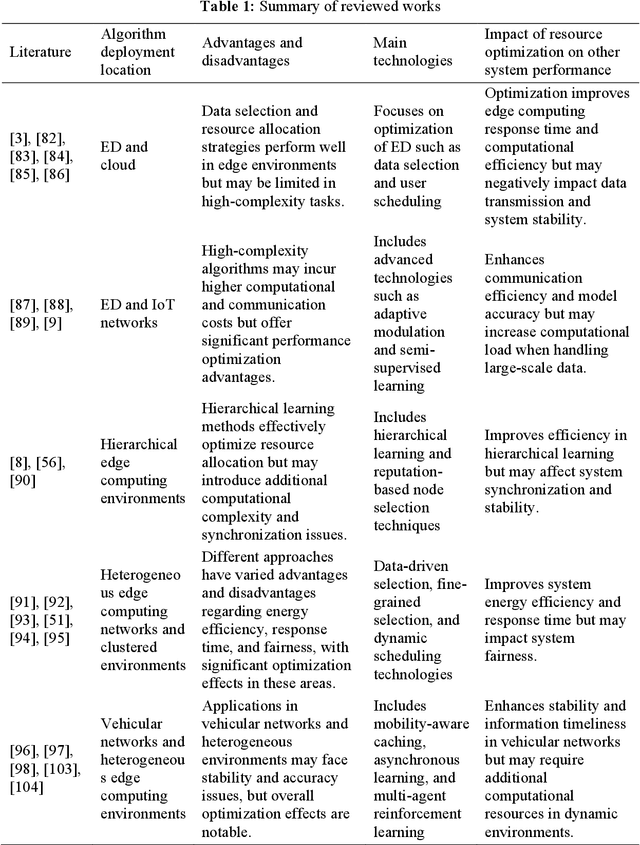

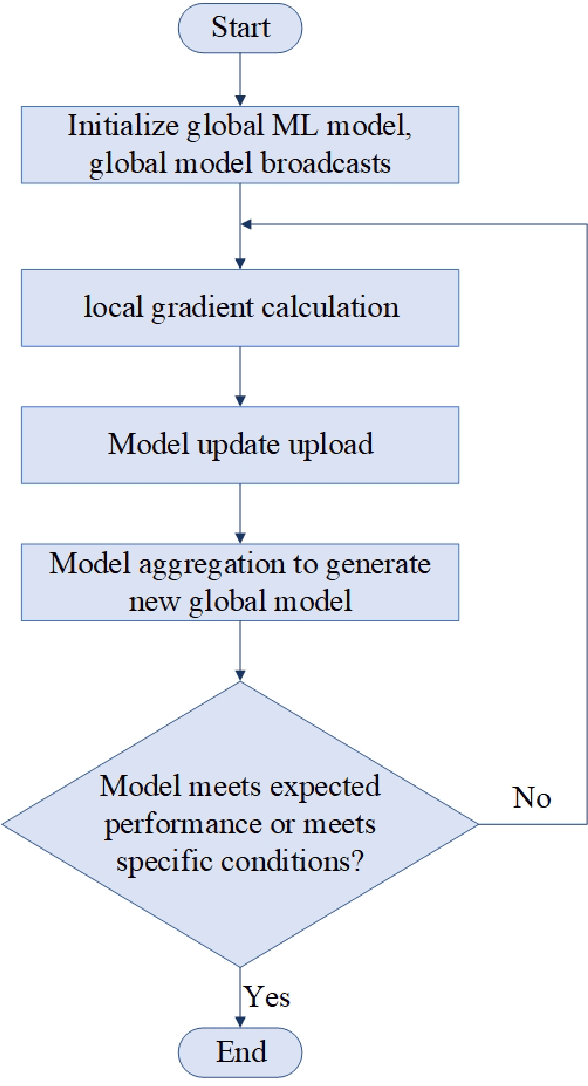

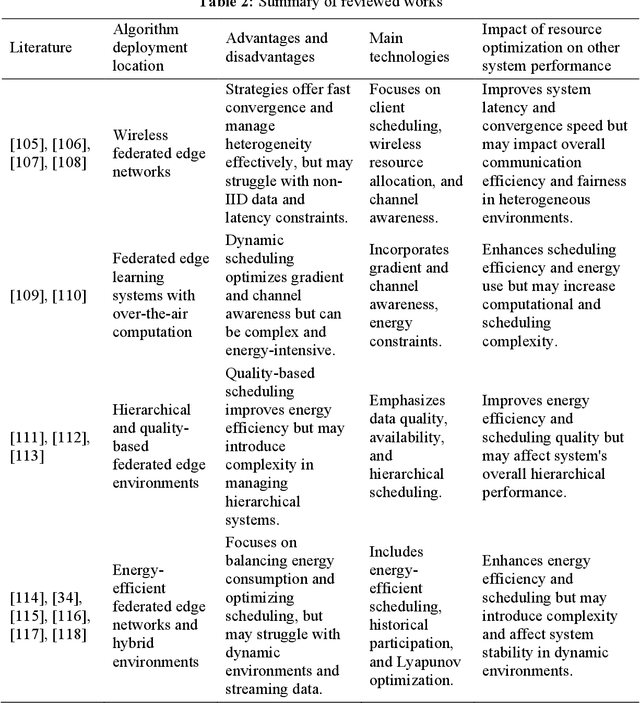

Federated Edge Learning (FEL), an emerging distributed Machine Learning (ML) paradigm, enables model training in a distributed environment while protecting user privacy by keeping each user's data physically separate. However, with the rise of complex application scenarios such as the Internet of Things (IoT) and Smart Earth, conventional resource allocation schemes can no longer effectively support the growing computational and communication demands. Joint resource optimization may therefore be the key to this scaling problem. This paper addresses the challenges of computation and communication together, in light of growing demands on multiple resources. We systematically review joint allocation strategies for different resources (computation, data, communication, and network topology) in FEL, and summarize their advantages in improving system efficiency, reducing latency, and enhancing resource utilization and robustness. In addition, we discuss the potential of joint optimization to indirectly enhance privacy preservation by reducing communication requirements. This work provides theoretical support for resource management in federated learning (FL) systems, as well as ideas for potential optimal deployment in multiple real-world scenarios. By thoroughly discussing current challenges and future research directions, it also offers insights into multi-resource optimization in complex application environments.