A coarse space acceleration of deep-DDM


Dec 07, 2021

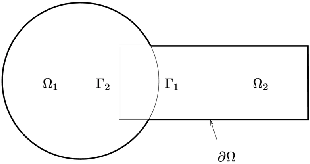

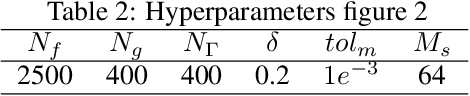

The use of deep learning methods for solving PDEs is a rapidly expanding field. In particular, Physics-Informed Neural Networks (PINNs), which sample the physical domain and use a loss function that penalizes violation of the partial differential equation, have shown great potential. Yet, to address the large-scale problems encountered in real applications and to compete with existing numerical methods for PDEs, it is important to design parallel algorithms with good scalability properties. In the vein of traditional domain decomposition methods (DDM), we consider the recently proposed deep-DDM approach. We present an extension of this method that relies on a coarse space correction, similar to what is done in traditional DDM solvers. Our investigations show that the coarse correction alleviates the deterioration of the solver's convergence as the number of subdomains increases, thanks to an instantaneous exchange of information between subdomains at each iteration. Experimental results demonstrate that our approach substantially accelerates the original deep-DDM method at a reduced additional computational cost.
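To make the PINN-style loss mentioned in the abstract concrete, here is a minimal sketch for the 1D Poisson problem -u'' = f on (0, 1) with u(0) = u(1) = 0. It is an illustration under stated assumptions, not the paper's implementation: the test problem, the function name `pinn_style_loss`, and the sample sizes are all hypothetical, a fixed candidate function stands in for a neural network, and central finite differences stand in for the automatic differentiation a real PINN would use.

```python
import numpy as np

def pinn_style_loss(u, f, n_interior=200, h=1e-3, seed=0):
    """Mean squared PDE residual at random collocation points plus a boundary penalty.

    Assumption: u and f are vectorized callables on NumPy arrays.
    """
    rng = np.random.default_rng(seed)
    # Sample collocation points inside the domain, away from the boundary by h.
    x = rng.uniform(h, 1.0 - h, size=n_interior)
    # Approximate u'' by central finite differences (a stand-in for autodiff).
    u_xx = (u(x + h) - 2.0 * u(x) + u(x - h)) / h**2
    # Residual of the PDE -u'' = f at the sampled points.
    residual = -u_xx - f(x)
    # Penalize violation of the homogeneous Dirichlet boundary conditions.
    boundary = u(np.array([0.0, 1.0]))
    return np.mean(residual**2) + np.mean(boundary**2)

# Hypothetical test problem: f is chosen so that sin(pi x) is the exact solution.
f = lambda x: np.pi**2 * np.sin(np.pi * x)
exact = lambda x: np.sin(np.pi * x)
wrong = lambda x: x * (1.0 - x)  # satisfies the boundary conditions, not the PDE

loss_exact = pinn_style_loss(exact, f)
loss_wrong = pinn_style_loss(wrong, f)
print("loss for the exact solution:", loss_exact)
print("loss for a wrong candidate: ", loss_wrong)
```

The loss is close to zero for the exact solution and clearly larger for the candidate that violates the equation. In a PINN, the candidate would be a network whose parameters are trained to minimize this quantity; in a domain decomposition setting, one such loss would be defined per subdomain, with additional terms for the interface conditions.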
