6-DoF Grasp Planning using Fast 3D Reconstruction and Grasp Quality CNN


Sep 18, 2020

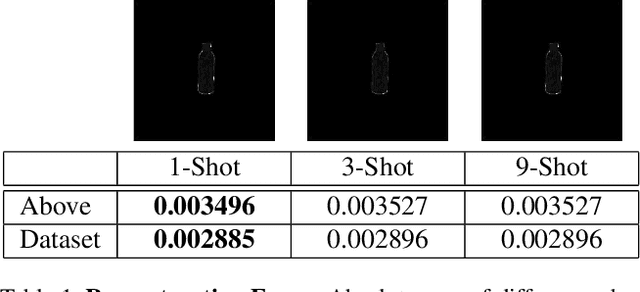

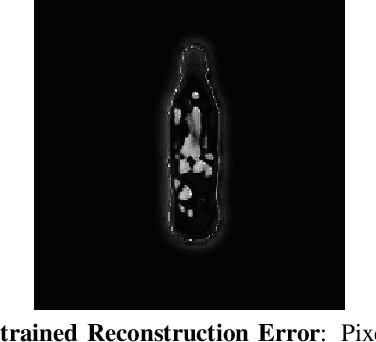

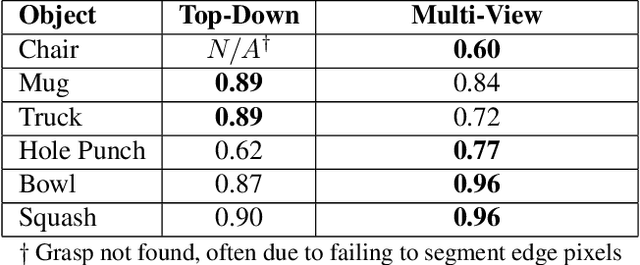

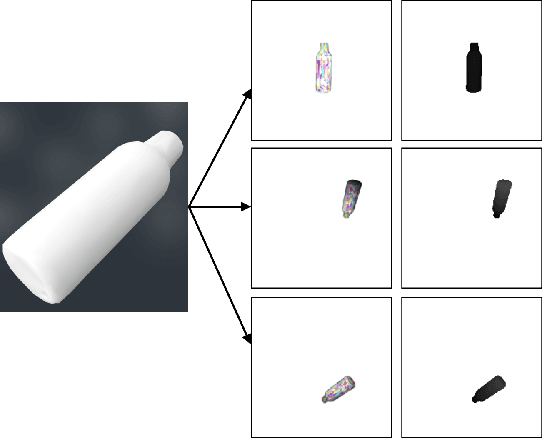

Recent consumer demand for home robots has accelerated progress in robotic grasping. However, a key component of the perception pipeline, the depth camera, is still expensive and inaccessible to most consumers. In addition, grasp planning has improved significantly in recent years by leveraging large datasets and cloud robotics, and by limiting the state and action space to top-down grasps with 4 degrees of freedom (DoF). By exploiting the multi-view geometry of the object, captured with inexpensive equipment such as off-the-shelf RGB cameras and reconstructed with state-of-the-art algorithms such as the Learnt Stereo Machine (LSM\cite{kar2017learning}), a robot can generate more robust grasps from different angles with 6 DoF. In this paper, we adapt LSM to graspable objects, evaluate the resulting grasps, and develop a 6-DoF grasp planner based on the Grasp Quality CNN (GQ-CNN\cite{mahler2017dex}) that exploits multiple camera views to plan a robust grasp, even when no top-down grasp is possible.
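To make the distinction between 4-DoF top-down grasps and 6-DoF grasps concrete, the following is a minimal sketch of one possible parameterization. It is an illustration only, not the planner described in the paper: the function names (`grasp_frame`, `top_down_grasp`, `six_dof_grasp`) and the choice of a (closing, binormal, approach) gripper frame are assumptions made for this example.

```python
import math

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def normalize(v):
    n = math.sqrt(dot(v, v))
    return tuple(x / n for x in v)

def grasp_frame(approach, angle):
    """Hypothetical helper: build a right-handed gripper frame.

    Returns unit vectors (closing, binormal, approach), where `angle`
    rotates the closing axis about the approach axis.
    """
    a = normalize(approach)
    # Pick any helper vector that is not parallel to the approach axis.
    helper = (1.0, 0.0, 0.0) if abs(a[0]) < 0.9 else (0.0, 1.0, 0.0)
    c0 = normalize(cross(helper, a))   # reference closing axis, perpendicular to a
    k = cross(a, c0)                   # completes the plane perpendicular to a
    closing = tuple(math.cos(angle) * p + math.sin(angle) * q
                    for p, q in zip(c0, k))
    binormal = cross(a, closing)
    return closing, binormal, a

def top_down_grasp(x, y, z, theta):
    """4-DoF grasp: position plus one angle; the approach axis is fixed to world -z."""
    return (x, y, z), grasp_frame((0.0, 0.0, -1.0), theta)

def six_dof_grasp(position, approach, angle):
    """6-DoF grasp: position plus a full orientation (free approach direction and angle)."""
    return tuple(position), grasp_frame(approach, angle)

# A top-down grasp always approaches along -z ...
_, (c_td, b_td, a_td) = top_down_grasp(0.1, 0.2, 0.3, 0.5)
# ... whereas a 6-DoF grasp can approach from the side, which 4 DoF cannot express.
_, (c_side, b_side, a_side) = six_dof_grasp((0.0, 0.0, 0.1), (1.0, 0.0, 0.0), 0.0)
```

In this sketch the 4-DoF grasp is simply the special case of the 6-DoF grasp whose approach axis is pinned to the vertical, which is why a planner restricted to it has no answer when the object cannot be grasped from above.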
