Zsófia Kallus

Hybrid Quantum-Classical Autoencoders for End-to-End Radio Communication

Jan 06, 2023

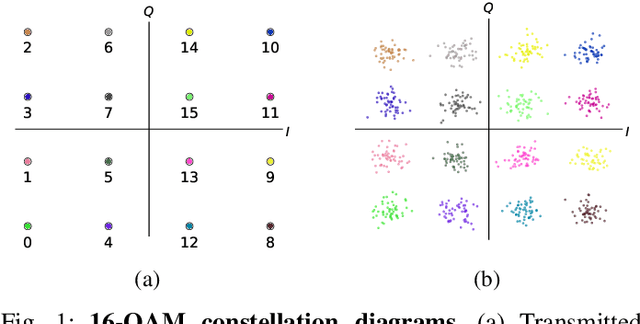

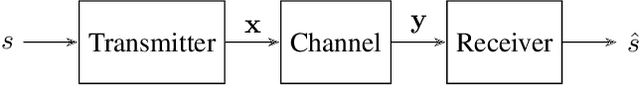

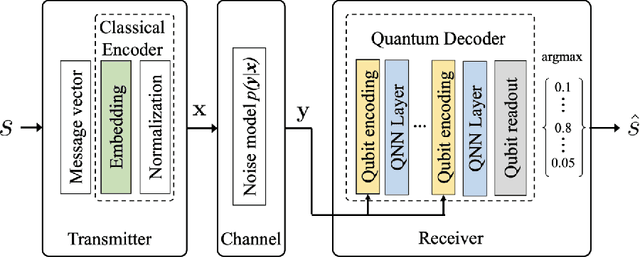

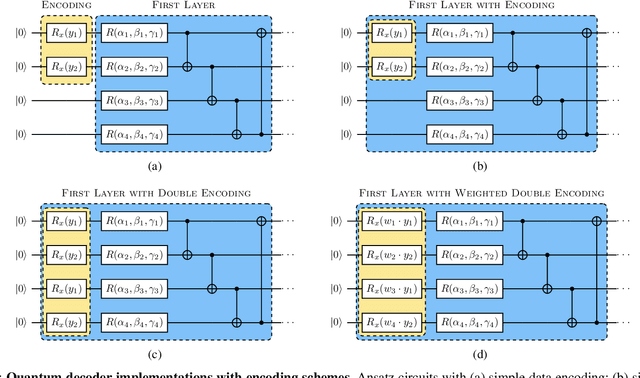

Abstract: Quantum neural networks are emerging as potential candidates to leverage noisy quantum processing units for applications. Here we introduce hybrid quantum-classical autoencoders for end-to-end radio communication. In the physical layer of classical wireless systems, we study the performance of simulated architectures for standard encoded radio signals over a noisy channel. We implement a hybrid model in which a quantum decoder in the receiver works with a classical encoder in the transmitter. The model learns a latent-space representation of the input symbols that is robust against signal degradation, and a generalized data re-uploading scheme for the qubit-based circuits allows it to meet the inference-time constraints of the application.

* 6 pages, 8 figures
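The data re-uploading idea mentioned in the abstract can be illustrated with a minimal single-qubit sketch in plain NumPy. This is the basic textbook scheme (the same input is re-encoded before every trainable layer), not the generalized scheme or the decoder architecture of the paper; the gate choice and the names `ry`, `rz`, and `reuploading_expectation` are assumptions made for illustration.

```python
import numpy as np

def ry(angle):
    """Single-qubit rotation about the Y axis."""
    c, s = np.cos(angle / 2), np.sin(angle / 2)
    return np.array([[c, -s], [s, c]], dtype=complex)

def rz(angle):
    """Single-qubit rotation about the Z axis."""
    return np.array(
        [[np.exp(-1j * angle / 2), 0], [0, np.exp(1j * angle / 2)]],
        dtype=complex,
    )

def reuploading_expectation(x, params):
    """Return <Z> after a data re-uploading circuit on one qubit.

    x      : scalar input feature (hypothetical encoding: RY(x))
    params : array of shape (layers, 2) holding the trainable
             (theta_y, theta_z) angles of each layer
    """
    state = np.array([1.0, 0.0], dtype=complex)  # start in |0>
    for theta_y, theta_z in params:
        state = ry(x) @ state                      # re-upload the input
        state = rz(theta_z) @ ry(theta_y) @ state  # trainable rotations
    probs = np.abs(state) ** 2
    return float(probs[0] - probs[1])

# Example: three layers with random trainable angles.
rng = np.random.default_rng(0)
params = rng.uniform(-np.pi, np.pi, size=(3, 2))
print(reuploading_expectation(0.7, params))
```

Because the input enters once per layer, adding layers makes the output a richer function of `x` without adding qubits, which is the usual motivation for the technique.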

Photonic Quantum Policy Learning in OpenAI Gym

Aug 29, 2021

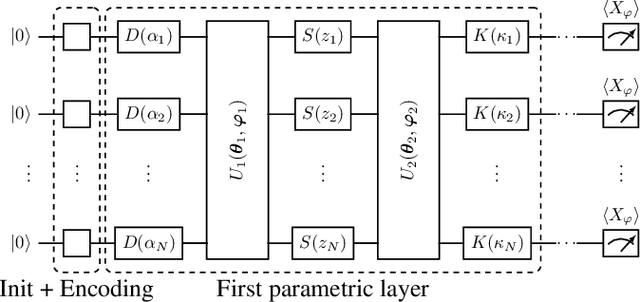

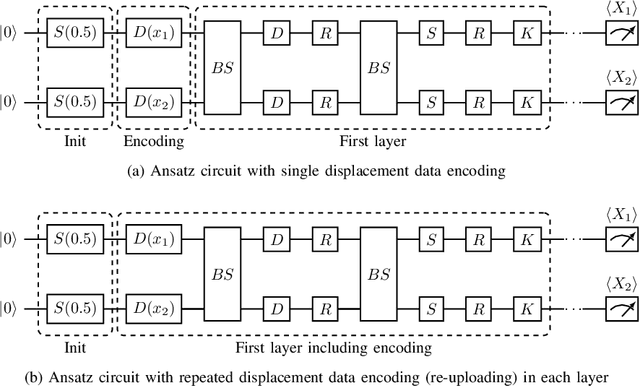

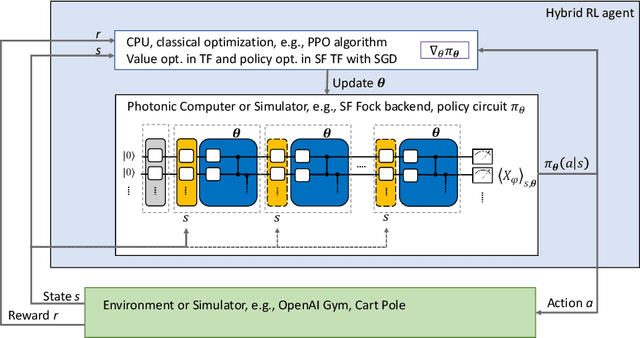

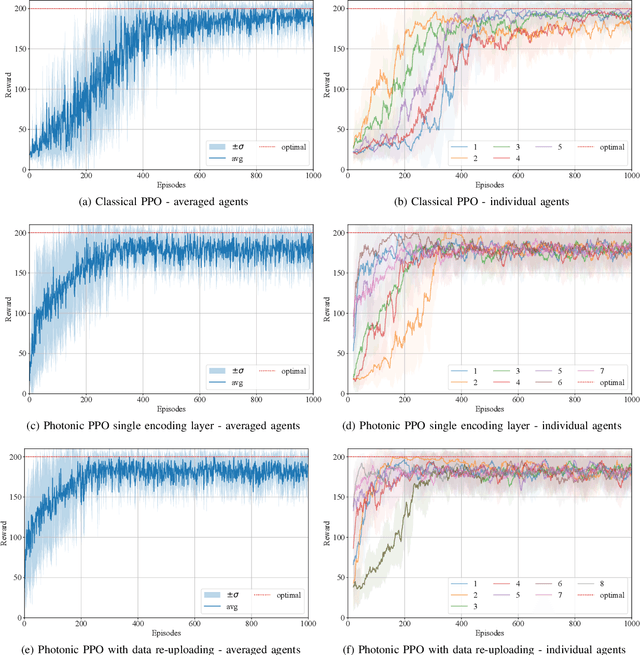

Abstract: In recent years, near-term noisy intermediate-scale quantum (NISQ) computing devices have become available. One of the most promising application areas for such NISQ prototypes is quantum machine learning. While quantum neural networks are widely studied for supervised learning, quantum reinforcement learning is still an emerging field. To solve a classical continuous control problem, we use a continuous-variable quantum machine learning approach. We introduce proximal policy optimization for photonic variational quantum agents and also study the effect of data re-uploading. We assess performance empirically using the Fock backend of the Strawberry Fields photonic simulator and a hybrid training framework connected to an OpenAI Gym environment and TensorFlow. For the restricted CartPole problem, the two variations of photonic policy learning reach performance comparable to, and converge faster than, a baseline classical neural network with the same number of trainable parameters.
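For readers unfamiliar with proximal policy optimization, the following sketch shows the standard clipped surrogate objective in NumPy. The abstract does not say which PPO variant or hyperparameters were used, so the clipped form, the function name `ppo_clip_objective`, and the default `clip_eps=0.2` are assumptions; the policy itself (photonic or classical) is outside the scope of this snippet.

```python
import numpy as np

def ppo_clip_objective(new_logp, old_logp, advantages, clip_eps=0.2):
    """Clipped PPO surrogate objective (to be maximized).

    new_logp   : log-probabilities of the taken actions under the current policy
    old_logp   : log-probabilities under the policy that collected the data
    advantages : advantage estimates for the same actions
    clip_eps   : clipping range (assumed value, not taken from the paper)
    """
    new_logp = np.asarray(new_logp, dtype=float)
    old_logp = np.asarray(old_logp, dtype=float)
    advantages = np.asarray(advantages, dtype=float)

    ratio = np.exp(new_logp - old_logp)  # probability ratio
    unclipped = ratio * advantages
    clipped = np.clip(ratio, 1.0 - clip_eps, 1.0 + clip_eps) * advantages
    # Taking the element-wise minimum removes the incentive to move the
    # policy far from the data-collecting policy in a single update.
    return float(np.mean(np.minimum(unclipped, clipped)))

# Example: a batch of three transitions with made-up numbers.
print(ppo_clip_objective([-0.9, -1.2, -0.3], [-1.0, -1.0, -0.5], [1.0, -0.5, 2.0]))
```

In a hybrid setup such as the one described, `new_logp` would come from the variational circuit's output distribution, and the negative of this objective would be handed to a gradient-based optimizer.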

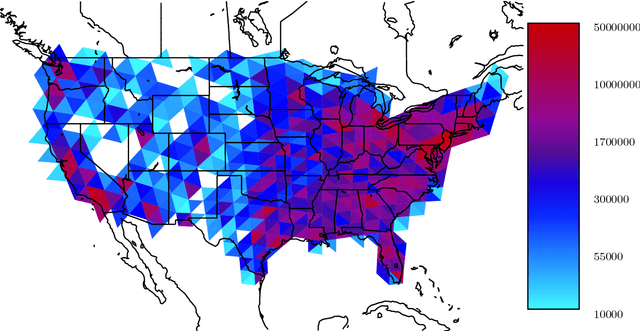

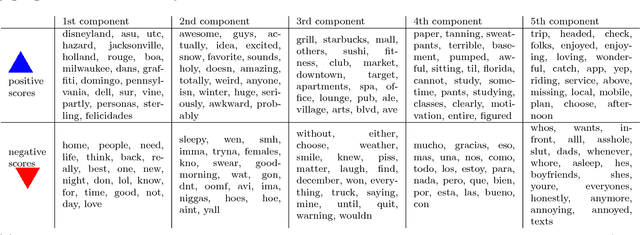

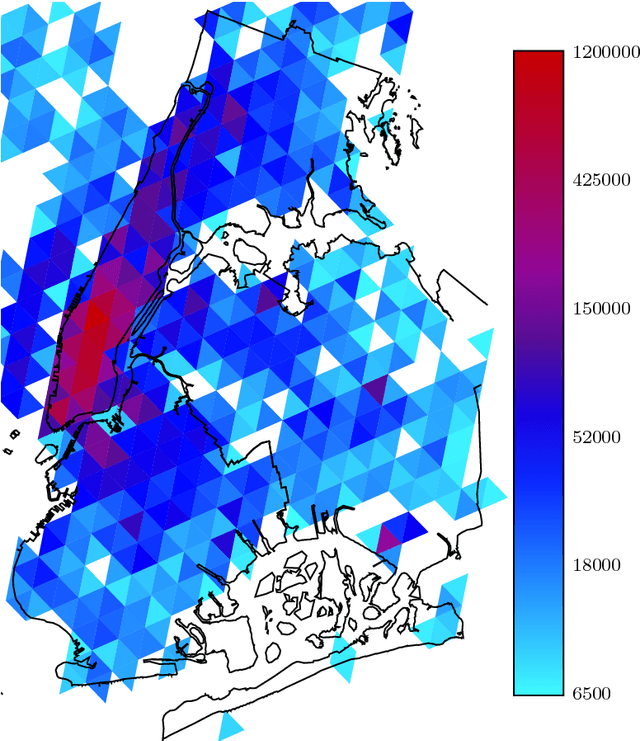

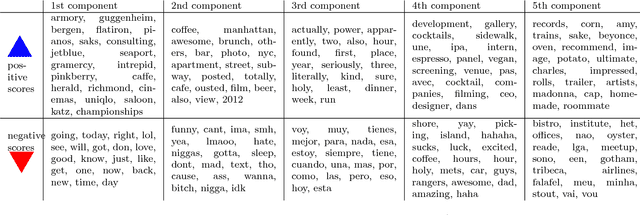

Using Robust PCA to estimate regional characteristics of language use from geo-tagged Twitter messages

Nov 05, 2013

Abstract: Principal component analysis (PCA) and related techniques have been successfully employed in natural language processing. Text mining applications in the age of online social media (OSM) face new challenges due to properties specific to these use cases (e.g. spelling issues specific to texts posted by users, the presence of spammers and bots, service announcements, etc.). In this paper, we employ a Robust PCA technique to separate typical outliers and highly localized topics from the low-dimensional structure present in language use in online social networks. Our focus is on identifying geospatial features among the messages posted by users of the Twitter microblogging service. Using a dataset of over 200 million geolocated tweets collected over the course of a year, we investigate whether the information present in word usage frequencies can be used to identify regional features of language use and topics of interest. Using the PCA pursuit method, we are able to identify important low-dimensional features, which constitute smoothly varying functions of the geographic location.
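The low-rank plus sparse separation described in the abstract can be sketched with a small principal component pursuit solver. The code below is a generic augmented-Lagrangian iteration with commonly used default parameters, written in NumPy; it is not the authors' implementation, and the function names, parameter defaults, and synthetic data are assumptions for illustration only.

```python
import numpy as np

def shrink(X, tau):
    """Element-wise soft thresholding."""
    return np.sign(X) * np.maximum(np.abs(X) - tau, 0.0)

def svd_threshold(X, tau):
    """Soft-threshold the singular values of X."""
    U, s, Vt = np.linalg.svd(X, full_matrices=False)
    return (U * shrink(s, tau)) @ Vt

def robust_pca(M, max_iter=1000, tol=1e-7):
    """Split M into a low-rank part L and a sparse part S with M ≈ L + S.

    Assumes M is a non-zero 2-D array. The choices of lam and mu are
    common defaults from the literature, not values from the paper.
    """
    n1, n2 = M.shape
    lam = 1.0 / np.sqrt(max(n1, n2))       # weight of the sparse term
    mu = n1 * n2 / (4.0 * np.abs(M).sum()) # penalty parameter
    S = np.zeros_like(M, dtype=float)
    Y = np.zeros_like(M, dtype=float)      # Lagrange multipliers
    L = np.zeros_like(M, dtype=float)
    for _ in range(max_iter):
        L = svd_threshold(M - S + Y / mu, 1.0 / mu)  # low-rank update
        S = shrink(M - L + Y / mu, lam / mu)         # sparse update
        residual = M - L - S
        Y = Y + mu * residual
        if np.linalg.norm(residual) <= tol * np.linalg.norm(M):
            break
    return L, S

# Example on synthetic data: a rank-2 matrix with a few large outliers.
rng = np.random.default_rng(0)
low_rank = rng.normal(size=(40, 2)) @ rng.normal(size=(2, 40))
outliers = np.zeros((40, 40))
mask = rng.random((40, 40)) < 0.05
outliers[mask] = 10.0 * rng.choice([-1.0, 1.0], size=mask.sum())
L, S = robust_pca(low_rank + outliers)
print(np.linalg.norm(low_rank + outliers - L - S))
```

In the setting of the abstract, the rows and columns of `M` would correspond to geographic cells and word-frequency features (an assumed layout), with `L` capturing smoothly varying regional structure and `S` absorbing outliers and highly localized topics.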
