Zilong Ji

Vision at A Glance: Interplay between Fine and Coarse Information Processing Pathways

Aug 23, 2020

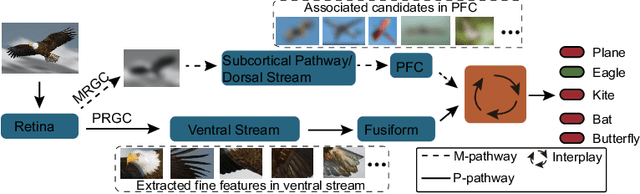

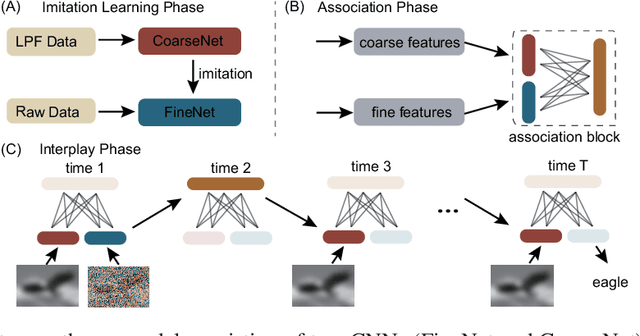

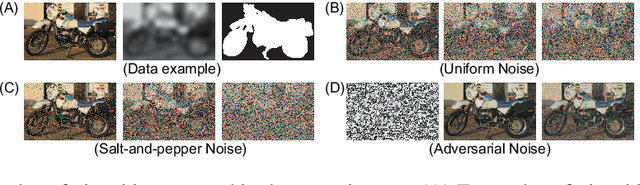

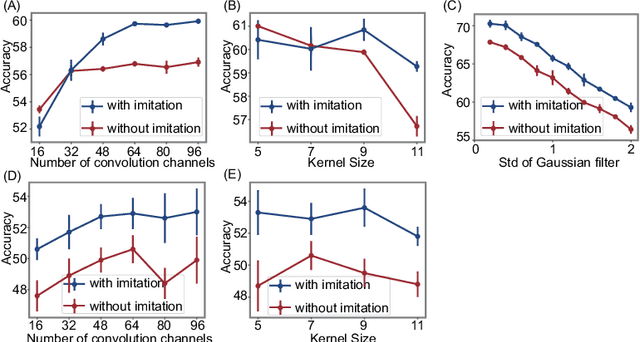

Abstract: Object recognition is often viewed as a feedforward, bottom-up process in machine learning, but in real neural systems it is a complicated process that involves the interplay between two signal pathways. One is the parvocellular pathway (P-pathway), which is slow and extracts fine features of objects; the other is the magnocellular pathway (M-pathway), which is fast and extracts coarse features of objects. It has been suggested that the interplay between the two pathways endows the neural system with the capacity to process visual information rapidly, adaptively, and robustly. However, the underlying computational mechanisms remain largely unknown. In this study, we build a computational model to elucidate the computational advantages associated with the interactions between the two pathways. Our model consists of two convolutional neural networks: one mimics the P-pathway, referred to as FineNet, which is deep, has small kernels, and receives detailed visual inputs; the other mimics the M-pathway, referred to as CoarseNet, which is shallow, has large kernels, and receives low-pass filtered or binarized visual inputs. The two pathways interact with each other via a Restricted Boltzmann Machine. We find that: 1) FineNet can teach CoarseNet through imitation and improve its performance considerably; 2) CoarseNet can improve the noise robustness of FineNet through association; 3) the output of CoarseNet can serve as a cognitive bias to improve the performance of FineNet. We hope that this study will provide insight into visual information processing and inspire the development of new object recognition architectures.
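The abstract says CoarseNet receives low-pass filtered or binarized inputs but does not specify the filter or the threshold. The following is a minimal sketch of what such preprocessing could look like, assuming a simple box blur and a mean-intensity threshold. The function names `low_pass` and `binarize` and the kernel size are illustrative assumptions, not details from the paper.

```python
import numpy as np

def low_pass(image, k=5):
    """Box blur (assumed filter): each pixel becomes the mean of its k x k neighborhood.

    `k` is assumed to be odd so the output keeps the input shape.
    """
    pad = k // 2
    padded = np.pad(image, pad, mode="edge")  # replicate border pixels
    h, w = image.shape
    out = np.zeros((h, w), dtype=float)
    # Sum the k*k shifted copies of the image, then normalize.
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + h, dx:dx + w]
    return out / (k * k)

def binarize(image, threshold=None):
    """Map pixels to 0/1; the mean-intensity default threshold is an assumption."""
    if threshold is None:
        threshold = image.mean()
    return (image > threshold).astype(float)

# Example: a small grayscale ramp image as a stand-in for a detailed input.
fine_input = np.arange(16, dtype=float).reshape(4, 4)
coarse_blurred = low_pass(fine_input, k=3)
coarse_binary = binarize(fine_input)
```

In this sketch the detailed image would go to the fine pathway unchanged, while either coarse variant would go to the coarse pathway.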

Unsupervised Few-shot Learning via Self-supervised Training

Dec 20, 2019

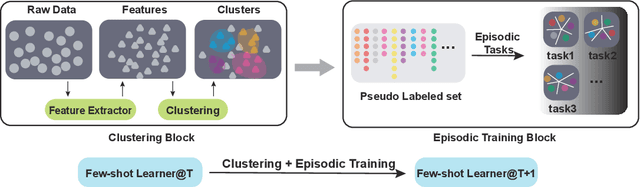

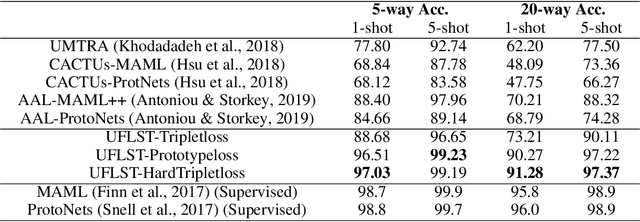

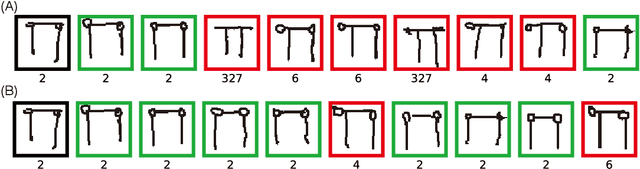

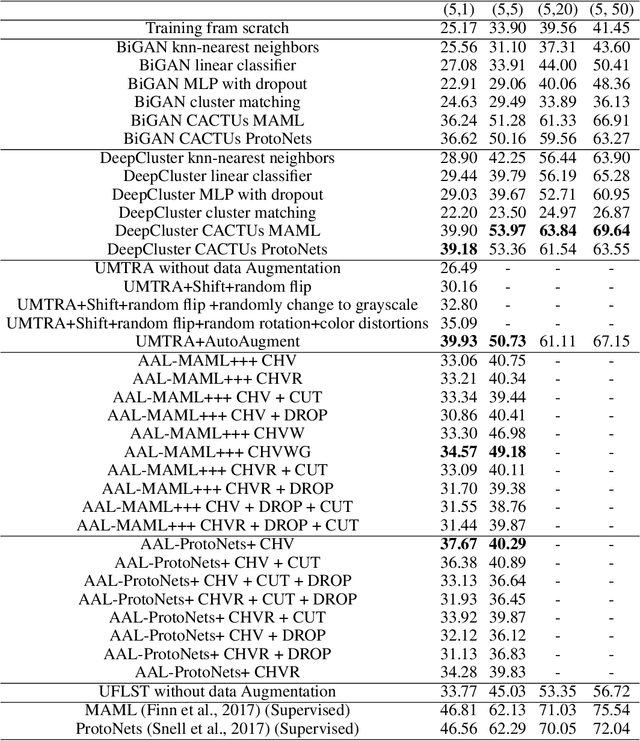

Abstract: Learning from limited exemplars (few-shot learning) is a fundamental, unsolved problem that has been laboriously explored in the machine learning community. However, current few-shot learners are mostly supervised and rely heavily on a large number of labeled examples. Unsupervised learning is a more natural procedure for cognitive mammals and has produced promising results in many machine learning tasks. In the current study, we develop a method to learn an unsupervised few-shot learner via self-supervised training (UFLST), which can effectively generalize to novel but related classes. The proposed model consists of two alternating processes, progressive clustering and episodic training. The former generates pseudo-labeled training examples for constructing episodic tasks; the latter trains the few-shot learner using the generated episodic tasks, which further optimizes the feature representations of the data. The two processes facilitate each other and eventually produce a high-quality few-shot learner. Using the benchmark datasets Omniglot and Mini-ImageNet, we show that our model outperforms other unsupervised few-shot learning methods. Using the benchmark dataset Market1501, we further demonstrate the feasibility of applying our model to a real-world task, person re-identification.
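To make the link between pseudo-labels and episodic tasks concrete, here is a hedged sketch of how one N-way K-shot episode could be sampled once clustering has assigned a pseudo-label to each example. The function `sample_episode` and its parameters are hypothetical; the abstract does not describe the authors' sampling procedure or clustering algorithm.

```python
import numpy as np

def sample_episode(pseudo_labels, n_way, k_shot, n_query, rng):
    """Sample support/query indices for one episode from cluster pseudo-labels.

    Only clusters with at least k_shot + n_query members are eligible.
    """
    labels = np.asarray(pseudo_labels)
    classes, counts = np.unique(labels, return_counts=True)
    eligible = classes[counts >= k_shot + n_query]
    if len(eligible) < n_way:
        raise ValueError("not enough sufficiently large clusters for this episode")
    chosen = rng.choice(eligible, size=n_way, replace=False)
    support, query = [], []
    for c in chosen:
        # Shuffle the members of this cluster, then split them.
        idx = rng.permutation(np.flatnonzero(labels == c))
        support.extend(idx[:k_shot])
        query.extend(idx[k_shot:k_shot + n_query])
    return np.array(support), np.array(query), chosen

# Example: 5 pseudo-classes with 6 examples each, one 3-way 2-shot episode.
rng = np.random.default_rng(0)
labels = np.repeat(np.arange(5), 6)
support_idx, query_idx, classes = sample_episode(labels, 3, 2, 3, rng)
```

In an alternating scheme like the one described, episodes of this kind would train the learner, and the updated features would then be re-clustered to refresh the pseudo-labels.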

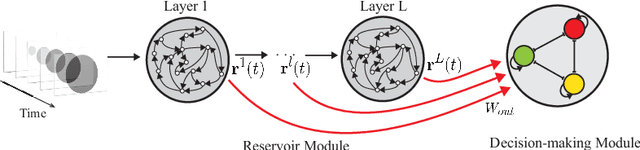

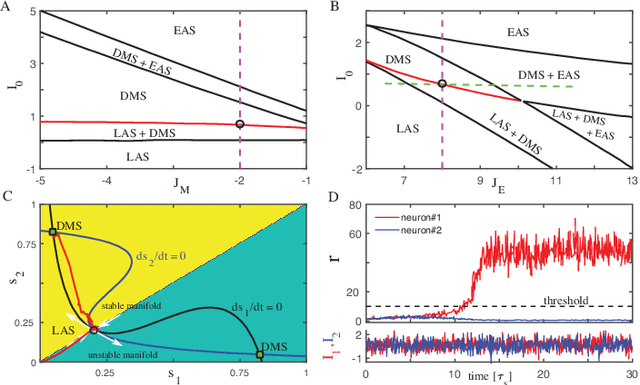

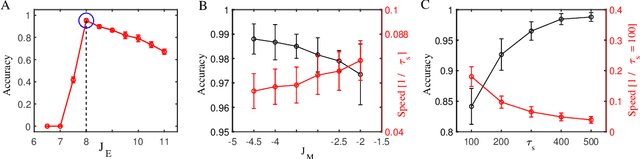

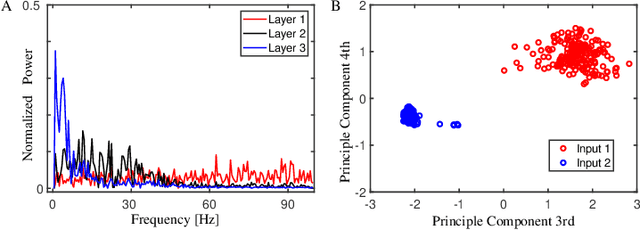

Spatiotemporal Information Processing with a Reservoir Decision-making Network

Jul 28, 2019

Abstract: Spatiotemporal information processing is fundamental to brain functions. The present study investigates a canonical neural network model for spatiotemporal pattern recognition. Specifically, the model consists of two modules, a reservoir subnetwork and a decision-making subnetwork. The former projects complex spatiotemporal patterns into spatially separated neural representations, and the latter reads out these neural representations by integrating information over time; the two modules are combined via supervised learning using known examples. We elucidate the working mechanism of the model and demonstrate its feasibility for discriminating complex spatiotemporal patterns. Our model reproduces the phenomenon of recognizing looming patterns in the neural system, and can learn to discriminate gait with very few training examples. We hope this study provides insight into how spatiotemporal information is processed in the brain and helps to develop brain-inspired algorithms.
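The two-module idea (a reservoir that expands a temporal input, followed by a readout that integrates over time) can be illustrated with a generic echo-state-style sketch. This is not the authors' model: the tanh reservoir, the time-averaged state, and the ridge-regression readout are stand-ins chosen for brevity, and every name and parameter below is an assumption.

```python
import numpy as np

def make_reservoir(n_in, n_res, spectral_radius=0.9, seed=0):
    """Random input and recurrent weights, rescaled to a target spectral radius."""
    rng = np.random.default_rng(seed)
    w_in = rng.uniform(-1.0, 1.0, size=(n_res, n_in))
    w = rng.normal(size=(n_res, n_res))
    w *= spectral_radius / np.max(np.abs(np.linalg.eigvals(w)))
    return w_in, w

def time_integrated_state(sequence, w_in, w):
    """Drive the reservoir with a (T, n_in) sequence and average its state over time."""
    x = np.zeros(w.shape[0])
    total = np.zeros_like(x)
    for u in sequence:
        x = np.tanh(w_in @ u + w @ x)
        total += x
    return total / len(sequence)

def fit_readout(features, targets, ridge=1e-3):
    """Supervised linear readout via ridge regression (a stand-in for the decision module)."""
    f = np.asarray(features)
    a = f.T @ f + ridge * np.eye(f.shape[1])
    return np.linalg.solve(a, f.T @ np.asarray(targets))

# Example: four random sequences of length 10 with 2 input channels, 2 classes.
w_in, w = make_reservoir(n_in=2, n_res=20)
rng = np.random.default_rng(1)
sequences = [rng.normal(size=(10, 2)) for _ in range(4)]
features = np.stack([time_integrated_state(s, w_in, w) for s in sequences])
targets = np.eye(2)[[0, 1, 0, 1]]          # one-hot labels
w_out = fit_readout(features, targets)      # shape (20, 2)
scores = features @ w_out                   # class scores per sequence
```

Only the readout weights are trained here; the reservoir stays fixed, which is the usual design choice in reservoir computing.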
