Zifeng Hou

A New Data Normalization Method to Improve Dialogue Generation by Minimizing Long Tail Effect

May 04, 2020

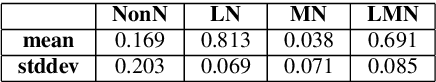

Abstract: Recent neural models have made significant progress in dialogue generation, and most of them are built on language models. However, because of the long-tail phenomenon in linguistics, trained models tend to generate words that appear frequently in the training data, which makes their responses monotonous. To address this issue, we analyze a large corpus from Wikipedia and propose three frequency-based data normalization methods. We conduct extensive experiments with transformers on three datasets, collected from social media, subtitles, and an industrial application, respectively. Experimental results demonstrate significant improvements in the diversity and informativeness (defined as the numbers of nouns and verbs) of generated responses. Specifically, unigram and bigram diversity increase by 2.6%-12.6% and 2.2%-18.9%, respectively, across the three datasets. The numbers of nouns and verbs increase by 4.0%-7.0% and 1.4%-12.1%, respectively. Because the methods are simple and effective, they can be adapted to different generation models without much extra computational cost.
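
As an illustration of the diversity measure, the sketch below computes a distinct-n score: the number of unique n-grams divided by the total number of n-grams in a set of responses. The abstract does not define how unigram and bigram diversity are calculated, so this common formulation is an assumption. The function name `distinct_n`, the whitespace tokenization, and the sample responses are hypothetical and are not taken from the paper.

```python
from collections import Counter


def distinct_n(responses, n):
    """Return unique n-grams / total n-grams over all responses.

    Assumption: tokens are obtained by whitespace splitting; the paper's
    actual tokenization and metric definition are not given in the abstract.
    """
    ngrams = Counter()
    for response in responses:
        tokens = response.split()
        # Slide a window of length n over the token sequence.
        for i in range(len(tokens) - n + 1):
            ngrams[tuple(tokens[i:i + n])] += 1
    total = sum(ngrams.values())
    return len(ngrams) / total if total else 0.0


# Hypothetical generated responses, for illustration only.
responses = ["i do not know", "i do not think so", "that sounds great"]
unigram_diversity = distinct_n(responses, 1)
bigram_diversity = distinct_n(responses, 2)
print(unigram_diversity, bigram_diversity)
```

Under this definition, a model that keeps repeating frequent words such as "i do not" scores lower, because the repeated n-grams add to the total count without adding to the unique count.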
