Zhuoran Ji

ILP-M Conv: Optimize Convolution Algorithm for Single-Image Convolution Neural Network Inference on Mobile GPUs

Oct 03, 2019

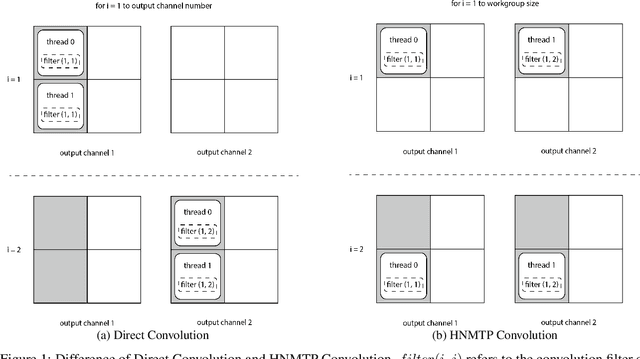

Abstract: Convolution neural networks are widely used in mobile applications. However, GPU convolution algorithms are designed for mini-batch neural network training, and single-image convolution neural network inference on mobile GPUs is not well studied. After discussing the difference in usage and examining the existing convolution algorithms, we propose the HNTMP convolution algorithm. To the best of our knowledge, the HNTMP convolution algorithm achieves a $14.6 \times$ speedup over the most popular *im2col* convolution algorithm and a $2.30 \times$ speedup over the fastest existing convolution algorithm (direct convolution).

HG-Caffe: Mobile and Embedded Neural Network GPU Inference Engine with FP16 Supporting

Jan 03, 2019

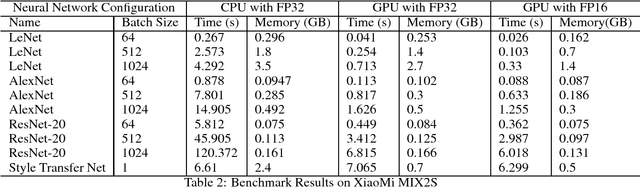

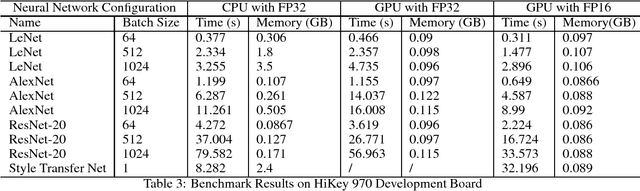

Abstract: Breakthroughs in the fields of deep learning and mobile system-on-chips are radically changing the way we use our smartphones. However, deep neural network inference is still a challenging task for edge AI devices due to the computational overhead on mobile CPUs and a severe drain on batteries. In this paper, we present a deep neural network inference engine named HG-Caffe, which supports GPUs with half precision. HG-Caffe provides up to 20 times speedup with GPUs compared to the original implementations. In addition to the speedup, the peak memory usage is also reduced to about 80%. With HG-Caffe, more innovative and fascinating mobile applications will be turned into reality.

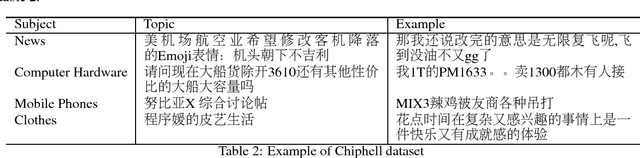

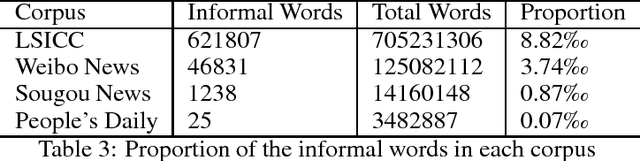

LSICC: A Large Scale Informal Chinese Corpus

Nov 26, 2018

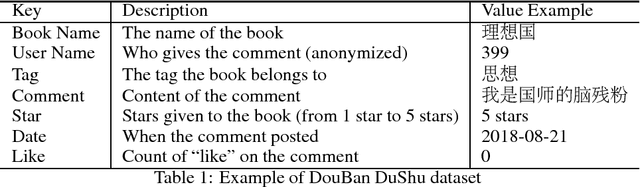

Abstract: Deep learning based natural language processing models have proven powerful, but they need large-scale datasets. Because of the significant gap between real-world tasks and existing Chinese corpora, in this paper we introduce a large-scale corpus of informal Chinese. This corpus contains around 37 million book reviews and 50 thousand netizens' comments on news. We explore the frequencies of informal words in the corpus and show the difference between our corpus and the existing ones. The corpus can be further used to train deep learning based models for natural language processing tasks such as Chinese word segmentation and sentiment analysis.
