HG-Caffe: Mobile and Embedded Neural Network GPU Inference Engine with FP16 Supporting


Jan 03, 2019

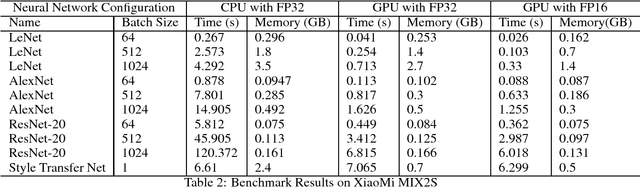

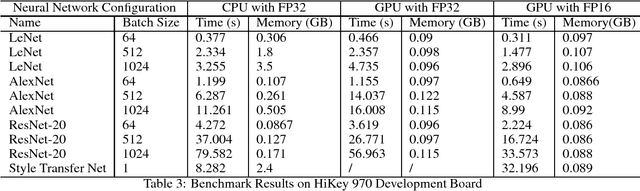

Breakthroughs in deep learning and mobile system-on-chips are radically changing the way we use our smartphones. However, deep neural network inference remains a challenging task for edge AI devices because of the computational overhead on mobile CPUs and the severe battery drain it causes. In this paper, we present HG-Caffe, a deep neural network inference engine that supports GPU execution in half precision (FP16). HG-Caffe provides up to a 20x speedup on GPUs compared to the original implementations. In addition to the speedup, peak memory usage is reduced to about 80% of the original. With HG-Caffe, more innovative and fascinating mobile applications can be turned into reality.
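As a rough illustration of what half precision means for storage, the sketch below packs a few values as IEEE 754 FP32 and FP16 using only Python's standard `struct` module. This is not HG-Caffe code: the `weights` list and the `fp16_roundtrip` helper are hypothetical names chosen for the example, and it says nothing about how the engine itself stores or computes tensors.

```python
import struct


def fp16_roundtrip(values):
    """Pack floats as IEEE 754 half precision, then unpack them again."""
    fmt = f"<{len(values)}e"  # 'e' is the struct code for a 2-byte float
    packed = struct.pack(fmt, *values)
    return packed, list(struct.unpack(fmt, packed))


# Hypothetical sample weights, chosen only for illustration.
weights = [0.5, -1.25, 3.140625, 0.1]

# 'f' is the struct code for a 4-byte (single precision) float.
fp32_bytes = struct.pack(f"<{len(weights)}f", *weights)
fp16_bytes, restored = fp16_roundtrip(weights)

# Largest absolute rounding error introduced by storing in FP16.
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print("FP32 bytes:", len(fp32_bytes))
print("FP16 bytes:", len(fp16_bytes))
print("max rounding error:", max_error)
```

Each value takes half as many bytes in FP16, at the cost of a small rounding error for values such as 0.1 that are not exactly representable. The abstract's figure of about 80% refers to overall peak memory, which this sketch does not model.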
