Zhong Shao

Novelty Detection via Network Saliency in Visual-based Deep Learning

Jun 09, 2019

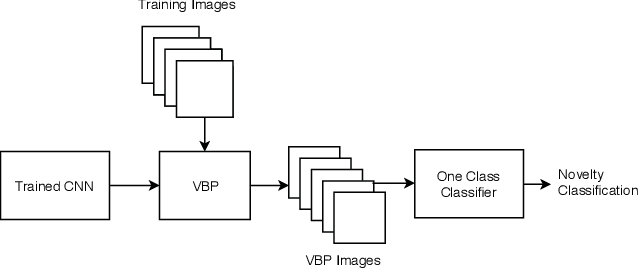

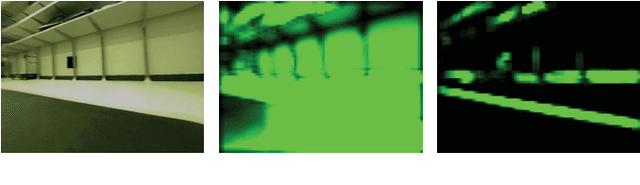

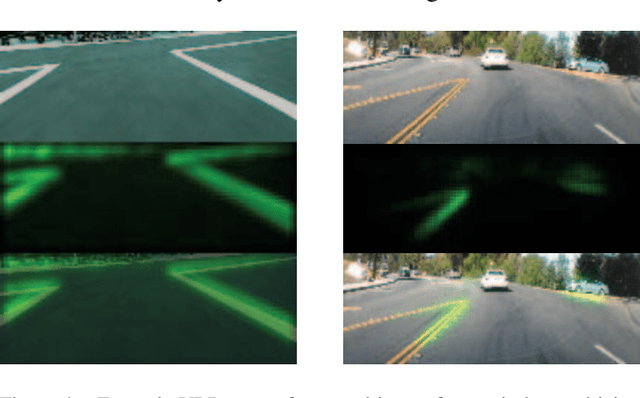

Abstract: Machine-learning-driven safety-critical autonomous systems, such as self-driving cars, must be able to detect situations in which their trained model cannot make a trustworthy prediction. Because such a model is often viewed as a black box, it is not obvious when it will make a safe decision and when it will make an erroneous, perhaps life-threatening one. Prior work on novelty detection deals with highly structured data and does not translate well to dynamic, real-world situations. This paper proposes a multi-step framework for detecting novel scenarios in vision-based autonomous systems by leveraging information learned by the trained prediction model and a new image similarity metric. We demonstrate the efficacy of this method through experiments on a real-world driving dataset as well as on our in-house indoor racing environment.
