Zhi Lai

Multi-Dimensional Self Attention based Approach for Remaining Useful Life Estimation

Dec 12, 2022

Abstract:Remaining Useful Life (RUL) estimation plays a critical role in Prognostics and Health Management (PHM). Traditional machine health maintenance systems are often costly, require substantial prior expertise, and are difficult to fit into highly complex and changing industrial scenarios. With the widespread deployment of sensors on industrial equipment, building the Industrial Internet of Things (IIoT) to interconnect these devices has become an inexorable trend in the development of the digital factory. By feeding a device's real-time operational data, collected through the IIoT, into an RUL prediction algorithm, the PHM system can develop proactive maintenance measures for the device, thus reducing maintenance costs and the number of failures during operation. This paper studies remaining useful life prediction models for multi-sensor devices in the IIoT scenario. We investigate the mainstream RUL prediction models and summarize the basic steps of RUL prediction modeling in this scenario. On this basis, a data-driven approach for RUL estimation is proposed. It employs a Multi-Head Attention Mechanism to fuse the multi-dimensional time-series data output from multiple sensors, in which attention on features is used to capture the interactions between features and attention on sequences is used to learn the weights of time steps. Then, a Long Short-Term Memory Network is applied to learn the features of the time series. We evaluate the proposed model on two benchmark datasets (C-MAPSS and PHM08), and the results demonstrate that it outperforms the state-of-the-art models. Moreover, through the interpretability of the multi-head attention mechanism, the proposed model can provide a preliminary explanation of engine degradation. Therefore, this approach is promising for predictive maintenance in IIoT scenarios.

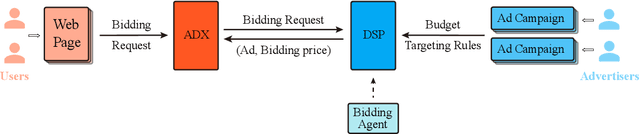

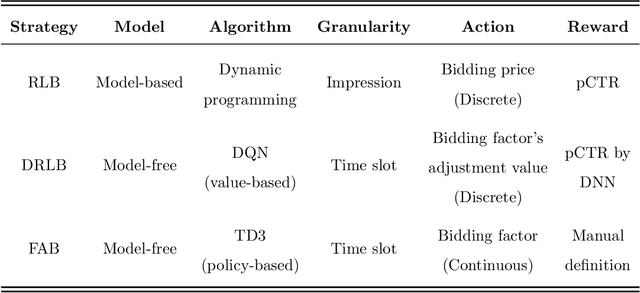

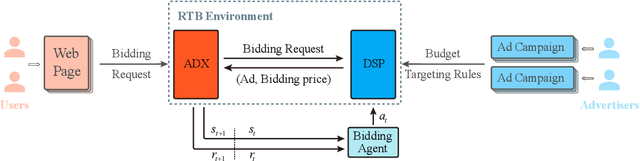

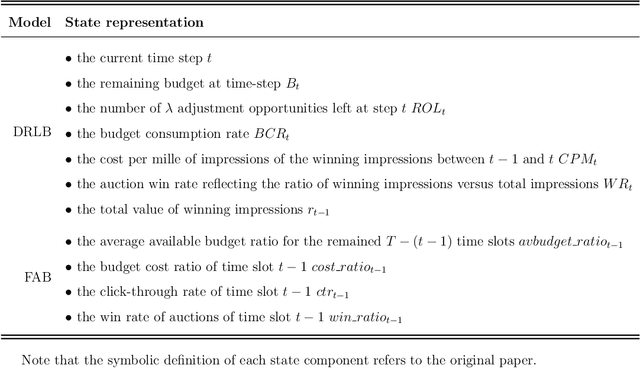

Real-time Bidding Strategy in Display Advertising: An Empirical Analysis

Nov 30, 2022

Abstract:Bidding strategies that help advertisers determine bidding prices are receiving increasing attention as more and more ad impressions are sold through real-time bidding systems. This paper first describes the problem and challenges of optimizing bidding strategies for individual advertisers in real-time bidding display advertising. Then, several representative bidding strategies are introduced, especially the research advances and challenges of reinforcement learning-based bidding strategies. Further, we quantitatively evaluate the performance of several representative bidding strategies on the iPinYou dataset. Specifically, we examine the effects of state, action, and reward function on the performance of reinforcement learning-based bidding strategies. Finally, we summarize the general steps for optimizing bidding strategies using reinforcement learning algorithms and present our suggestions.
