Zhexin Shen

Proficiency Aware Multi-Agent Actor-Critic for Mixed Aerial and Ground Robot Teaming

Feb 10, 2020

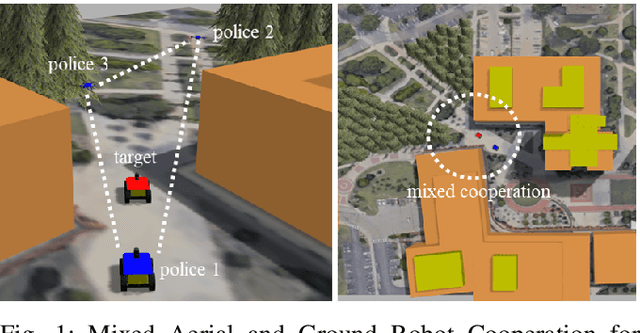

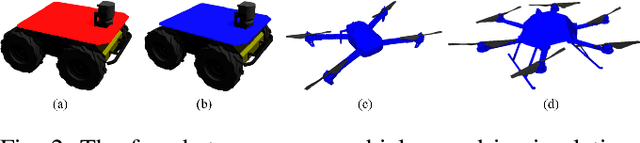

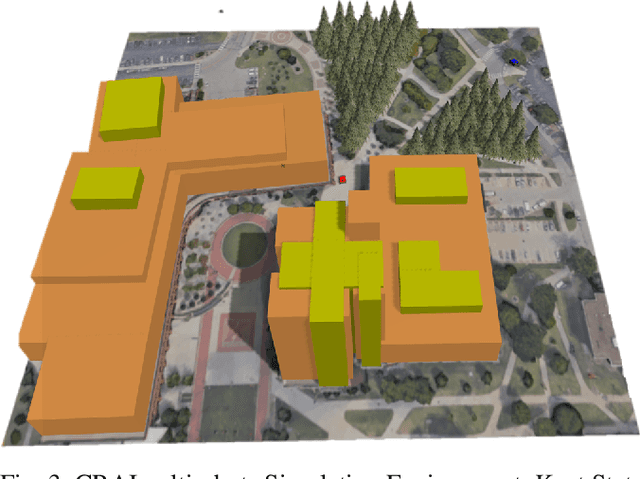

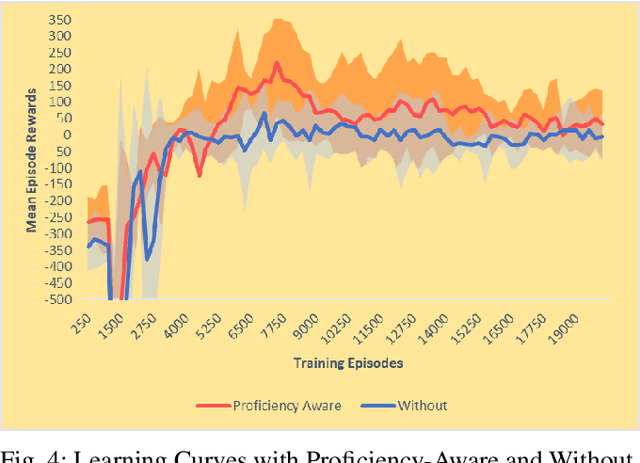

Abstract: Mixed cooperation and competition is the realistic setting in which multi-robot systems are deployed, for example multi-UAV/UGV teaming for tracking criminal vehicles and protecting important individuals. The types of robots and the total number of robots are both important factors that influence the quality of mixed cooperation. Real-world environments such as open space, forest, and urban building clusters strongly influence robot deployment, because different robots have different configurations suited to different environments. For example, UGVs are good at moving on urban roads and reaching forest areas, while UAVs are good at flying in open space and around tall building clusters. However, it is challenging to design collective behaviors for robot cooperation that adapt to dynamic changes in robot capabilities, working status, and environmental constraints. To address this problem, we propose a novel proficiency-aware, mixed-environment multi-agent deep reinforcement learning method (Mix-DRL). In Mix-DRL, robot capability and environment factors are formalized in the model and used to update the policy, so that it captures the nonlinear relations between heterogeneous team deployment strategies and real-world environmental conditions. Mix-DRL can largely exploit robot capability while staying aware of environmental limitations. Mix-DRL was validated with a heterogeneous team of 2 UAVs and 2 UGVs in tasks such as criminal vehicle tracking for social security, where it yielded a $14.20\%$ improvement in cooperation. Given its general setting, Mix-DRL can be used to guide the cooperation of UAVs and UGVs for multi-target tracking.
