Zhenzhen Qian

Exemplar-based Generative Facial Editing

May 31, 2020

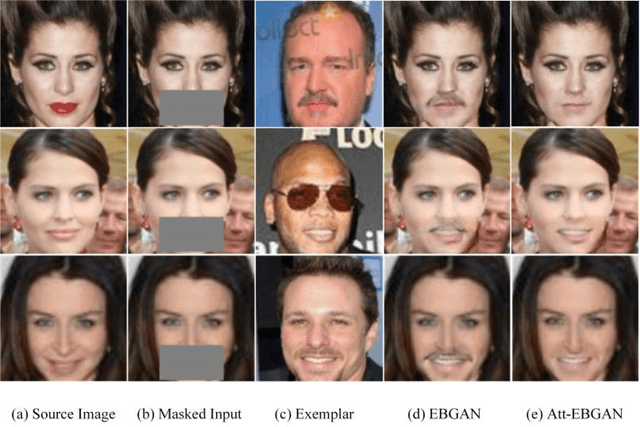

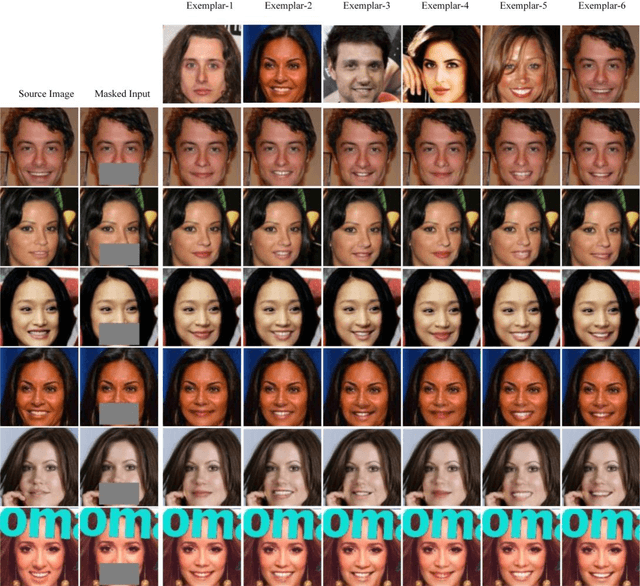

Abstract: Image synthesis has witnessed substantial progress due to the increasing power of generative models. In this paper, we propose a novel generative approach for exemplar-based facial editing in the form of region inpainting. Our method first masks the facial editing region to eliminate the pixel constraints of the original image; exemplar-based facial editing is then achieved by learning the corresponding information from the reference image to complete the masked region. In addition, we impose an attribute label constraint to model disentangled encodings, in order to avoid transferring undesired information from the exemplar to the editing region of the original image. Experimental results demonstrate that our method can produce diverse and personalized face editing results and provides far more user control flexibility than nearly all existing methods.
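The masking step described in the abstract can be illustrated with a minimal sketch. It assumes a rectangular editing region and a toy grayscale image stored as nested lists; the function name `mask_region`, the box format, and the fill value are hypothetical and not taken from the paper, whose actual mask shapes and preprocessing are not specified here.

```python
def mask_region(image, box, fill=0):
    """Return a masked copy of `image` and a binary mask for `box`.

    `box` is (top, left, bottom, right) with exclusive bottom/right.
    This layout is an assumption made for illustration only.
    """
    top, left, bottom, right = box
    masked = [row[:] for row in image]          # copy so the original is untouched
    mask = [[0] * len(row) for row in image]    # 1 marks pixels to be inpainted
    for r in range(top, bottom):
        for c in range(left, right):
            masked[r][c] = fill                 # drop the original pixel constraint
            mask[r][c] = 1
    return masked, mask


# Toy 3x4 "image"; a generator would later fill the masked pixels
# using information from a reference (exemplar) image.
image = [[1, 2, 3, 4],
         [5, 6, 7, 8],
         [9, 10, 11, 12]]
masked, mask = mask_region(image, (1, 1, 3, 3))
```

Here `masked` and `mask` would be the inputs to an inpainting network; the network itself is outside the scope of this sketch.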

MulGAN: Facial Attribute Editing by Exemplar

Dec 28, 2019

Abstract: Recent studies on face attribute editing by exemplars have achieved promising results due to the increasing power of deep convolutional networks and generative adversarial networks. These methods encode the attribute-related information of images into a predefined region of the latent feature space by training the model on pairs of images with opposite attributes. Face attribute transfer between the input image and the exemplar is then achieved by exchanging their attribute-related latent feature regions. However, these methods suffer from three limitations: (1) the model must be trained using a pair of images with opposite attributes as input; (2) the capability of editing multiple attributes by exemplars is weak; (3) the quality of the generated images is poor. Instead of imposing opposite-attribute constraints on the input images so that their attribute information is encoded in the predefined region of the latent feature space, in this work we directly apply the attribute label constraint to the predefined region of the latent feature space. Meanwhile, an attribute classification loss is employed to make the model learn to extract the attribute-related information of images into the predefined latent feature region of the corresponding attribute, which enables our method to transfer multiple attributes of the exemplar simultaneously. In addition, a novel model structure is designed to enhance attribute transfer by exemplars while improving the quality of the generated images. Experiments on the CelebA dataset, with comparisons against other methods, demonstrate the effectiveness of our model in overcoming the above three limitations.
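The latent-region exchange described in the abstract can be sketched as follows. This is only an illustration under stated assumptions: latent codes are flat lists, each attribute owns a fixed contiguous slice, and the names `REGIONS` and `swap_attribute_regions` as well as the slice boundaries are hypothetical rather than taken from MulGAN. The encoder and decoder are omitted.

```python
# Hypothetical layout: each attribute owns a fixed slice of the latent code;
# the remaining entries hold attribute-irrelevant information.
REGIONS = {"smiling": (0, 2), "eyeglasses": (2, 4)}


def swap_attribute_regions(z_input, z_exemplar, regions, attributes):
    """Exchange the latent slices of the chosen attributes between two codes."""
    z_in, z_ex = list(z_input), list(z_exemplar)   # work on copies
    for name in attributes:
        start, stop = regions[name]
        # Swap only the slice reserved for this attribute.
        z_in[start:stop], z_ex[start:stop] = z_ex[start:stop], z_in[start:stop]
    return z_in, z_ex


z_a = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6]   # code of the input image (toy values)
z_b = [1.1, 1.2, 1.3, 1.4, 1.5, 1.6]   # code of the exemplar (toy values)

# Transfer one attribute, or several at once by listing more names.
new_a, new_b = swap_attribute_regions(z_a, z_b, REGIONS, ["smiling"])
both_a, both_b = swap_attribute_regions(z_a, z_b, REGIONS, ["smiling", "eyeglasses"])
```

Decoding `new_a` would, under these assumptions, yield the input face with the exemplar's "smiling" attribute, while the entries outside the swapped slices stay unchanged.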
