Zhanxin Gao

Consistent Prompting for Rehearsal-Free Continual Learning

Mar 14, 2024

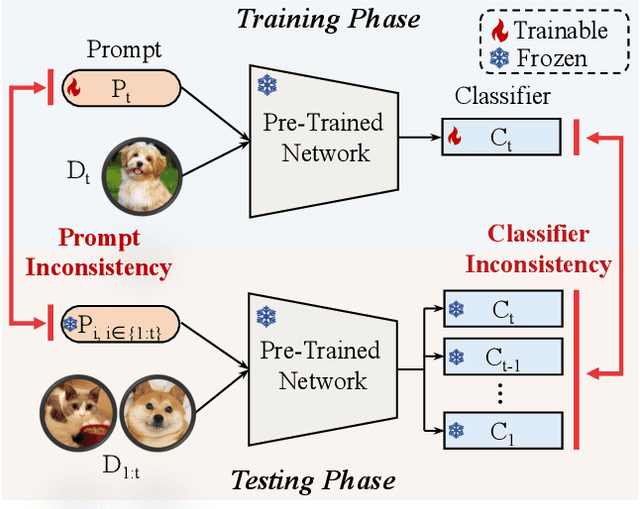

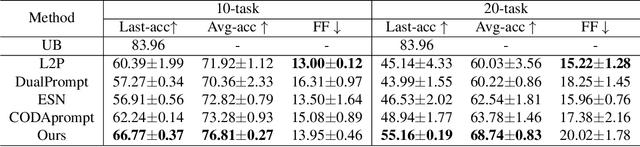

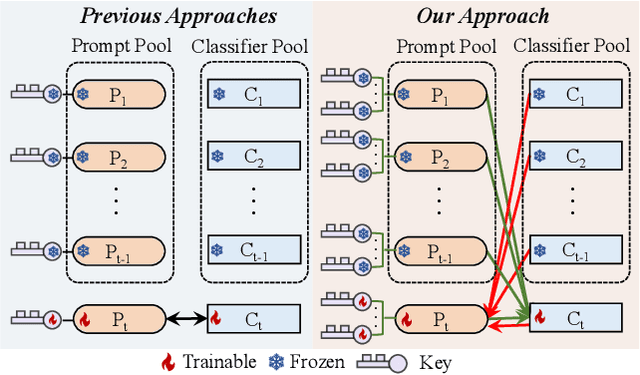

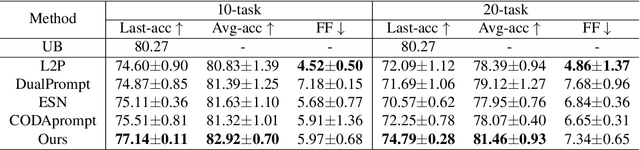

Abstract:Continual learning empowers models to adapt autonomously to the ever-changing environment or data streams without forgetting old knowledge. Prompt-based approaches are built on frozen pre-trained models to learn the task-specific prompts and classifiers efficiently. Existing prompt-based methods are inconsistent between training and testing, limiting their effectiveness. Two types of inconsistency are revealed. Test predictions are made from all classifiers while training only focuses on the current task classifier without holistic alignment, leading to Classifier inconsistency. Prompt inconsistency indicates that the prompt selected during testing may not correspond to the one associated with this task during training. In this paper, we propose a novel prompt-based method, Consistent Prompting (CPrompt), for more aligned training and testing. Specifically, all existing classifiers are exposed to prompt training, resulting in classifier consistency learning. In addition, prompt consistency learning is proposed to enhance prediction robustness and boost prompt selection accuracy. Our Consistent Prompting surpasses its prompt-based counterparts and achieves state-of-the-art performance on multiple continual learning benchmarks. Detailed analysis shows that improvements come from more consistent training and testing.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge