Zhangde Song

Generative Design of Functional Metal Complexes Utilizing the Internal Knowledge of Large Language Models

Oct 21, 2024

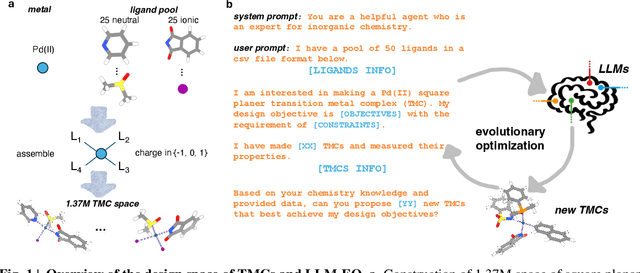

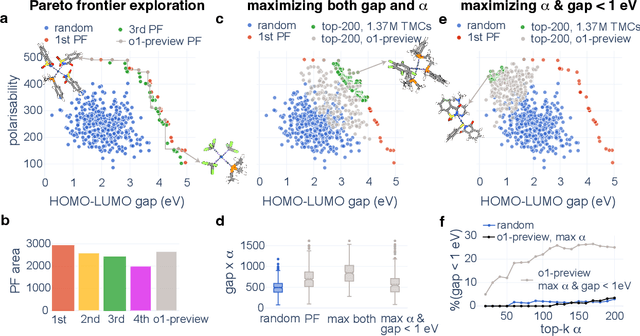

Abstract: Designing functional transition metal complexes (TMCs) is challenging because the search space of metals and ligands is vast, so efficient optimization strategies are required. Traditional genetic algorithms (GAs) are commonly used to explore this space, employing random mutations and crossovers driven by explicit mathematical objectives. Transferring knowledge between different GA tasks, however, is difficult. We integrate large language models (LLMs) into an evolutionary optimization framework (LLM-EO) and apply it to both single- and multi-objective optimization of TMCs. We find that LLM-EO surpasses traditional GAs by leveraging the chemical knowledge that LLMs gain during their extensive pretraining. Remarkably, without supervised fine-tuning, LLMs make use of the full historical data from the optimization process, outperforming strategies that focus only on top-performing TMCs. LLM-EO identifies eight of the top-20 TMCs with the largest HOMO-LUMO gaps while proposing only 200 candidates from a space of 1.37 million TMCs. Through prompt engineering in natural language, LLM-EO adds flexibility to multi-objective optimization and removes the need for intricate mathematical formulations of the objective. As generative models, LLMs can also suggest new ligands and TMCs with unique properties by merging internal knowledge with external chemistry data, thus combining the benefits of efficient optimization and molecular generation. With the increasing potential of LLMs as pretrained foundation models and of new post-training inference strategies, we foresee broad applications of LLM-based evolutionary optimization in chemistry and materials design.
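The optimization loop described in the abstract can be illustrated with a minimal sketch. This is not the authors' implementation: the ligand pool `LIGANDS`, the site count `N_SITES`, the scoring function `toy_gap`, the prompt wording in `build_prompt`, and the `stub_proposer` (which stands in for a real LLM call and simply samples at random) are all hypothetical names and choices introduced for illustration. The one feature taken from the abstract is that the full evaluation history, not only the top performers, is passed to the proposer in each round.

```python
import random
from typing import Callable, Dict, List, Tuple

Candidate = Tuple[str, ...]        # hypothetical encoding: one ligand label per site
History = Dict[Candidate, float]   # every evaluated candidate with its score

LIGANDS = [f"L{i}" for i in range(10)]  # toy ligand pool, not taken from the paper
N_SITES = 4                             # toy number of coordination sites


def toy_gap(candidate: Candidate) -> float:
    """Stand-in for an expensive property calculation such as a HOMO-LUMO gap."""
    return float(sum(int(label[1:]) for label in candidate))


def build_prompt(history: History) -> str:
    """Serialize the full history (not only the best entries) as plain text."""
    ranked = sorted(history.items(), key=lambda item: item[1])
    lines = [f"{','.join(cand)} -> {score:.2f}" for cand, score in ranked]
    header = "Evaluated complexes (ligands -> score):"
    footer = "Propose new complexes with a larger score."
    return header + "\n" + "\n".join(lines) + "\n" + footer


def stub_proposer(prompt: str, n: int, rng: random.Random) -> List[Candidate]:
    """Placeholder for an LLM call: ignores the prompt and samples at random."""
    return [tuple(rng.choice(LIGANDS) for _ in range(N_SITES)) for _ in range(n)]


Proposer = Callable[[str, int, random.Random], List[Candidate]]


def llm_eo(proposer: Proposer, n_init: int = 5, n_rounds: int = 4,
           n_per_round: int = 5, seed: int = 0) -> History:
    """Propose-evaluate loop in which each round sees all earlier evaluations."""
    rng = random.Random(seed)
    history: History = {}
    # Seed the history with a few random candidates.
    for cand in stub_proposer("", n_init, rng):
        history[cand] = toy_gap(cand)
    for _ in range(n_rounds):
        prompt = build_prompt(history)
        for cand in proposer(prompt, n_per_round, rng):
            # Evaluate only well-formed candidates that have not been seen before.
            valid = len(cand) == N_SITES and all(l in LIGANDS for l in cand)
            if valid and cand not in history:
                history[cand] = toy_gap(cand)
    return history


if __name__ == "__main__":
    result = llm_eo(stub_proposer)
    best = max(result, key=result.get)
    print(len(result), "candidates evaluated; best:", best, result[best])
```

In a real setting, `stub_proposer` would be replaced by a function that sends the prompt to an LLM and parses its reply into candidates, and `toy_gap` by the actual property calculation. A multi-objective variant would, under the same assumptions, change only the natural-language instruction in `build_prompt`.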
