Zeyu Luan

FedOC: Optimizing Global Prototypes with Orthogonality Constraints for Enhancing Embeddings Separation in Heterogeneous Federated Learning

Feb 22, 2025

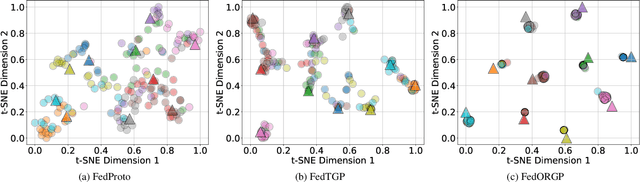

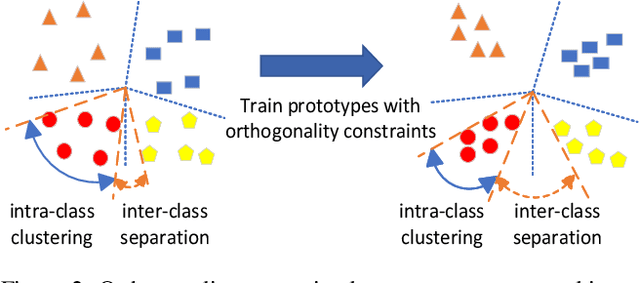

Abstract: Federated Learning (FL) has emerged as an essential framework for distributed machine learning, especially for its potential for privacy-preserving data processing. However, existing FL frameworks struggle to address statistical and model heterogeneity, which severely degrades model performance. While Heterogeneous Federated Learning (HtFL) introduces prototype-based strategies to address these challenges, current approaches fall short of achieving optimal separation of prototypes. This paper presents FedOC, a novel HtFL algorithm designed to improve global prototype separation through orthogonality constraints, which not only increase intra-class prototype similarity but also significantly expand the inter-class angular separation. Guided by the global prototypes, each client keeps its embeddings aligned with the corresponding prototype in the feature space, promoting directional independence that integrates seamlessly with the cross-entropy (CE) loss. We provide a theoretical proof of FedOC's convergence under non-convex conditions. Extensive experiments demonstrate that FedOC outperforms seven state-of-the-art baselines, achieving up to a 10.12% accuracy improvement in both statistical and model heterogeneity settings.
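To make the two ideas in the abstract concrete, here is a minimal sketch in plain Python. It is an illustration under stated assumptions, not the paper's actual objective: the function names (`orthogonality_penalty`, `alignment_loss`), the squared-cosine form of the penalty, and the `1 - cosine` alignment term are all hypothetical choices made for this example. The first function is small when class prototypes point in mutually orthogonal directions; the second is small when each embedding points in the same direction as the prototype of its class.

```python
import math


def unit(v, eps=1e-12):
    """Scale a vector to unit L2 norm (eps guards against division by zero)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / max(norm, eps) for x in v]


def cosine(u, v):
    """Cosine similarity between two vectors."""
    return sum(a * b for a, b in zip(unit(u), unit(v)))


def orthogonality_penalty(prototypes):
    """Mean squared cosine over all distinct class pairs (needs >= 2 classes).

    Assumed form for illustration only: it is 0 when the prototypes are
    mutually orthogonal and 1 when they all share one direction.
    """
    k = len(prototypes)
    pairs = [(i, j) for i in range(k) for j in range(i + 1, k)]
    total = sum(cosine(prototypes[i], prototypes[j]) ** 2 for i, j in pairs)
    return total / len(pairs)


def alignment_loss(embeddings, labels, prototypes):
    """Mean (1 - cosine) between each embedding and its class prototype.

    Assumed form for illustration only: it is 0 when every embedding is
    parallel to the prototype of its own class.
    """
    losses = [1.0 - cosine(e, prototypes[y]) for e, y in zip(embeddings, labels)]
    return sum(losses) / len(losses)


# Hypothetical toy data: three classes in a three-dimensional feature space.
global_prototypes = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
client_embeddings = [[0.9, 0.1, 0.0], [0.0, 2.0, 0.1]]
client_labels = [0, 1]

print(orthogonality_penalty(global_prototypes))
print(alignment_loss(client_embeddings, client_labels, global_prototypes))
```

In a training loop, a term like `alignment_loss` would presumably be added to the client's cross-entropy loss with some weighting, while a term like `orthogonality_penalty` would act on the server-side prototypes; how FedOC actually combines and weights them is not stated in the abstract.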
