Yvette Machowski

"There Is Not Enough Information": On the Effects of Explanations on Perceptions of Informational Fairness and Trustworthiness in Automated Decision-Making

May 11, 2022

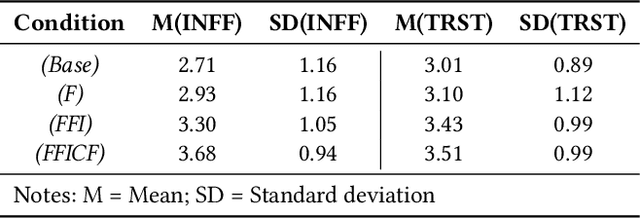

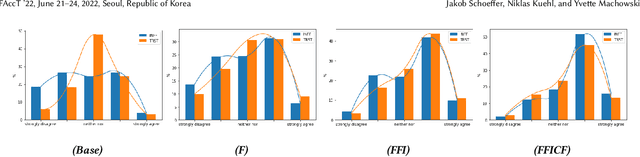

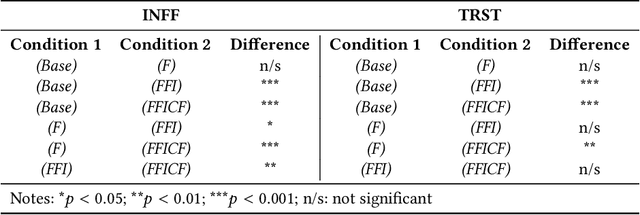

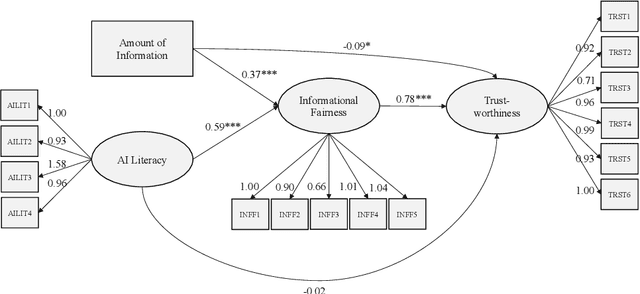

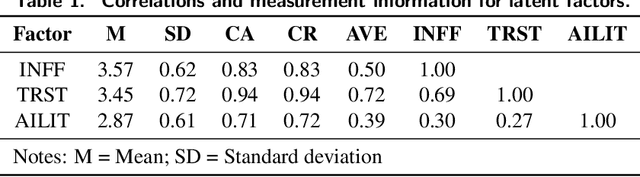

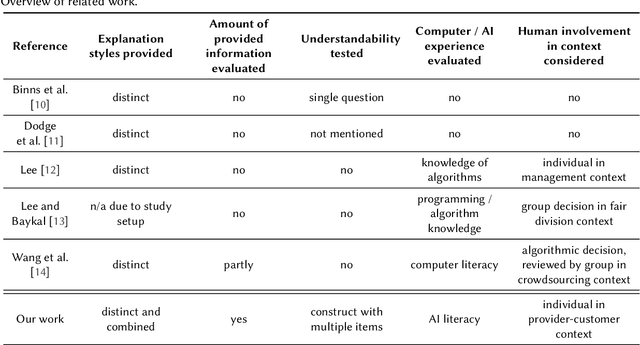

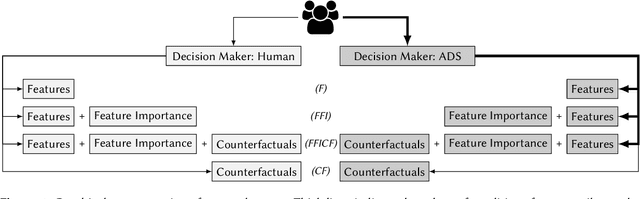

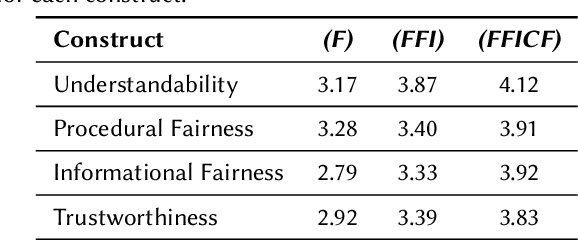

Abstract: Automated decision systems (ADS) are increasingly used for consequential decision-making. These systems often rely on sophisticated yet opaque machine learning models, which do not allow for understanding how a given decision was arrived at. In this work, we conduct a human subject study to assess people's perceptions of informational fairness (i.e., whether people think they are given adequate information on and explanation of the process and its outcomes) and trustworthiness of an underlying ADS when provided with varying types of information about the system. More specifically, we instantiate an ADS in the area of automated loan approval and generate different explanations that are commonly used in the literature. We randomize the amount of information that study participants get to see by providing certain groups of people with the same explanations as others plus additional explanations. From our quantitative analyses, we observe that different amounts of information as well as people's (self-assessed) AI literacy significantly influence the perceived informational fairness, which, in turn, positively relates to perceived trustworthiness of the ADS. A comprehensive analysis of qualitative feedback sheds light on people's desiderata for explanations, among which are (i) consistency (both with people's expectations and across different explanations), (ii) disclosure of monotonic relationships between features and outcome, and (iii) actionability of recommendations.

Perceptions of Fairness and Trustworthiness Based on Explanations in Human vs. Automated Decision-Making

Sep 13, 2021

Abstract: Automated decision systems (ADS) have become ubiquitous in many high-stakes domains. Those systems typically involve sophisticated yet opaque artificial intelligence (AI) techniques that seldom allow for full comprehension of their inner workings, particularly for affected individuals. As a result, ADS are prone to deficient oversight and calibration, which can lead to undesirable (e.g., unfair) outcomes. In this work, we conduct an online study with 200 participants to examine people's perceptions of fairness and trustworthiness towards ADS in comparison to a scenario where a human instead of an ADS makes a high-stakes decision -- and we provide thorough, identical explanations regarding decisions in both cases. Surprisingly, we find that people perceive ADS as fairer than human decision-makers. Our analyses also suggest that people's AI literacy affects their perceptions, indicating that people with higher AI literacy favor ADS more strongly over human decision-makers, whereas people with low AI literacy exhibit no significant differences in their perceptions.

A Study on Fairness and Trust Perceptions in Automated Decision Making

Mar 08, 2021

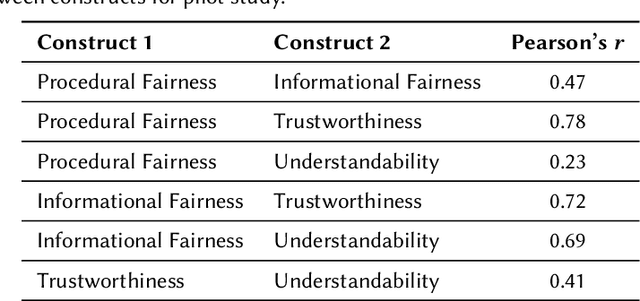

Abstract: Automated decision systems are increasingly used for consequential decision making -- for a variety of reasons. These systems often rely on sophisticated yet opaque models, which do not (or hardly) allow for understanding how or why a given decision was arrived at. This is not only problematic from a legal perspective, but non-transparent systems are also prone to yield undesirable (e.g., unfair) outcomes because their sanity is difficult to assess and calibrate in the first place. In this work, we conduct a study to evaluate different approaches to explaining such systems with respect to their effect on people's perceptions of fairness and trustworthiness towards the underlying mechanisms. A pilot study revealed surprising qualitative insights as well as preliminary significant effects, which will have to be verified, extended, and thoroughly discussed in the larger main study.
