"There Is Not Enough Information": On the Effects of Explanations on Perceptions of Informational Fairness and Trustworthiness in Automated Decision-Making


May 11, 2022

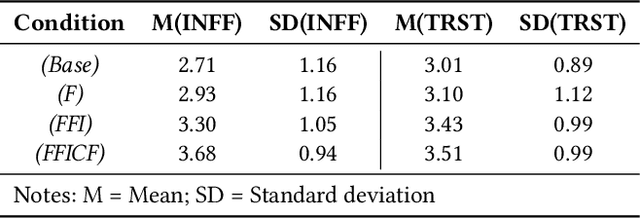

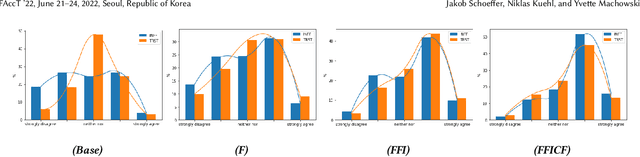

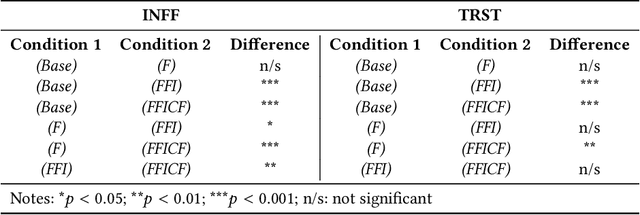

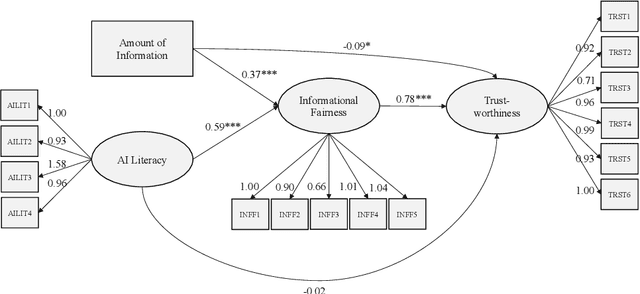

Automated decision systems (ADS) are increasingly used for consequential decision-making. These systems often rely on sophisticated yet opaque machine learning models, which do not allow for understanding how a given decision was arrived at. In this work, we conduct a human subject study to assess people's perceptions of informational fairness (i.e., whether people think they are given adequate information on and explanation of the process and its outcomes) and trustworthiness of an underlying ADS when provided with varying types of information about the system. More specifically, we instantiate an ADS in the area of automated loan approval and generate different explanations that are commonly used in the literature. We randomize the amount of information that study participants get to see by providing certain groups of people with the same explanations as others plus additional explanations. From our quantitative analyses, we observe that different amounts of information as well as people's (self-assessed) AI literacy significantly influence the perceived informational fairness, which, in turn, positively relates to perceived trustworthiness of the ADS. A comprehensive analysis of qualitative feedback sheds light on people's desiderata for explanations, among which are (i) consistency (both with people's expectations and across different explanations), (ii) disclosure of monotonic relationships between features and outcome, and (iii) actionability of recommendations.
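
The randomization described above, in which some groups see the same explanations as others plus additional ones, can be pictured as a set of nested conditions. The following is a minimal sketch under stated assumptions, not the study's actual procedure: the abstract does not name the explanation types, the number of conditions, or the group sizes, so the labels `explanation_a` to `explanation_c`, the helper names, and the balanced assignment are all hypothetical.

```python
import random

# Hypothetical placeholder labels: the abstract does not name the explanation types.
EXPLANATIONS = ["explanation_a", "explanation_b", "explanation_c"]


def build_nested_conditions(explanations):
    """Return cumulative conditions: condition k shows the first k explanations,
    so every condition contains all of the previous one plus one more."""
    return [explanations[:k] for k in range(1, len(explanations) + 1)]


def assign_participants(participant_ids, conditions, seed=0):
    """Shuffle participants, then deal them out round-robin so that
    group sizes differ by at most one (an assumption, not from the paper)."""
    rng = random.Random(seed)
    shuffled = list(participant_ids)
    rng.shuffle(shuffled)
    return {pid: conditions[i % len(conditions)] for i, pid in enumerate(shuffled)}


conditions = build_nested_conditions(EXPLANATIONS)
assignment = assign_participants(range(12), conditions)

for pid in sorted(assignment):
    print(pid, assignment[pid])
```

Because each condition is a superset of the one before it, any difference in perceived informational fairness between adjacent groups can be attributed to the single added explanation, which is one plausible motivation for a cumulative design of this kind.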
