Yuval Cassuto

Robust Regression with Ensembles Communicating over Noisy Channels

Aug 20, 2024

Abstract: As machine-learning models grow in size, their implementation requirements cannot be met by a single computer system. This observation motivates distributed settings, in which intermediate computations are performed across a network of processing units, while the central node only aggregates their outputs. However, distributing inference tasks across low-precision or faulty edge devices, operating over a network of noisy communication channels, gives rise to serious reliability challenges. We study the problem of an ensemble of devices, implementing regression algorithms, that communicate through additive noisy channels in order to collaboratively perform a joint regression task. We define the problem formally, and develop methods for optimizing the aggregation coefficients for the parameters of the noise in the channels, which can potentially be correlated. Our results apply to the leading state-of-the-art ensemble regression methods: bagging and gradient boosting. We demonstrate the effectiveness of our algorithms on both synthetic and real-world datasets.
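To make the idea of noise-aware aggregation concrete, here is a minimal sketch and not the paper's algorithm. It assumes each base regressor's prediction reaches the central node with zero-mean additive noise of known covariance `noise_cov`, independent of the data, and it picks linear aggregation weights that minimize the expected squared error on a training set. The function names and the closed-form least-squares solution are illustrative assumptions.

```python
import numpy as np

def noise_aware_weights(preds, targets, noise_cov):
    """Linear aggregation weights minimizing expected squared error.

    preds:     (n_samples, K) noiseless predictions of the K base regressors
    targets:   (n_samples,) regression targets
    noise_cov: (K, K) covariance of the additive channel noise (assumed known,
               zero-mean, independent of the data; may have off-diagonal terms)
    """
    n = preds.shape[0]
    second_moment = preds.T @ preds / n   # empirical E[f f^T]
    cross_moment = preds.T @ targets / n  # empirical E[f t]
    # Setting the gradient of w^T (C + Sigma) w - 2 w^T b to zero gives
    # (C + Sigma) w = b.
    return np.linalg.solve(second_moment + noise_cov, cross_moment)

def expected_mse(weights, preds, targets, noise_cov):
    """Empirical error of the noiseless aggregate plus the noise contribution."""
    residual = preds @ weights - targets
    return float(np.mean(residual ** 2) + weights @ noise_cov @ weights)
```

Under these assumptions, comparing `expected_mse` for the returned weights against uniform weights `np.full(K, 1 / K)` (plain bagging-style averaging) shows how much error is attributable to ignoring the channel noise; regressors behind noisier channels receive smaller weights.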

Boosting Classifiers with Noisy Inference

Sep 10, 2019

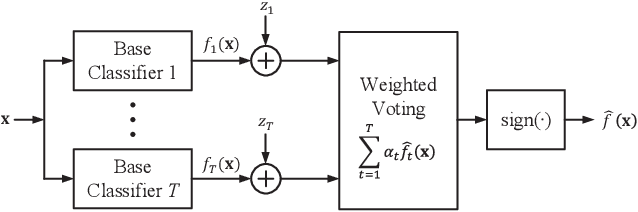

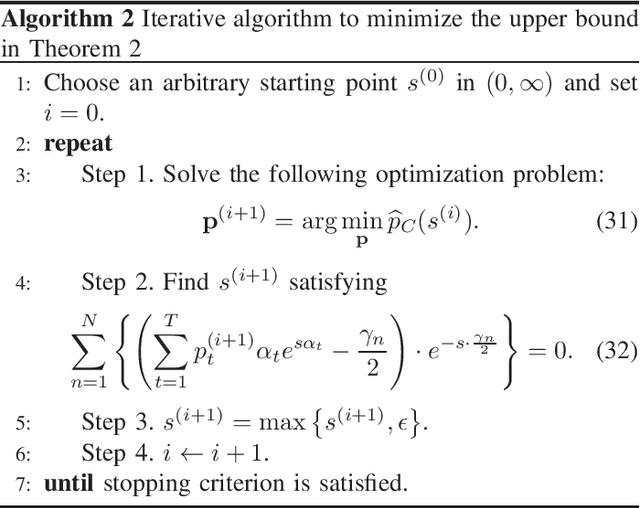

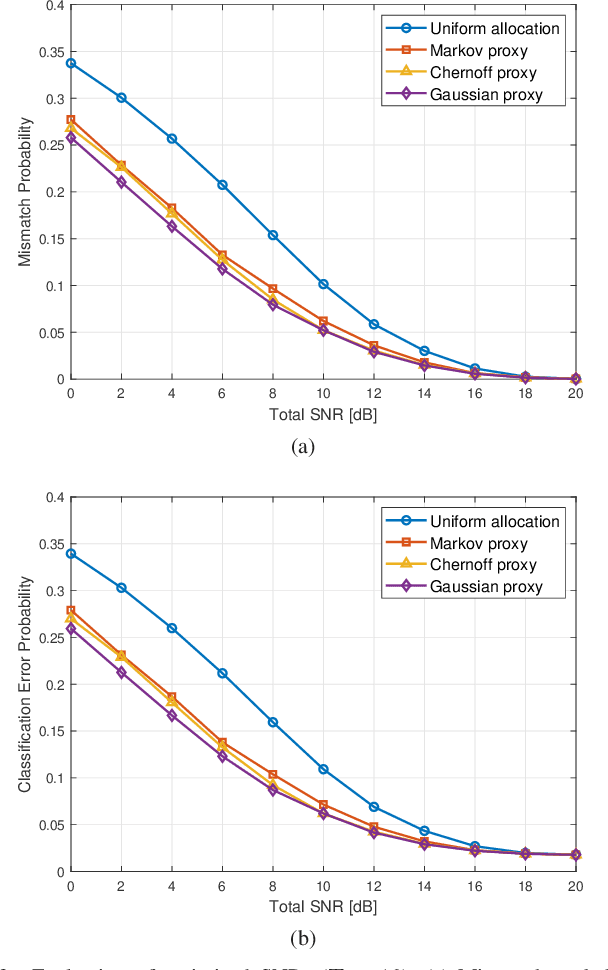

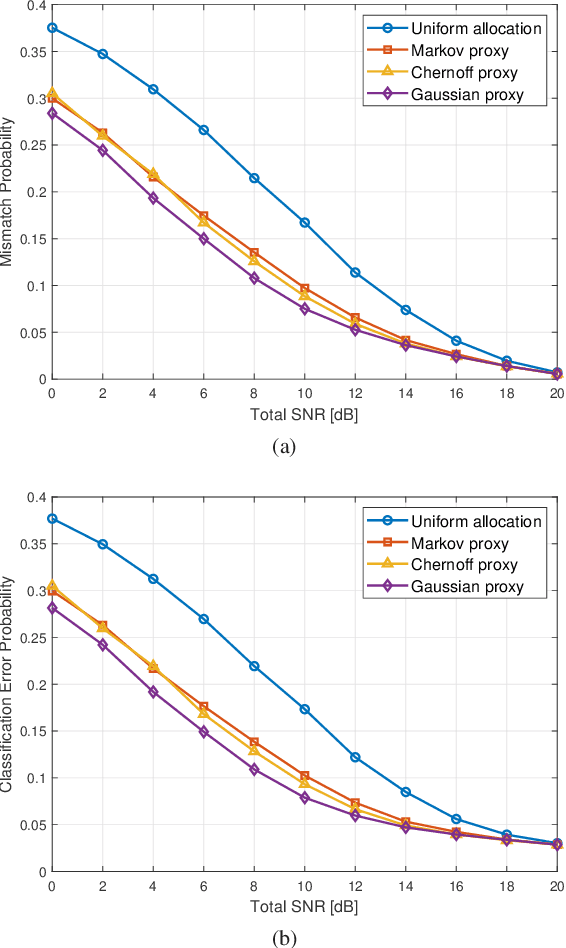

Abstract: We present a principled framework to address resource allocation for realizing boosting algorithms on substrates with communication or computation noise. Boosting classifiers (e.g., AdaBoost) make a final decision via a weighted vote from the outputs of many base classifiers (weak classifiers). Suppose that the base classifiers' outputs are noisy or communicated over noisy channels; these noisy outputs will degrade the final classification accuracy. We show that this degradation can be effectively reduced by allocating more system resources to the more important base classifiers. We formulate resource optimization problems in terms of importance metrics for boosting. Moreover, we show that the optimized noisy boosting classifiers can be more robust than bagging to noise during inference (the test stage). We provide numerical evidence to demonstrate the benefits of our approach.
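The intuition that important base classifiers deserve more resources can be illustrated with a toy model, which is an assumption for illustration and not the paper's formulation. Suppose base classifier k has vote weight `alphas[k]`, its output is corrupted by additive noise of variance `1 / resources[k]`, and the total resource budget is fixed. The noise variance of the weighted vote is then the sum of `alphas[k]**2 / resources[k]`, and a Lagrange-multiplier argument shows it is minimized by allocating resources in proportion to `|alphas[k]|`.

```python
import numpy as np

def allocate_resources(alphas, budget):
    """Split `budget` in proportion to |alpha_k| (toy model: variance = 1 / resource)."""
    magnitudes = np.abs(np.asarray(alphas, dtype=float))
    return budget * magnitudes / magnitudes.sum()

def vote_noise_variance(alphas, resources):
    """Variance of the noise in the weighted vote, sum_k alpha_k^2 / r_k."""
    a = np.asarray(alphas, dtype=float)
    r = np.asarray(resources, dtype=float)
    return float(np.sum(a ** 2 / r))
```

For example, with the hypothetical weights `[2.0, 1.0, 0.5, 0.5]` and a budget of 8, the proportional allocation is `[4, 2, 1, 1]` and gives a vote-noise variance of 2.0, compared with 2.75 when the budget is split uniformly.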
