Yusuf Nasir

Multi-Asset Closed-Loop Reservoir Management Using Deep Reinforcement Learning

Jul 21, 2022

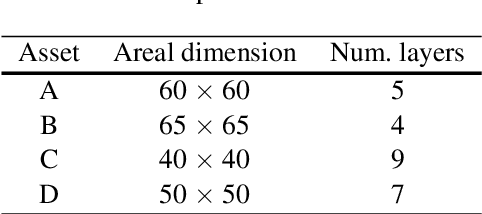

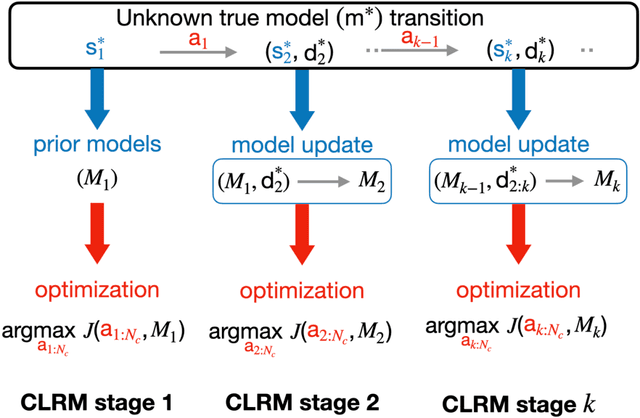

Abstract: Closed-loop reservoir management (CLRM), in which history matching and production optimization are performed multiple times over the life of an asset, can provide significant improvement in the specified objective. These procedures are computationally expensive due to the large number of flow simulations required for data assimilation and optimization. Existing CLRM procedures are applied asset by asset, without utilizing information that could be useful over a range of assets. Here, we develop a CLRM framework for multiple assets with varying numbers of wells. We use deep reinforcement learning to train a single global control policy that is applicable to all assets considered. The new framework is an extension of a recently introduced control policy methodology for individual assets. Embedding layers are incorporated into the representation to handle the different numbers of decision variables that arise for the different assets. Because the global control policy learns a unified representation of useful features from multiple assets, it is less expensive to construct than asset-by-asset training (we observe about 3x speedup in our examples). The production optimization problem includes a relative-change constraint on the well settings, which renders the results suitable for practical use. We apply the multi-asset CLRM framework to 2D and 3D water-flooding examples. In both cases, four assets with different well counts, well configurations, and geostatistical descriptions are considered. Numerical experiments demonstrate that the global control policy provides objective function values, for both the 2D and 3D cases, that are nearly identical to those from control policies trained individually for each asset. This promising finding suggests that multi-asset CLRM may indeed represent a viable practical strategy.
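The abstract does not state the exact form of the relative-change constraint on the well settings. As a minimal sketch only, assume each new setting may differ from the previous one by at most a fixed fraction of the previous value. The function name `limit_relative_change` and the parameter `max_rel_change` are hypothetical and are not taken from the paper.

```python
def limit_relative_change(previous, proposed, max_rel_change=0.1):
    """Clamp proposed well settings to a relative-change band.

    Assumption (not from the paper): each new setting must lie within
    +/- max_rel_change * |previous setting| of the previous setting.
    """
    limited = []
    for prev, new in zip(previous, proposed):
        max_step = max_rel_change * abs(prev)
        lower, upper = prev - max_step, prev + max_step
        # Keep the proposal if it is inside the band, else move it to the edge.
        limited.append(min(max(new, lower), upper))
    return limited


if __name__ == "__main__":
    # Hypothetical bottom-hole pressures for two wells at consecutive control steps.
    print(limit_relative_change([100.0, 200.0], [150.0, 195.0], max_rel_change=0.25))
```

A projection of this kind could be applied to the policy output at every control step, so that the settings never jump abruptly between steps. How the constraint is actually enforced in the paper is not described in the abstract.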

Deep reinforcement learning for optimal well control in subsurface systems with uncertain geology

Mar 24, 2022

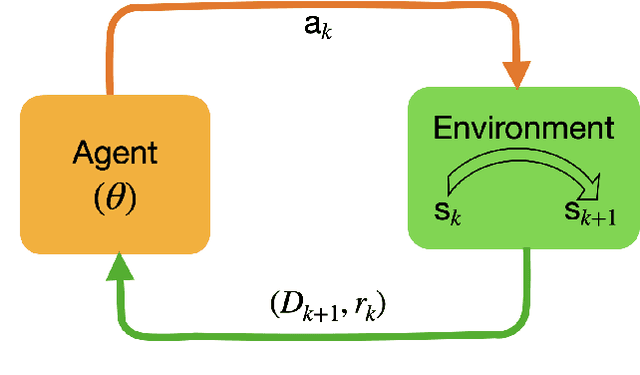

Abstract: A general control policy framework based on deep reinforcement learning (DRL) is introduced for closed-loop decision making in subsurface flow settings. Traditional closed-loop modeling workflows in this context involve the repeated application of data assimilation/history matching and robust optimization steps. Data assimilation can be particularly challenging in cases where both the geological style (scenario) and individual model realizations are uncertain. The closed-loop reservoir management (CLRM) problem is formulated here as a partially observable Markov decision process, with the associated optimization problem solved using a proximal policy optimization algorithm. This provides a control policy that instantaneously maps flow data observed at wells (as are available in practice) to optimal well pressure settings. The policy is represented by a temporal convolution and gated transformer blocks. Training is performed in a preprocessing step with an ensemble of prior geological models, which can be drawn from multiple geological scenarios. Example cases involving the production of oil via water injection, with both 2D and 3D geological models, are presented. The DRL-based methodology is shown to result in an NPV increase of 15% (for the 2D cases) and 33% (for the 3D cases) relative to robust optimization over prior models, and in an average improvement of 4% in NPV relative to traditional CLRM. The solutions from the control policy are found to be comparable to those from deterministic optimization, in which the geological model is assumed to be known, even when multiple geological scenarios are considered. The control policy approach results in a 76% decrease in computational cost relative to traditional CLRM with the algorithms and parameter settings considered in this work.
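For readers unfamiliar with proximal policy optimization, the sketch below evaluates the standard clipped surrogate objective on a small batch. It illustrates the generic PPO objective only, not the authors' implementation. The function name, the default `clip_eps=0.2`, and the sample numbers are assumptions chosen for illustration.

```python
def ppo_clipped_objective(ratios, advantages, clip_eps=0.2):
    """Mean clipped surrogate objective used by PPO (to be maximized).

    ratios:     new-policy / old-policy action probability ratios
    advantages: advantage estimates for the same actions
    """
    total = 0.0
    for ratio, adv in zip(ratios, advantages):
        # Restrict the ratio to [1 - clip_eps, 1 + clip_eps].
        clipped_ratio = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
        # Take the more pessimistic of the unclipped and clipped terms.
        total += min(ratio * adv, clipped_ratio * adv)
    return total / len(ratios)


if __name__ == "__main__":
    # Hypothetical batch of three (ratio, advantage) pairs.
    print(ppo_clipped_objective([1.5, 0.5, 1.0], [1.0, -1.0, 2.0]))
```

The clipping removes the incentive to move the new policy far from the policy that collected the data, which is what makes repeated gradient steps on the same batch of simulations reasonably safe.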

Deep Reinforcement Learning for Constrained Field Development Optimization in Subsurface Two-phase Flow

Mar 31, 2021

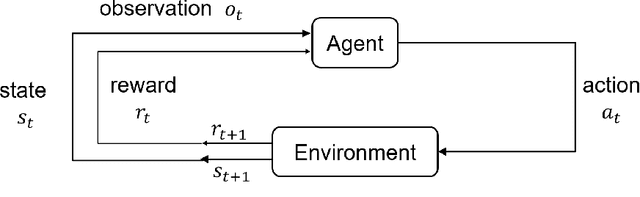

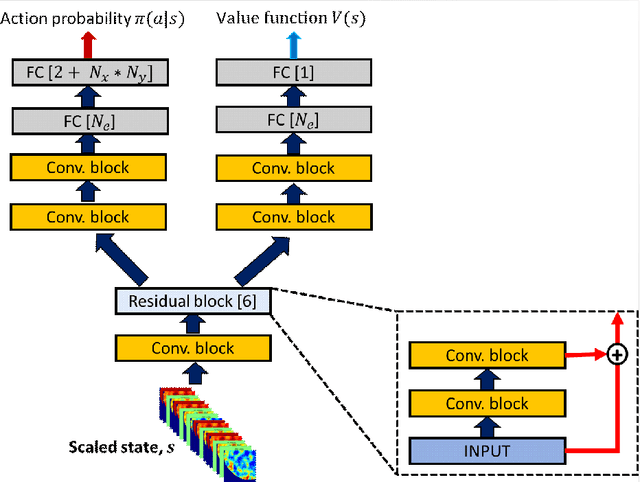

Abstract: We present a deep reinforcement learning-based artificial intelligence agent that could provide optimized development plans given a basic description of the reservoir and rock/fluid properties with minimal computational cost. This artificial intelligence agent, comprising a convolutional neural network, provides a mapping from a given state of the reservoir model, constraints, and economic condition to the optimal decision (drill/do not drill and well location) to be taken in the next stage of the defined sequential field development planning process. The state of the reservoir model is defined using parameters that appear in the governing equations of the two-phase flow. A feedback loop training process referred to as deep reinforcement learning is used to train an artificial intelligence agent with such a capability. The training entails millions of flow simulations with varying reservoir model descriptions (structural, rock and fluid properties), operational constraints, and economic conditions. The parameters that define the reservoir model, operational constraints, and economic conditions are randomly sampled from a defined range of applicability. Several algorithmic treatments are introduced to enhance the training of the artificial intelligence agent. After appropriate training, the artificial intelligence agent provides an optimized field development plan instantly for new scenarios within the defined range of applicability. This approach has advantages over traditional optimization algorithms (e.g., particle swarm optimization, genetic algorithm) that are generally used to find a solution for a specific field development scenario and are typically not generalizable to different scenarios.
