Yuru Su

A Comprehensive Study on the Robustness of Image Classification and Object Detection in Remote Sensing: Surveying and Benchmarking

Jun 21, 2023

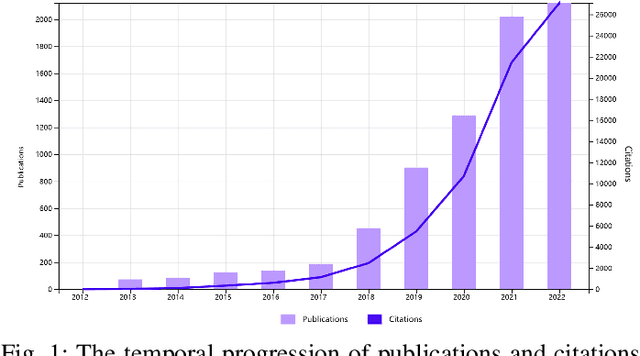

Abstract: Deep neural networks (DNNs) are widely used to interpret remote sensing (RS) imagery. However, previous work has shown that DNNs are vulnerable to various types of noise, particularly adversarial noise. Surprisingly, comprehensive studies on the robustness of RS tasks have been lacking, which prompted us to undertake a thorough survey and benchmark of the robustness of image classification and object detection in RS. To the best of our knowledge, this study is the first comprehensive examination of both natural robustness and adversarial robustness in RS tasks. Specifically, we have curated and made publicly available datasets that contain natural and adversarial noise. These datasets serve as resources for evaluating the robustness of DNN-based models. To assess model robustness comprehensively, we conducted experiments with numerous classifiers and detectors, covering a wide range of mainstream methods. Our evaluation yields findings that shed light on the relationship between adversarial noise crafting and model training, deepen the understanding of the susceptibility and limitations of various models, and provide guidance for developing more robust models.

CBA: Contextual Background Attack against Optical Aerial Detection in the Physical World

Mar 20, 2023

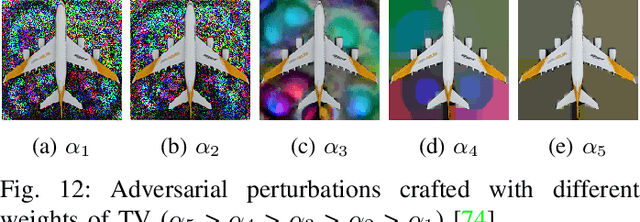

Abstract: Patch-based physical attacks have raised increasing concern. However, most existing methods focus on obscuring targets captured on the ground, and some of these methods are simply extended to deceive aerial detectors. They cover the targeted objects in the physical world with elaborate adversarial patches, which only slightly sway the aerial detectors' predictions and exhibit weak attack transferability. To address these issues, we propose Contextual Background Attack (CBA), a novel physical attack framework against aerial detection that achieves strong attack efficacy and transferability in the physical world without covering the objects of interest at all. Specifically, the targets of interest, i.e., the aircraft in aerial images, are used to mask the adversarial patches. The pixels outside the mask area are optimized so that the generated adversarial patches closely cover the contextual background area that is critical for detection, which gives the patches more robust and transferable attack potency in the real world. To further strengthen attack performance, the adversarial patches are forced to be outside targets during training, so that the detected objects of interest, both on and outside patches, contribute to the accumulation of attack efficacy. Consequently, the resulting patches have solid fooling efficacy against objects both on and outside the adversarial patches simultaneously. Extensive proportionally scaled experiments are performed in physical scenarios, demonstrating the superiority and potential of the proposed framework for physical attacks. We expect that the proposed physical attack method will serve as a benchmark for assessing the adversarial robustness of diverse aerial detectors and defense methods.

Contextual adversarial attack against aerial detection in the physical world

Feb 27, 2023

Abstract: Deep neural networks (DNNs) have been extensively utilized in aerial detection. However, DNNs' sensitivity and vulnerability to maliciously crafted adversarial examples have progressively garnered attention. Recently, physical attacks have become a hot topic because they are more practical in the real world, which poses great threats to some security-critical applications. In this paper, we make the first attempt to perform physical attacks in contextual form against aerial detection in the physical world. We propose an innovative contextual attack method against aerial detection in real scenarios, which achieves powerful attack performance and transfers well between various aerial object detectors without smearing or blocking the objects of interest to hide them. Based on the finding, obtained by observing the detectors' attention maps, that the targets' contextual information plays an important role in aerial detection, we propose to make full use of the contextual area of the targets of interest to craft contextual perturbations for uncovered attacks in real scenarios. Extensive proportionally scaled experiments are conducted to evaluate the effectiveness of the proposed contextual attack method, demonstrating its superiority in both attack efficacy and physical practicality.
