Yurim Jeon

EVT: Efficient View Transformation for Multi-Modal 3D Object Detection

Nov 19, 2024

Abstract: Multi-modal sensor fusion in bird's-eye-view (BEV) representation has become the leading approach in 3D object detection. However, existing methods often rely on depth estimators or transformer encoders for view transformation, incurring substantial computational overhead. Furthermore, the lack of precise geometric correspondence between 2D and 3D spaces leads to spatial and ray-directional misalignments, restricting the effectiveness of BEV representations. To address these challenges, we propose a novel 3D object detector via efficient view transformation (EVT), which leverages a well-structured BEV representation to enhance accuracy and efficiency. EVT focuses on two main areas. First, it employs Adaptive Sampling and Adaptive Projection (ASAP), using LiDAR guidance to generate 3D sampling points and adaptive kernels. The generated points and kernels are then used to facilitate the transformation of image features into BEV space and refine the BEV features. Second, EVT includes an improved transformer-based detection framework, which contains a group-wise query initialization method and an enhanced query update framework. It is designed to effectively utilize the obtained multi-modal BEV features within the transformer decoder. By leveraging the geometric properties of object queries, this framework significantly enhances detection performance, especially in a multi-layer transformer decoder structure. EVT achieves state-of-the-art performance on the nuScenes test set with real-time inference speed.
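To make the idea of a geometry-guided view transformation concrete, here is a minimal sketch of lifting image features into a BEV grid using 3D points. It is not the ASAP module described in the abstract: it swaps in a plain pinhole projection with nearest-pixel sampling and per-cell averaging. The function names (`project_points`, `sample_to_bev`), the square BEV grid, and the camera matrices are all assumptions made for illustration.

```python
import numpy as np

def project_points(points_xyz, K, T_cam_from_lidar):
    """Project N x 3 points into pixel coordinates with a pinhole model.

    K is a 3 x 3 intrinsic matrix, T_cam_from_lidar a 4 x 4 extrinsic matrix
    (both hypothetical inputs). Returns (uv, valid), where valid marks points
    in front of the camera.
    """
    n = points_xyz.shape[0]
    homo = np.hstack([points_xyz, np.ones((n, 1))])   # N x 4 homogeneous points
    cam = (T_cam_from_lidar @ homo.T).T[:, :3]        # N x 3 in the camera frame
    valid = cam[:, 2] > 1e-6                          # keep positive depth only
    uv = np.full((n, 2), -1.0)                        # -1 marks "no projection"
    pix = (K @ cam[valid].T).T
    uv[valid] = pix[:, :2] / pix[:, 2:3]
    return uv, valid

def sample_to_bev(feat, points_xyz, K, T_cam_from_lidar, bev_range, bev_size):
    """Scatter image features (H x W x C) into a bev_size x bev_size x C grid.

    Each 3D point picks the nearest image pixel and deposits that feature in
    the BEV cell given by its x/y coordinates; cells average their deposits.
    """
    H, W, C = feat.shape
    uv, valid = project_points(points_xyz, K, T_cam_from_lidar)
    u = np.round(uv[:, 0])
    v = np.round(uv[:, 1])
    inside = valid & (u >= 0) & (u < W) & (v >= 0) & (v < H)

    lo, hi = bev_range
    cell = (hi - lo) / bev_size
    ix = np.floor((points_xyz[:, 0] - lo) / cell).astype(int)
    iy = np.floor((points_xyz[:, 1] - lo) / cell).astype(int)
    inside &= (ix >= 0) & (ix < bev_size) & (iy >= 0) & (iy < bev_size)

    bev = np.zeros((bev_size, bev_size, C))
    count = np.zeros((bev_size, bev_size, 1))
    rows, cols = v[inside].astype(int), u[inside].astype(int)
    np.add.at(bev, (iy[inside], ix[inside]), feat[rows, cols])
    np.add.at(count, (iy[inside], ix[inside]), 1.0)
    return bev / np.maximum(count, 1.0)               # empty cells stay zero
```

Because the sampling locations come from measured 3D points rather than predicted depth, this kind of projection needs no depth estimator, which is the general motivation the abstract gives for LiDAR guidance.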

Follow the Footprints: Self-supervised Traversability Estimation for Off-road Vehicle Navigation based on Geometric and Visual Cues

Feb 23, 2024

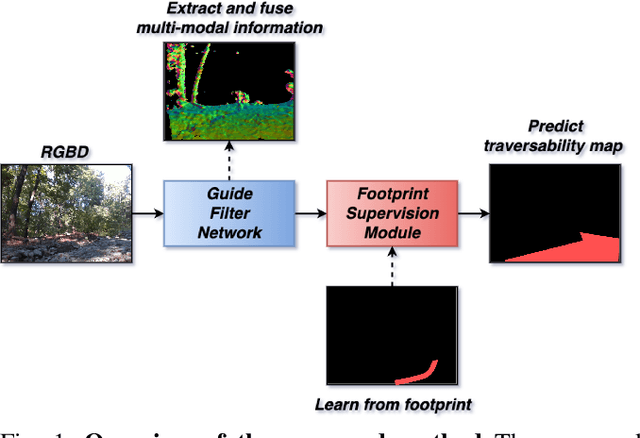

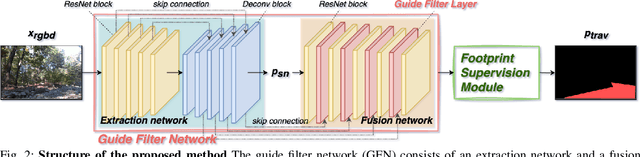

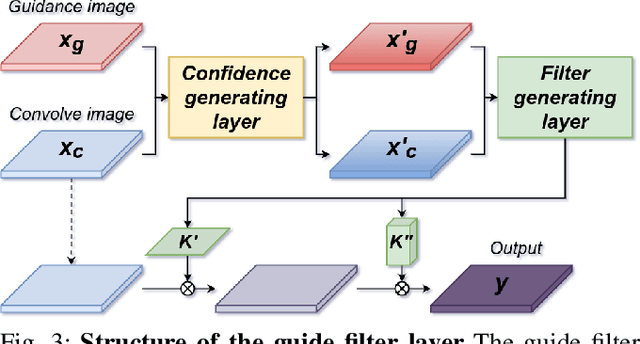

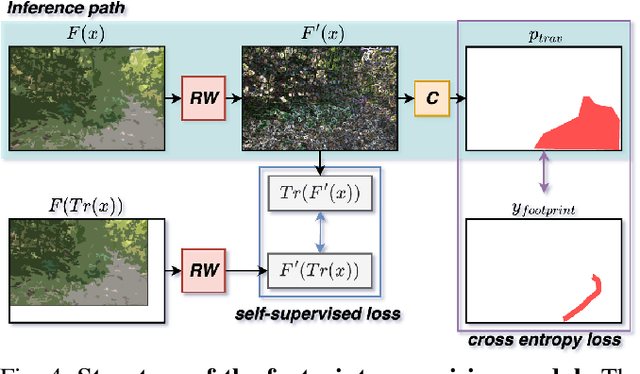

Abstract: In this study, we address off-road traversability estimation, the problem of predicting areas where a robot can navigate in off-road environments. An off-road environment is an unstructured environment comprising a combination of traversable and non-traversable spaces, which presents a challenge for estimating traversability. This study highlights three primary factors that affect a robot's traversability in an off-road environment: surface slope, semantic information, and robot platform. We present two strategies for estimating traversability, using a guide filter network (GFN) and a footprint supervision module (FSM). The first strategy involves building a novel GFN using a newly designed guide filter layer. The GFN interprets the surface and semantic information from the input data and integrates them to extract features optimized for traversability estimation. The second strategy involves developing an FSM, which is a self-supervision module that utilizes the path traversed by the robot in pre-driving, also known as a footprint. This enables the prediction of traversability that reflects the characteristics of the robot platform. Based on these two strategies, the proposed method overcomes the limitations of existing methods, which require laborious human supervision and lack scalability. Extensive experiments in diverse conditions, including automobiles and unmanned ground vehicles, herbfields, woodlands, and farmlands, demonstrate that the proposed method is compatible with various robot platforms and adaptable to a range of terrains. Code is available at https://github.com/yurimjeon1892/FtFoot.
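The footprint idea can be illustrated with a small sketch: cells the robot actually drove over become positive labels, and the loss is computed only on those cells, so no human annotation is needed. This is a generic positive-only supervision example, not the FSM from the paper or the code in the linked repository. The names `footprint_mask` and `positive_only_loss`, the square grid, and the circular robot footprint are assumptions made for illustration.

```python
import numpy as np

def footprint_mask(path_xy, grid_range, grid_size, robot_radius):
    """Mark grid cells whose centers lie within robot_radius of any path point.

    path_xy is a sequence of (x, y) positions recorded while driving
    (hypothetical input). Returns a grid_size x grid_size boolean mask
    indexed as mask[row (y), column (x)].
    """
    lo, hi = grid_range
    cell = (hi - lo) / grid_size
    centers = lo + (np.arange(grid_size) + 0.5) * cell
    gx, gy = np.meshgrid(centers, centers)            # cell-center coordinates
    mask = np.zeros((grid_size, grid_size), dtype=bool)
    for x, y in path_xy:
        mask |= (gx - x) ** 2 + (gy - y) ** 2 <= robot_radius ** 2
    return mask

def positive_only_loss(pred, mask, eps=1e-7):
    """Mean negative log-likelihood over footprint cells only.

    pred holds predicted traversability probabilities in [0, 1]. Cells
    outside the footprint are unlabeled and contribute nothing.
    """
    if not mask.any():
        return 0.0
    p = np.clip(pred[mask], eps, 1.0)
    return float(-np.log(p).mean())
```

Since the labels come from one particular robot's driving history, a model trained this way reflects what that platform can traverse, which matches the abstract's point about platform-specific traversability. A practical system would also need some handling of the unlabeled cells, which this sketch leaves out.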
