Yun Ching Liu

Rakuten Data Release: A Large-Scale and Long-Term Reviews Corpus for Hotel Domain

Dec 18, 2025

Abstract: This paper presents a large-scale corpus of Rakuten Travel Reviews. Our collection contains 7.3 million customer reviews spanning 16 years, from 2009 to 2024. Each record in the dataset contains the review text, the accommodation's response to it, an anonymized reviewer ID, review date, accommodation ID, plan ID, plan title, room type, room name, purpose, accompanying group, and user ratings for different aspect categories, as well as an overall score. We present statistical information about our corpus and provide insights into factors driving data drift between 2019 and 2024 using statistical approaches.

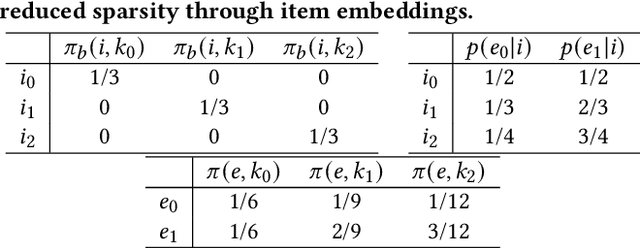

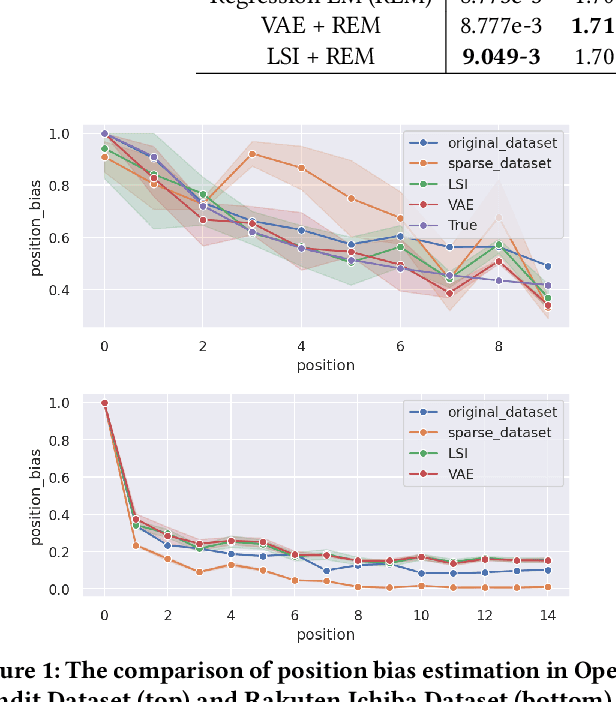

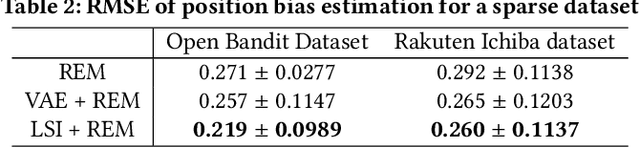

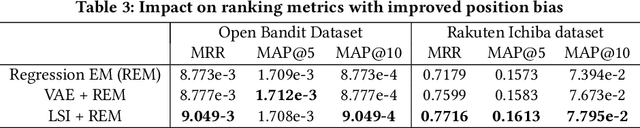

Improving position bias estimation against sparse and skewed dataset with item embedding

May 10, 2023

Abstract: Estimating position bias is a well-known challenge in learning to rank (L2R). Click data in e-commerce applications, such as advertisement targeting and search engines, provides implicit but abundant feedback for improving personalized rankings. However, click data inherently includes various biases, such as position bias. Click modeling aims to remove these biases from click data and extract reliable signals. Result randomization and the regression expectation-maximization (EM) algorithm have been proposed to address position bias. Both methods require observations of varied (item, position) pairs. However, in real advertising settings, marketers frequently display advertisements in a fixed, pre-determined order, and estimation suffers as a result. We formulate this sparsity of (item, position) pairs in position bias estimation as a novel problem, and we propose a variant of the regression EM algorithm that uses item embeddings to alleviate the sparsity. With a synthetic dataset, we first evaluate how position bias estimation suffers from the sparsity and skewness of the logging dataset. Next, with a real-world dataset, we empirically show that item embedding with Latent Semantic Indexing (LSI) and a variational autoencoder (VAE) improves the estimation of position bias. Our results show that the regression EM algorithm with VAE yields a relative RMSE improvement of 10.3%, and EM with LSI yields a relative RMSE improvement of 33.4%.
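For readers unfamiliar with the setting, the sketch below illustrates the kind of estimation the abstract refers to. It is not the paper's method: it is a plain tabular EM for the standard position-based click model, in which the click probability is assumed to factor as `theta[position] * gamma[item]`. The function names `pbm_em` and `simulate_clicks`, the initial values, and the iteration count are all assumptions made for illustration. Regression EM and the item-embedding variant described above replace the per-item table `gamma` with a learned model.

```python
import numpy as np


def simulate_clicks(theta, gamma, n, rng):
    """Draw n synthetic (item, position, click) logs from a position-based model.

    Hypothetical helper: items and positions are sampled uniformly at random,
    which is the dense, well-mixed case (the opposite of the sparse logs the
    abstract is concerned with).
    """
    items = rng.integers(0, len(gamma), n)
    positions = rng.integers(0, len(theta), n)
    clicks = (rng.random(n) < theta[positions] * gamma[items]).astype(int)
    return items, positions, clicks


def pbm_em(items, positions, clicks, n_items, n_positions, n_iter=50):
    """Tabular EM for P(click) = theta[position] * gamma[item].

    theta[k] is the examination probability at position k (the position bias);
    gamma[i] is the relevance of item i. Both start at 0.5 (an arbitrary choice).
    """
    theta = np.full(n_positions, 0.5)
    gamma = np.full(n_items, 0.5)
    # Observation counts per position and per item; max(..., 1) avoids 0/0.
    pos_count = np.maximum(np.bincount(positions, minlength=n_positions), 1)
    item_count = np.maximum(np.bincount(items, minlength=n_items), 1)

    for _ in range(n_iter):
        t, g = theta[positions], gamma[items]
        denom = np.clip(1.0 - t * g, 1e-12, None)
        # E-step: a click implies examined and relevant; otherwise use the
        # posterior given that no click was observed.
        p_exam = np.where(clicks == 1, 1.0, t * (1.0 - g) / denom)
        p_rel = np.where(clicks == 1, 1.0, (1.0 - t) * g / denom)
        # M-step: average the posteriors per position and per item.
        theta = np.bincount(positions, weights=p_exam, minlength=n_positions) / pos_count
        gamma = np.bincount(items, weights=p_rel, minlength=n_items) / item_count
    return theta, gamma


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    true_theta = np.array([1.0, 0.6, 0.3])
    true_gamma = np.linspace(0.1, 0.9, 20)
    items, positions, clicks = simulate_clicks(true_theta, true_gamma, 20000, rng)
    theta_hat, _ = pbm_em(items, positions, clicks, n_items=20, n_positions=3)
    print(theta_hat)
```

In this tabular form, each `gamma[i]` is informed only by the positions at which item `i` was actually shown. If every item is logged at a single fixed position, the product `theta * gamma` can be fit but the two factors cannot be separated, which is one way to read the sparsity problem that the abstract proposes to alleviate with item embeddings.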
