Yuhan Song

StableToken: A Noise-Robust Semantic Speech Tokenizer for Resilient SpeechLLMs

Sep 26, 2025

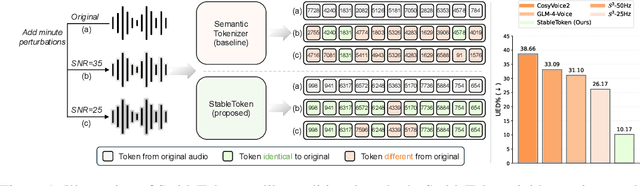

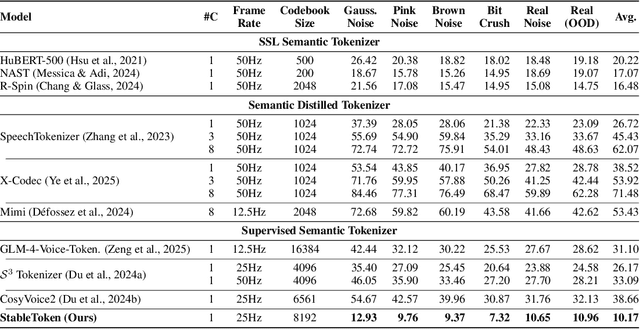

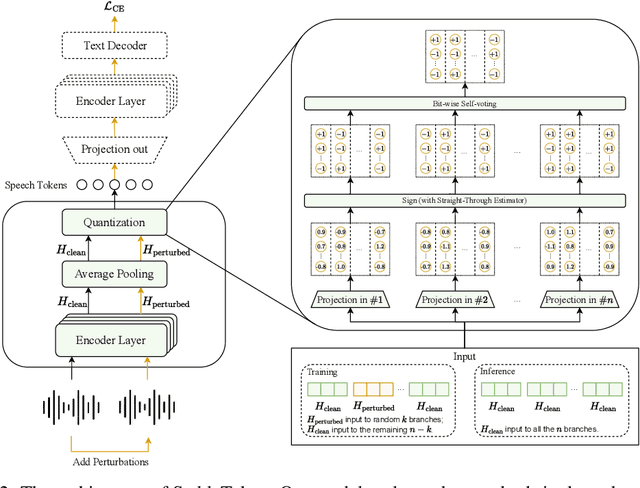

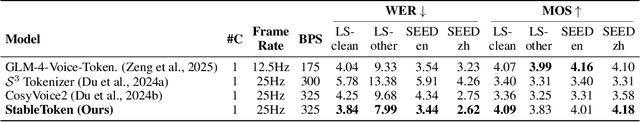

Abstract: Prevalent semantic speech tokenizers, designed to capture linguistic content, are surprisingly fragile. We find they are not robust to meaning-irrelevant acoustic perturbations; even at high Signal-to-Noise Ratios (SNRs) where speech is perfectly intelligible, their output token sequences can change drastically, increasing the learning burden for downstream LLMs. This instability stems from two flaws: a brittle single-path quantization architecture and a distant training signal indifferent to intermediate token stability. To address this, we introduce StableToken, a tokenizer that achieves stability through a consensus-driven mechanism. Its multi-branch architecture processes audio in parallel, and these representations are merged via a powerful bit-wise voting mechanism to form a single, stable token sequence. StableToken sets a new state-of-the-art in token stability, drastically reducing Unit Edit Distance (UED) under diverse noise conditions. This foundational stability translates directly to downstream benefits, significantly improving the robustness of SpeechLLMs on a variety of tasks.
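The bit-wise voting idea mentioned in the abstract can be illustrated with a small sketch. This is not the StableToken implementation: the abstract does not specify the branch count, the bit width, or how bits map to token ids, so the three branches, the 4-bit codes, the odd-branch requirement, and the helper names `bitwise_majority_vote` and `bits_to_token` are all assumptions made for illustration.

```python
from typing import List


def bitwise_majority_vote(branch_bits: List[List[int]]) -> List[int]:
    """Merge per-branch bit vectors by majority vote at each bit position.

    Hypothetical helper: each inner list is one branch's binary code for
    the same audio frame. An odd branch count is required here so that
    ties cannot occur (an assumption of this sketch).
    """
    if not branch_bits:
        raise ValueError("need at least one branch")
    n_branches = len(branch_bits)
    if n_branches % 2 == 0:
        raise ValueError("use an odd number of branches to avoid ties")
    width = len(branch_bits[0])
    if any(len(bits) != width for bits in branch_bits):
        raise ValueError("all branches must emit the same number of bits")
    # A bit is 1 in the merged code only if more than half the branches say 1.
    return [
        1 if 2 * sum(bits[i] for bits in branch_bits) > n_branches else 0
        for i in range(width)
    ]


def bits_to_token(bits: List[int]) -> int:
    """Interpret a bit vector (most significant bit first) as a token id."""
    token = 0
    for bit in bits:
        token = (token << 1) | bit
    return token


# Clean input: all three (hypothetical) branches agree.
clean = bitwise_majority_vote([[1, 0, 1, 1], [1, 0, 1, 1], [1, 0, 1, 1]])

# Noisy input: one branch flips a single bit, but the vote absorbs it.
noisy = bitwise_majority_vote([[1, 0, 1, 1], [1, 0, 0, 1], [1, 0, 1, 1]])

clean_token = bits_to_token(clean)
noisy_token = bits_to_token(noisy)
```

In this toy case the merged token is the same for the clean and the noisy input, which is the kind of stability the consensus mechanism is meant to provide. A single-path quantizer would have no other branches to outvote the flipped bit.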

S-CycleGAN: Semantic Segmentation Enhanced CT-Ultrasound Image-to-Image Translation for Robotic Ultrasonography

Jun 03, 2024

Abstract: Ultrasound imaging is pivotal in various medical diagnoses due to its non-invasive nature and safety. In clinical practice, the accuracy and precision of ultrasound image analysis are critical. Recent advancements in deep learning show great capacity for processing medical images. However, the data-hungry nature of deep learning and the shortage of high-quality ultrasound image training data hinder the development of deep-learning-based ultrasound analysis methods. To address these challenges, we introduce an advanced deep learning model, dubbed S-CycleGAN, which generates high-quality synthetic ultrasound images from computed tomography (CT) data. This model incorporates semantic discriminators within a CycleGAN framework to ensure that critical anatomical details are preserved during the style transfer process. The synthetic images produced are used to augment training datasets for semantic segmentation models and robot-assisted ultrasound scanning system development, enhancing their ability to accurately parse real ultrasound imagery.

Abdominal Multi-Organ Segmentation Based on Feature Pyramid Network and Spatial Recurrent Neural Network

Aug 29, 2023

Abstract: As recent advances in AI are causing the decline of conventional diagnostic methods, the realization of end-to-end diagnosis is fast approaching. Ultrasound image segmentation is an important step in the diagnostic process. An accurate and robust segmentation model accelerates the process and reduces the burden on sonographers. In contrast to previous research, we take two inherent features of ultrasound images into consideration: (1) different organs and tissues vary in spatial size, and (2) the anatomical structures inside the human body form a relatively constant spatial relationship. Based on these two observations, we propose a new image segmentation model combining a Feature Pyramid Network (FPN) and a Spatial Recurrent Neural Network (SRNN). We discuss why we use the FPN to extract anatomical structures of different scales and how the SRNN is implemented to extract the spatial context features in abdominal ultrasound images.
