Yuanzhi Su

Balanced Residual Distillation Learning for 3D Point Cloud Class-Incremental Semantic Segmentation

Aug 02, 2024

Abstract: Class-incremental learning (CIL) has gained traction because it handles a continuous influx of information by learning from newly added classes while preventing catastrophic forgetting of the old ones. A performance breakthrough in CIL depends on effectively refining past knowledge from the base model and balancing it with new learning. However, this issue has not yet been addressed in current research. In this work, we explore the potential of CIL from these two perspectives and propose a novel balanced residual distillation framework (BRD-CIL) to raise the performance of CIL. Specifically, BRD-CIL introduces a residual distillation learning strategy that dynamically expands the network structure to capture the residuals between the base and target models, effectively refining past knowledge. Furthermore, BRD-CIL introduces a balanced pseudo-label learning strategy that generates a guidance mask to reduce the preference for old classes, ensuring balanced learning from new and old classes. We apply the proposed BRD-CIL to a challenging 3D point cloud semantic segmentation task, where the data are unordered and unstructured. Extensive experimental results demonstrate that BRD-CIL sets a new benchmark, with an outstanding balancing capability in class-biased scenarios.

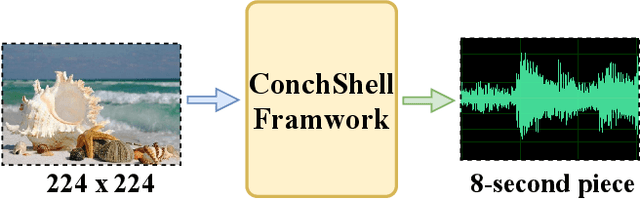

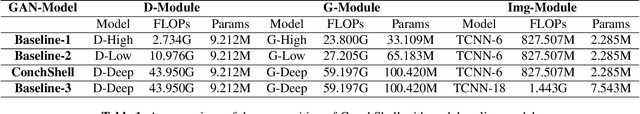

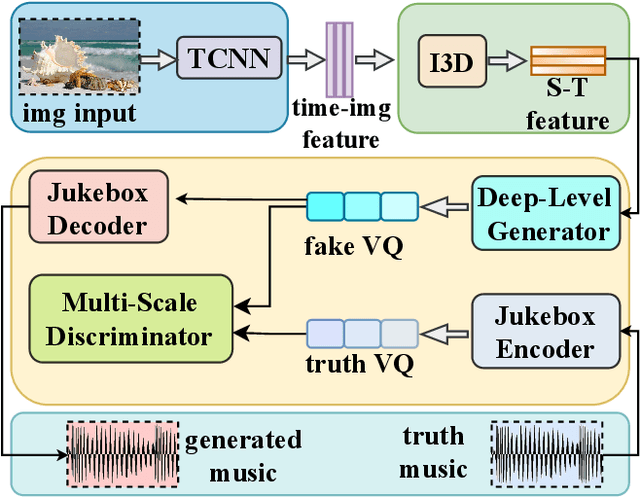

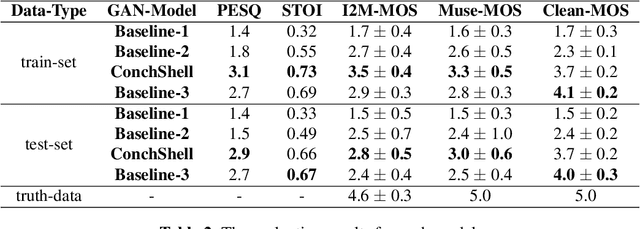

ConchShell: A Generative Adversarial Networks that Turns Pictures into Piano Music

Oct 11, 2022

Abstract: We present ConchShell, a multi-modal generative adversarial framework that takes pictures as input and generates piano music samples that match the picture context. Inspired by I3D, we introduce a novel image feature representation method, the time-convolutional neural network (TCNN), which is used to forge features for images in the temporal dimension. Although our image data consists of only six categories, we expect the proposed framework to be innovative and commercially meaningful. The project will provide technical ideas for work such as 3D game voice-overs, short-video soundtracks, and real-time generation of metaverse background music. We have also released a new dataset, the Beach-Ocean-Piano Dataset (BOPD), which contains more than 3,000 images and more than 1,500 piano pieces. This dataset will support multimodal image-to-music research.
