Yu-Hsun Lin

BRIEF: Backward Reduction of CNNs with Information Flow Analysis

Nov 01, 2018

Abstract: This paper proposes BRIEF, a backward reduction algorithm that explores compact CNN model designs from an information-flow perspective. By considering a network's dynamic behavior, the algorithm can remove a substantial number of non-zero weight parameters (redundant neural channels), which traditional model-compaction techniques cannot achieve. With the proposed algorithm, we achieve significant model reduction on ResNet-34 at ImageNet scale (32.3% reduction), roughly 3X the previous result (10.8%). Even for highly optimized models such as SqueezeNet and MobileNet, we achieve additional reductions of 10.81% and 37.56%, respectively, with negligible performance degradation.
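The "3X" comparison follows directly from the two percentages quoted in the abstract. A minimal sketch of that arithmetic is below; the helper names `reduction_ratio` and `remaining_fraction` are hypothetical and used only for illustration, and the abstract does not state exactly which quantity (for example, parameter count) the percentages measure.

```python
def reduction_ratio(new_pct: float, old_pct: float) -> float:
    """How many times larger the new reduction is than the old one."""
    return new_pct / old_pct


def remaining_fraction(reduction_pct: float) -> float:
    """Fraction of the original model left after a given percentage reduction."""
    return 1.0 - reduction_pct / 100.0


# Figures quoted in the abstract (ResNet-34, ImageNet).
ratio = reduction_ratio(32.3, 10.8)
print(f"BRIEF vs. previous result: {ratio:.2f}x")

# Additional reductions quoted for already-compact models.
for name, pct in [("SqueezeNet", 10.81), ("MobileNet", 37.56)]:
    print(f"{name}: {remaining_fraction(pct):.4f} of the original model remains")
```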

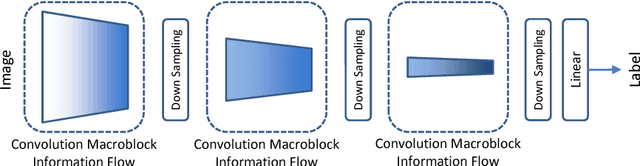

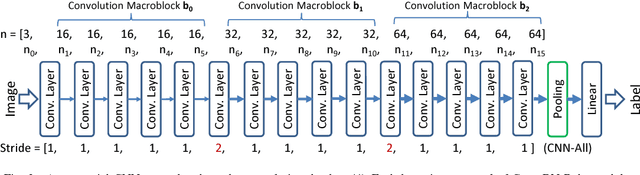

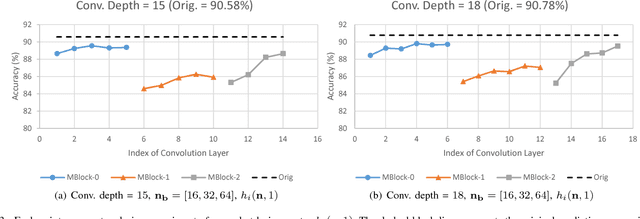

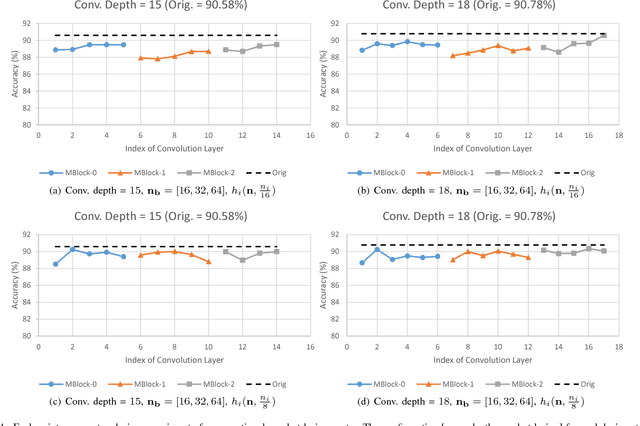

MBS: Macroblock Scaling for CNN Model Reduction

Sep 18, 2018

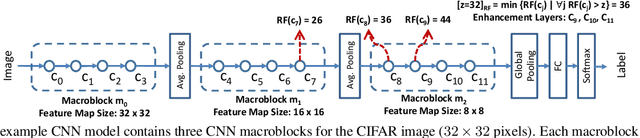

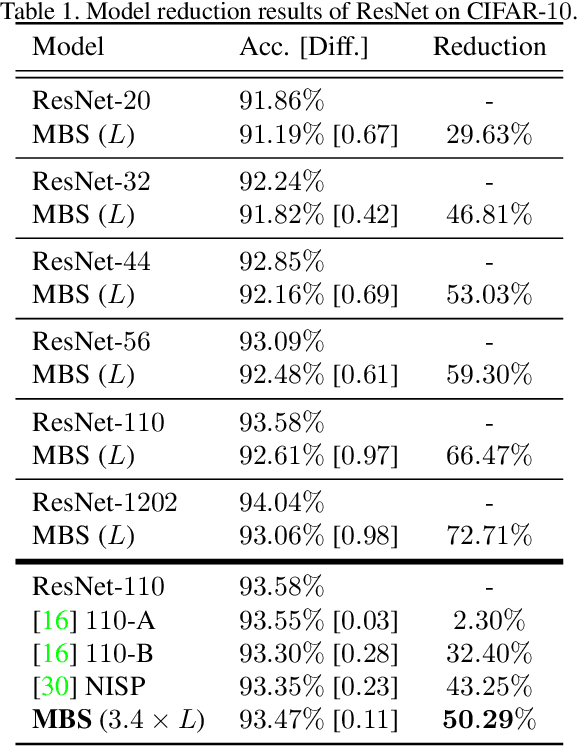

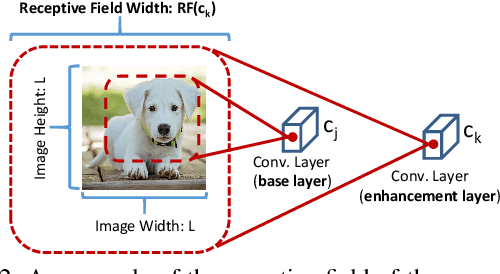

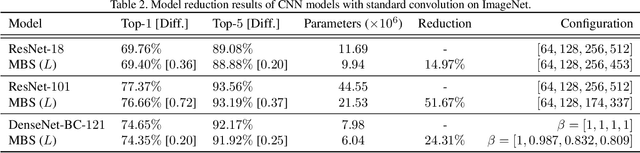

Abstract: We estimate the proper channel (width) scaling of Convolutional Neural Networks (CNNs) for model reduction. Unlike the traditional scaling method, which reduces every CNN channel width by the same scaling factor, we scale each CNN macroblock adaptively according to its information redundancy, measured by our proposed effective flops. Our proposed macroblock scaling (MBS) algorithm can be applied to various CNN architectures to reduce their model size. Applicable models range from compact CNNs such as MobileNet (25.53% reduction, ImageNet) and ShuffleNet (20.74% reduction, ImageNet) to ultra-deep ones such as ResNet-101 (51.67% reduction, ImageNet) and ResNet-1202 (72.71% reduction, CIFAR-10), all with negligible accuracy degradation. MBS also achieves better reduction at a much lower cost than the state-of-the-art optimization-based method. MBS's simplicity and efficiency, its flexibility to work with any CNN model, and its scalability to models of any depth make it an attractive choice for CNN model size reduction.
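The contrast between uniform width scaling and per-macroblock scaling can be illustrated with a small sketch. This is not the MBS algorithm: the abstract does not define how effective flops are computed, so the `redundancy` scores below are hypothetical placeholders, as are the block widths, the helper names, and the simplified model (a plain chain of 3x3 convolutions without biases).

```python
def conv_params(widths, in_channels=3, kernel=3):
    """Parameter count of a plain chain of kernel x kernel convolutions (no biases)."""
    total = 0
    prev = in_channels
    for w in widths:
        total += prev * w * kernel * kernel
        prev = w
    return total


def scale_widths(widths, factors):
    """Scale each block's channel width by its own factor, keeping at least 1 channel."""
    return [max(1, round(w * f)) for w, f in zip(widths, factors)]


# Hypothetical macroblock widths for a small CNN.
base_widths = [64, 128, 256, 512]

# Hypothetical per-block redundancy scores in [0, 1]; a stand-in for the
# paper's effective-flops measure, which is not specified in the abstract.
redundancy = [0.1, 0.2, 0.5, 0.6]

# Uniform scaling: every block shrinks by the same factor.
uniform = scale_widths(base_widths, [0.75] * len(base_widths))

# Adaptive scaling: blocks judged more redundant shrink more.
adaptive = scale_widths(base_widths, [1.0 - r for r in redundancy])


def reduction_pct(widths):
    """Percentage of parameters removed relative to the unscaled model."""
    return 100.0 * (1.0 - conv_params(widths) / conv_params(base_widths))


print("uniform :", uniform, f"{reduction_pct(uniform):.1f}% fewer parameters")
print("adaptive:", adaptive, f"{reduction_pct(adaptive):.1f}% fewer parameters")
```

The point of the sketch is only that the scaling factor becomes a per-block quantity; whether an adaptive choice preserves accuracy depends on how redundancy is measured, which is the contribution the abstract attributes to MBS.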
