Yoonhan Lee

Generalizing RNN-Transducer to Out-Domain Audio via Sparse Self-Attention Layers

Aug 22, 2021

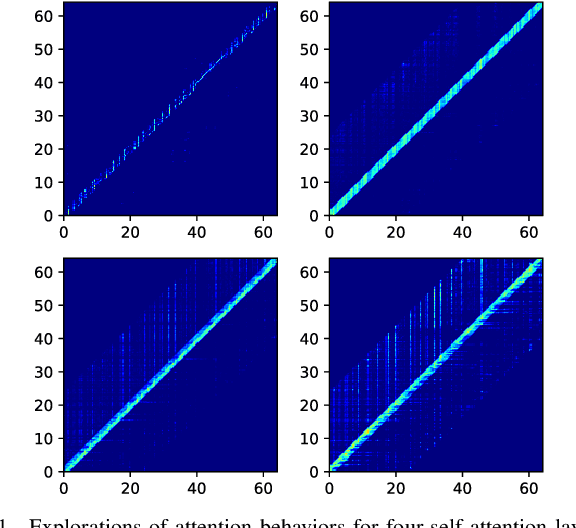

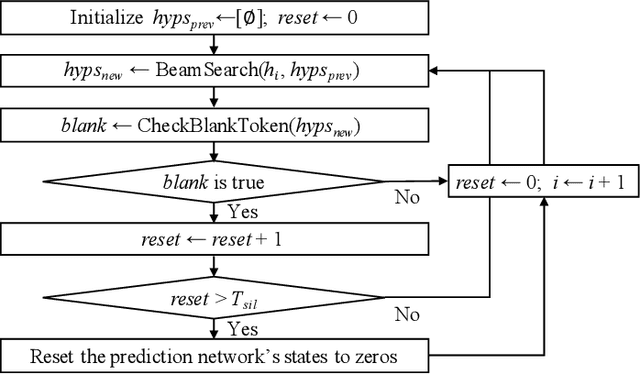

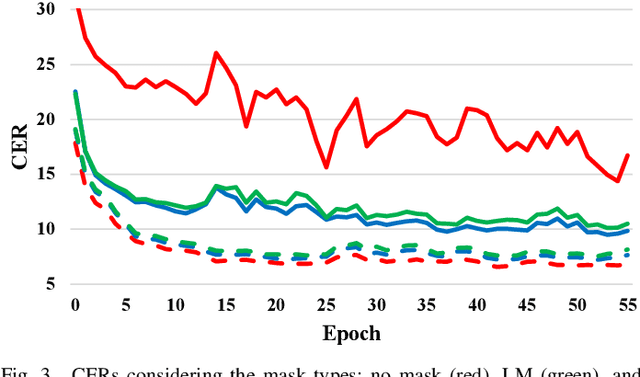

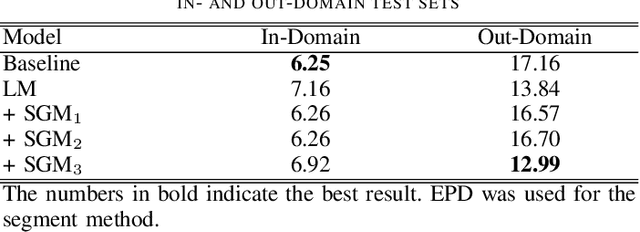

Abstract: Recurrent neural network transducers (RNN-T) are a promising end-to-end speech recognition framework that transduces input acoustic frames into a character sequence. The state-of-the-art encoder network for RNN-T is the Conformer, which effectively models local and global context through its convolution and self-attention layers. Although the Conformer RNN-T has shown outstanding performance (generally measured by word error rate, WER), most studies have been evaluated in settings where the training and test data are drawn from the same domain. The domain mismatch problem for the Conformer RNN-T has not yet been investigated intensively, even though it is an important issue for product-level speech recognition systems. In this study, we identify that the fully connected self-attention layers in the Conformer cause high deletion errors, specifically on long-form out-domain utterances. To address this problem, we introduce sparse self-attention layers for Conformer-based encoder networks, which exploit local and generalized global information by pruning most of the in-domain fitted global connections. Further, we propose a state reset method that generalizes the prediction network so that it can cope with long-form utterances. Applying the proposed methods to an out-domain test, we obtained 24.6% and 6.5% relative character error rate (CER) reductions compared to the fully connected and local self-attention layer-based Conformers, respectively.
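To make the idea of a sparse self-attention pattern concrete, the following is a minimal sketch of an attention mask that keeps a local band of connections plus a small set of global ones. The abstract does not specify which global connections are kept, so the strided choice below, along with the names `sparse_attention_mask`, `local_width`, and `global_stride`, is an assumption made purely for illustration and may differ from the paper's actual sparsity pattern.

```python
def sparse_attention_mask(num_frames, local_width, global_stride):
    """Build a boolean mask; mask[i][j] is True if frame i may attend to frame j.

    Assumption (not from the paper): the retained global connections are the
    frames whose index is a multiple of `global_stride`.
    """
    mask = []
    for i in range(num_frames):
        row = []
        for j in range(num_frames):
            is_local = abs(i - j) <= local_width   # band around the diagonal
            is_global = j % global_stride == 0     # sparse set of global frames
            row.append(is_local or is_global)
        mask.append(row)
    return mask


def density(mask):
    """Fraction of query-key pairs that remain connected."""
    total = sum(len(row) for row in mask)
    kept = sum(sum(row) for row in mask)
    return kept / total


if __name__ == "__main__":
    mask = sparse_attention_mask(num_frames=8, local_width=1, global_stride=4)
    for row in mask:
        print("".join("x" if keep else "." for keep in row))
    print("density:", density(mask))
```

A fully connected layer corresponds to a mask that is True everywhere (density 1.0), and a purely local layer corresponds to dropping the `is_global` term. The sparse variant sits between the two.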

Accelerating RNN Transducer Inference via One-Step Constrained Beam Search

Feb 10, 2020

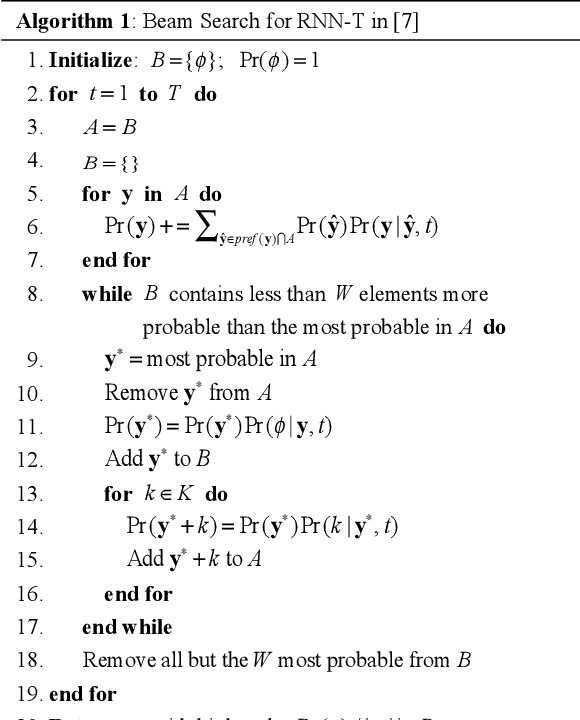

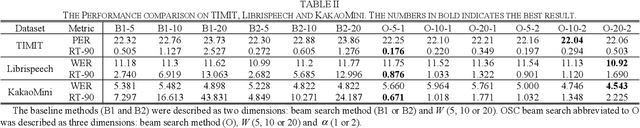

Abstract: We propose a one-step constrained (OSC) beam search to accelerate recurrent neural network transducer (RNN-T) inference. The original RNN-T beam search contains a while-loop that slows down decoding. The OSC beam search eliminates this while-loop by vectorizing multiple hypotheses. This vectorization is nontrivial because, within the original RNN-T beam search, the number of expansions can differ from one hypothesis to another. However, we found that in most cases each hypothesis is expanded only once per decoding step; we therefore constrain the maximum number of expansions to one, which allows the hypotheses to be vectorized. For further acceleration, we assign constraints to the prefixes of the hypotheses to prune the redundant search space. In addition, the OSC beam search performs a duplication check among hypotheses during decoding, as duplicates can undesirably shrink the search space. We achieved a significant speedup compared with other RNN-T beam search methods, together with lower phoneme and word error rates.
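The one-step constraint can be illustrated with a small sketch in which every hypothesis either emits blank or exactly one non-blank token per frame, so no inner while-loop is needed. This is a simplified illustration rather than the authors' implementation: the `joint_log_probs` callback, the toy scorer, and the choice to merge duplicate prefixes with log-sum-exp are all assumptions, and the prefix constraints mentioned in the abstract are omitted. In a practical system the loop over hypotheses would be replaced by one batched tensor operation.

```python
import math

BLANK = 0  # assumed index of the blank symbol


def log_add(a, b):
    """Numerically stable log(exp(a) + exp(b))."""
    m = max(a, b)
    return m + math.log(math.exp(a - m) + math.exp(b - m))


def osc_beam_search(num_frames, joint_log_probs, beam_size):
    """Beam search with at most one expansion per hypothesis per frame.

    joint_log_probs(t, prefix) is a hypothetical callback returning a list of
    log-probabilities over the vocabulary (index 0 is blank).
    """
    beam = {(): 0.0}  # prefix (tuple of tokens) -> log score
    for t in range(num_frames):
        candidates = {}
        for prefix, score in beam.items():
            for token, lp in enumerate(joint_log_probs(t, prefix)):
                # Blank keeps the prefix; any other token extends it once.
                new_prefix = prefix if token == BLANK else prefix + (token,)
                new_score = score + lp
                # Duplication check: merge identical prefixes (assumed rule).
                if new_prefix in candidates:
                    new_score = log_add(candidates[new_prefix], new_score)
                candidates[new_prefix] = new_score
        ranked = sorted(candidates.items(), key=lambda kv: kv[1], reverse=True)
        beam = dict(ranked[:beam_size])
    return max(beam.items(), key=lambda kv: kv[1])


def toy_joint(t, prefix):
    """Toy scorer: frame 0 favors token 1, later frames favor blank."""
    probs = [0.1, 0.8, 0.1] if t == 0 else [0.8, 0.1, 0.1]
    return [math.log(p) for p in probs]


if __name__ == "__main__":
    best_prefix, best_score = osc_beam_search(2, toy_joint, beam_size=2)
    print(best_prefix, math.exp(best_score))
```

Without the duplication check, the same prefix reached by two different paths would occupy two beam slots, which is the search-space shrinkage the abstract refers to.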
