Yong-Hyuk Moon

Object Re-identification via Spatial-temporal Fusion Networks and Causal Identity Matching

Aug 10, 2024

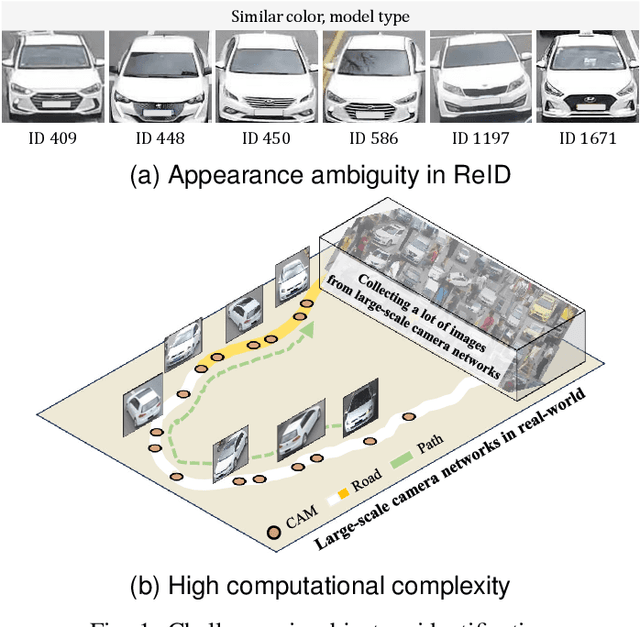

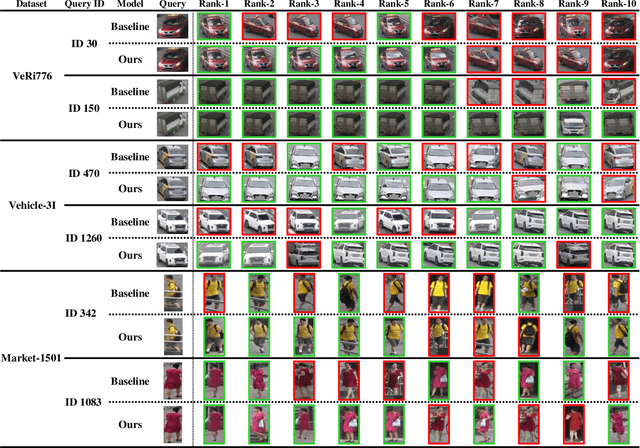

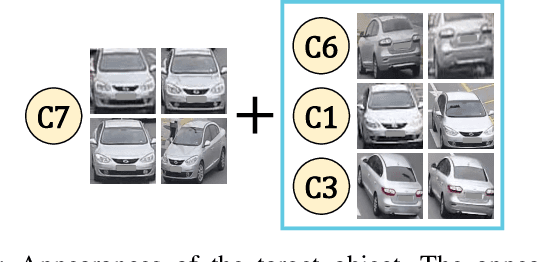

Abstract: Object re-identification (ReID) in large camera networks poses several challenges. First, the similar appearance of different objects degrades ReID performance, a problem that existing appearance-based ReID methods cannot address. Second, most ReID studies are performed in laboratory settings and do not consider ReID problems in real-world scenarios. To overcome these challenges, we introduce a novel ReID framework that leverages a spatial-temporal fusion network and causal identity matching (CIM). The framework estimates the camera network topology using the proposed adaptive Parzen window and combines appearance features with spatial-temporal cues within the fusion network. It performs strongly across several datasets, including VeRi776, Vehicle-3I, and Market-1501, reaching up to 99.70% rank-1 accuracy and 95.5% mAP. Furthermore, the proposed CIM approach, which dynamically assigns gallery sets based on the camera network topology, further improves ReID accuracy and robustness in real-world settings, as evidenced by a 94.95% mAP and a 95.19% F1 score on the Vehicle-3I dataset. The experimental results support the effectiveness of incorporating spatial-temporal information and CIM in real-world ReID scenarios regardless of the data domain (e.g., vehicle, person).

Spatial-temporal Vehicle Re-identification

Sep 03, 2023

Abstract: Vehicle re-identification (ReID) in a large-scale camera network is important for public safety, traffic control, and security. However, because of the appearance ambiguities of vehicles, previous appearance-based ReID methods often fail to track a vehicle across multiple cameras. To overcome this challenge, we propose a spatial-temporal vehicle ReID framework that estimates a reliable camera network topology based on the adaptive Parzen window method and optimally combines the appearance and spatial-temporal similarities through the fusion network. With the proposed methods, we achieved superior performance on the public dataset (VeRi776), with 99.64% rank-1 accuracy. The experimental results indicate that utilizing spatial and temporal information for ReID can improve the accuracy of appearance-based methods and effectively deal with appearance ambiguities.
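As a rough illustration of the Parzen window idea mentioned in the abstract, the sketch below estimates the density of transit times between one pair of cameras. It uses a plain fixed-bandwidth Gaussian kernel, not the adaptive variant the authors propose. The function name `parzen_density`, the sample transit times, and the bandwidth value are all hypothetical and chosen only for illustration.

```python
import math


def parzen_density(samples, x, bandwidth):
    """Fixed-bandwidth Gaussian Parzen window estimate of the density at x.

    Assumption: this is the textbook estimator, not the adaptive
    Parzen window described in the abstract.
    """
    if not samples:
        raise ValueError("samples must not be empty")
    if bandwidth <= 0:
        raise ValueError("bandwidth must be positive")
    # Normalizing constant so the estimate integrates to 1.
    norm = 1.0 / (len(samples) * bandwidth * math.sqrt(2.0 * math.pi))
    # Sum of Gaussian kernels centered on each observed sample.
    return norm * sum(
        math.exp(-0.5 * ((x - s) / bandwidth) ** 2) for s in samples
    )


# Hypothetical transit times (seconds) observed between two cameras.
transit_times = [28.0, 31.0, 30.0, 33.0, 29.0]

# A candidate match arriving after 30 s is far more plausible than one
# arriving after 120 s, even if both look alike.
plausible = parzen_density(transit_times, 30.0, bandwidth=2.0)
implausible = parzen_density(transit_times, 120.0, bandwidth=2.0)
print(plausible > implausible)
```

A density like this could serve as a spatial-temporal similarity score for a camera pair. How the framework adapts the window and fuses the score with appearance similarity is not specified in the abstract, so neither step is sketched here.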

Bespoke: A Block-Level Neural Network Optimization Framework for Low-Cost Deployment

Mar 03, 2023

Abstract: As deep learning models become popular, there is a growing need to deploy them to diverse device environments. Because it is costly to develop and optimize a neural network for every single environment, there is a line of research on efficiently searching for neural networks suited to multiple target environments. However, existing works in this setting still require many GPUs and incur high costs. Motivated by this, we propose a novel neural network optimization framework named Bespoke for low-cost deployment. Our framework searches for a lightweight model by replacing parts of an original model with randomly selected alternatives, each of which comes from a pretrained neural network or the original model. In practical terms, Bespoke has two significant merits. One is that it requires near-zero cost for designing the search space of neural networks. The other is that it exploits the sub-networks of public pretrained neural networks, so the total cost is minimal compared to existing works. We conduct experiments exploring Bespoke's merits, and the results show that it finds efficient models for multiple targets at very low cost.
