Yizhao Jin

Robust Behavior Cloning Via Global Lipschitz Regularization

Jun 24, 2025

Abstract:Behavior Cloning (BC) is an effective imitation learning technique and has even been adopted in some safety-critical domains such as autonomous vehicles. BC trains a policy to mimic the behavior of an expert by using a dataset composed of only state-action pairs demonstrated by the expert, without any additional interaction with the environment. However, during deployment, the policy observations may contain measurement errors or adversarial disturbances. Since the observations may deviate from the true states, they can mislead the agent into taking sub-optimal actions. In this work, we use a global Lipschitz regularization approach to enhance the robustness of the learned policy network. We then show that the resulting global Lipschitz property provides a robustness certificate to the policy with respect to different bounded norm perturbations. Then, we propose a way to construct a Lipschitz neural network that ensures the policy robustness. We empirically validate our theory across various environments in Gymnasium.

Keywords: Robust Reinforcement Learning; Behavior Cloning; Lipschitz Neural Network
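To illustrate how a global Lipschitz bound yields a robustness certificate, here is a minimal sketch that is not the paper's construction. It assumes a small ReLU network and uses the standard fact that, with 1-Lipschitz activations, the product of the layers' operator norms upper-bounds the network's Lipschitz constant. The helper names, the toy weights, and the choice of the infinity norm are all assumptions made for this example.

```python
import random

def linf_operator_norm(W):
    # Operator norm induced by the infinity norm: maximum absolute row sum.
    return max(sum(abs(w) for w in row) for row in W)

def lipschitz_upper_bound(weights):
    # ReLU is 1-Lipschitz, so the product of the layer norms bounds the
    # Lipschitz constant of the whole network (infinity norm in and out).
    bound = 1.0
    for W in weights:
        bound *= linf_operator_norm(W)
    return bound

def forward(weights, biases, x):
    # Plain feed-forward pass with ReLU on every layer except the last.
    h = list(x)
    for i, (W, b) in enumerate(zip(weights, biases)):
        h = [sum(w * v for w, v in zip(row, h)) + bi for row, bi in zip(W, b)]
        if i < len(weights) - 1:
            h = [max(0.0, v) for v in h]
    return h

def linf_dist(a, b):
    return max(abs(p - q) for p, q in zip(a, b))

# Toy policy network: 3 state dimensions -> 4 hidden units -> 2 action dimensions.
rng = random.Random(0)
weights = [
    [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(4)],
    [[rng.uniform(-1, 1) for _ in range(4)] for _ in range(2)],
]
biases = [[0.1] * 4, [0.0] * 2]

L = lipschitz_upper_bound(weights)
eps = 0.05  # assumed bound on the observation perturbation (infinity norm)
state = [0.3, -0.2, 0.5]
noisy = [s + rng.uniform(-eps, eps) for s in state]

deviation = linf_dist(forward(weights, biases, state), forward(weights, biases, noisy))
certified = L * eps  # the action cannot move by more than this
```

For any perturbation with infinity norm at most `eps`, `deviation` stays at or below `certified`. Regularizing the layer norms during training shrinks `L` and therefore tightens the certificate.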

ADAPTER-RL: Adaptation of Any Agent using Reinforcement Learning

Nov 20, 2023

Abstract:Deep Reinforcement Learning (DRL) agents frequently face challenges in adapting to tasks outside their training distribution, including issues with over-fitting, catastrophic forgetting and sample inefficiency. Although the application of adapters has proven effective in supervised learning contexts such as natural language processing and computer vision, their potential within the DRL domain remains largely unexplored. This paper delves into the integration of adapters in reinforcement learning, presenting an innovative adaptation strategy that demonstrates enhanced training efficiency and improvement of the base-agent, experimentally in the nanoRTS environment, a real-time strategy (RTS) game simulation. Our proposed universal approach is not only compatible with pre-trained neural networks but also with rule-based agents, offering a means to integrate human expertise.

Joint action loss for proximal policy optimization

Jan 26, 2023

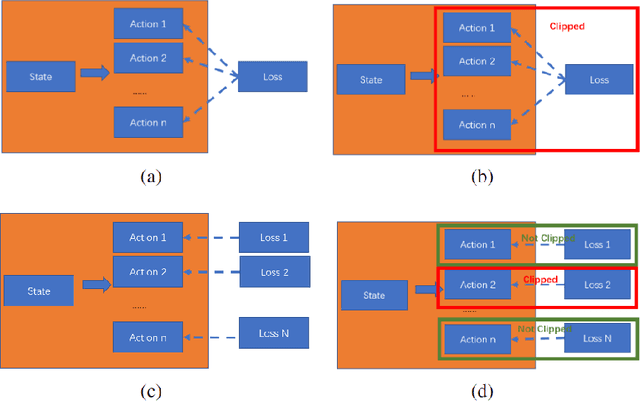

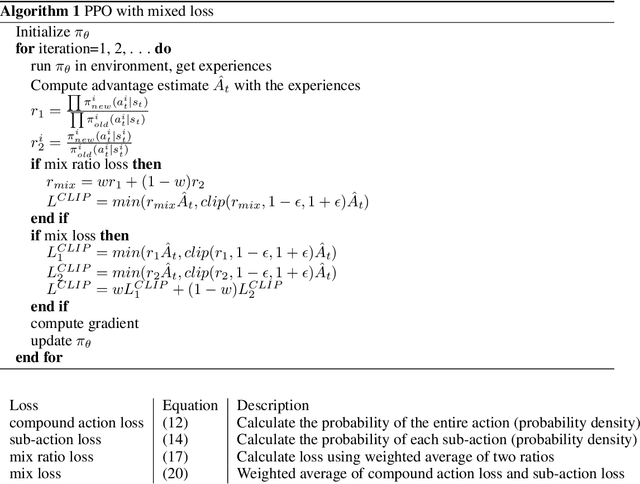

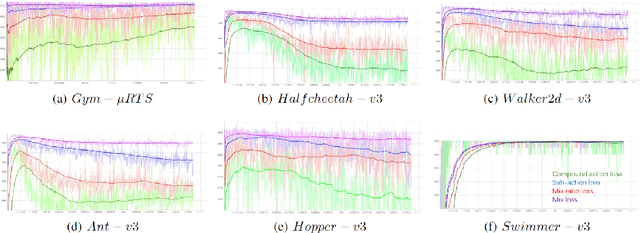

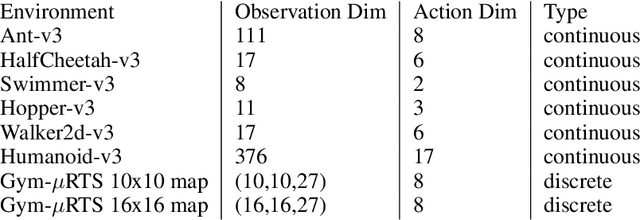

Abstract:PPO (Proximal Policy Optimization) is a state-of-the-art policy gradient algorithm that has been successfully applied to complex computer games such as Dota 2 and Honor of Kings. In these environments, an agent makes compound actions consisting of multiple sub-actions. PPO uses clipping to restrict policy updates. Although clipping is simple and effective, it is not efficient in its sample use. For compound actions, most PPO implementations consider the joint probability (density) of sub-actions, which means that if the ratio of a sample (state compound-action pair) exceeds the range, the gradient the sample produces is zero. Instead, for each sub-action we calculate the loss separately, which is less prone to clipping during updates thereby making better use of samples. Further, we propose a multi-action mixed loss that combines joint and separate probabilities. We perform experiments in Gym-$\mu$RTS and MuJoCo. Our hybrid model improves performance by more than 50% in different MuJoCo environments compared to OpenAI's PPO benchmark results. And in Gym-$\mu$RTS, we find the sub-action loss outperforms the standard PPO approach, especially when the clip range is large. Our findings suggest this method can better balance the use-efficiency and quality of samples.
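The difference between joint and per-sub-action clipping can be seen in a small sketch. It uses the standard PPO clipped surrogate. The function names are hypothetical, and averaging the per-sub-action terms is an assumption made for this example, since the abstract does not say how the separate losses are aggregated or how the mixed loss is weighted.

```python
import math

def clipped_surrogate(ratio, advantage, clip_eps):
    # Standard PPO clipped objective for a single probability ratio.
    clipped = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
    return min(ratio * advantage, clipped * advantage)

def joint_objective(new_logps, old_logps, advantage, clip_eps):
    # One ratio for the whole compound action: the product of the
    # sub-action ratios, computed from summed log-probabilities.
    ratio = math.exp(sum(new_logps) - sum(old_logps))
    return clipped_surrogate(ratio, advantage, clip_eps)

def separate_objective(new_logps, old_logps, advantage, clip_eps):
    # One clipped term per sub-action, averaged (the averaging is an assumption).
    terms = [
        clipped_surrogate(math.exp(n - o), advantage, clip_eps)
        for n, o in zip(new_logps, old_logps)
    ]
    return sum(terms) / len(terms)

# Three sub-actions whose individual ratios all lie inside [0.8, 1.2],
# while their product (about 1.296) falls outside the clip range.
sub_ratios = [1.15, 1.15, 0.98]
new_logps = [math.log(r) for r in sub_ratios]
old_logps = [0.0, 0.0, 0.0]

joint = joint_objective(new_logps, old_logps, advantage=1.0, clip_eps=0.2)
separate = separate_objective(new_logps, old_logps, advantage=1.0, clip_eps=0.2)
```

Here `joint` sits at the clipped value 1.2, so this sample contributes no gradient, while every term in `separate` is unclipped and still carries a learning signal.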

Partial advantage estimator for proximal policy optimization

Jan 26, 2023

Abstract:Estimation of value in policy gradient methods is a fundamental problem. Generalized Advantage Estimation (GAE) is an exponentially-weighted estimator of an advantage function similar to $\lambda$-return. It substantially reduces the variance of policy gradient estimates at the expense of bias. In practical applications, a truncated GAE is used due to the incompleteness of the trajectory, which results in a large bias during estimation. To address this challenge, instead of using the entire truncated GAE, we propose to take a part of it when calculating updates, which significantly reduces the bias resulting from the incomplete trajectory. We perform experiments in MuJoCo and $\mu$RTS to investigate the effect of different partial coefficients and sampling lengths. We show that our partial GAE approach yields better empirical results in both environments.
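As a rough sketch of the idea, the code below computes the usual truncated GAE over a sampled segment and then keeps only part of the estimates for the update. The abstract does not say which part is kept. This example assumes it is the earliest time steps, whose estimates sum the most TD residuals before the truncation point. The function names and the `keep` parameter are hypothetical.

```python
def truncated_gae(rewards, values, gamma, lam):
    # values holds len(rewards) + 1 entries; the last one bootstraps
    # the return beyond the end of the sampled segment.
    T = len(rewards)
    advantages = [0.0] * T
    running = 0.0
    for t in reversed(range(T)):
        # One-step TD residual, accumulated backwards with weight gamma * lam.
        delta = rewards[t] + gamma * values[t + 1] - values[t]
        running = delta + gamma * lam * running
        advantages[t] = running
    return advantages

def partial_gae(rewards, values, gamma, lam, keep):
    # Keep only the first `keep` estimates (assumption: the early steps,
    # which are least affected by the truncation of the trajectory).
    return truncated_gae(rewards, values, gamma, lam)[:keep]

rewards = [1.0, 1.0, 1.0]
values = [0.0, 0.0, 0.0, 0.0]
full = truncated_gae(rewards, values, gamma=0.5, lam=1.0)
part = partial_gae(rewards, values, gamma=0.5, lam=1.0, keep=2)
```

With these toy inputs, `full` is `[1.75, 1.5, 1.0]` and `part` keeps the first two entries. The last estimate rests on a single TD residual, which is the kind of heavily truncated term the partial approach leaves out.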
