Yinzheng Gu

2nd Place Solution to ECCV 2020 VIPriors Object Detection Challenge

Jul 17, 2020

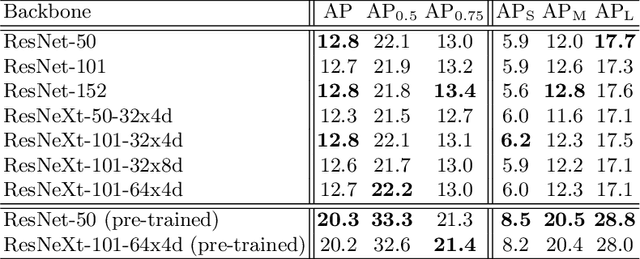

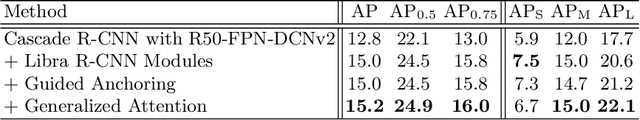

Abstract: In this report, we describe our approach to the ECCV 2020 VIPriors Object Detection Challenge, which took place from March to July 2020. We show that by using state-of-the-art data augmentation strategies, model designs, and post-processing ensemble methods, it is possible to overcome the difficulty of data shortage and obtain competitive results. Notably, our overall detection system achieves 36.6% AP on the COCO 2017 validation set using only 10K training images and no pre-training or transfer-learning weights, ranking 2nd place in the challenge.

Team JL Solution to Google Landmark Recognition 2019

Jun 10, 2019

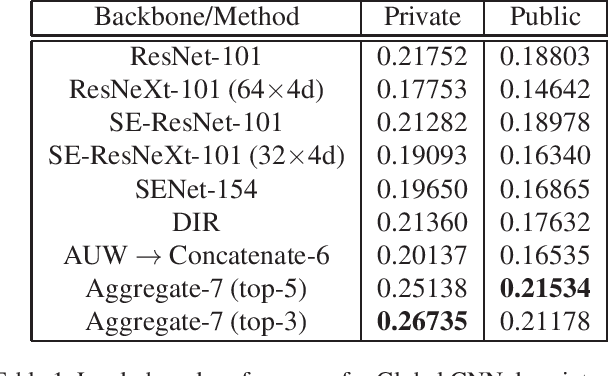

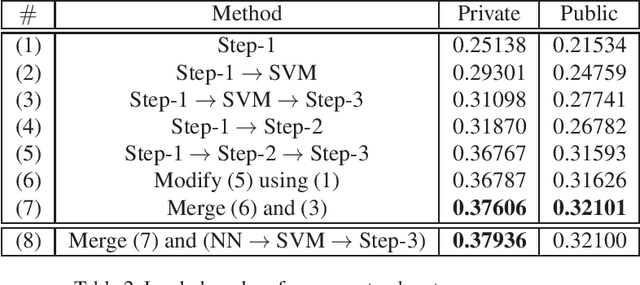

Abstract: In this paper, we describe our solution to the Google Landmark Recognition 2019 Challenge held on Kaggle. Due to the large number of classes, noisy data, imbalanced class sizes, and the presence of a significant number of distractors in the test set, our method is based mainly on retrieval techniques with both global and local CNN approaches. Our full pipeline, after ensembling the models and applying several re-ranking steps, scores 0.37606 GAP on the private leaderboard, which won 1st place in the competition.
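To make the reported GAP number concrete: Global Average Precision, as we understand the competition's definition, sorts all predictions (at most one per query image) by descending confidence and computes GAP = (1/M) Σ_{i=1}^{N} P(i) rel(i), where N is the number of predictions, M is the number of query images that actually depict a landmark, P(i) is the precision at rank i, and rel(i) is 1 if the i-th prediction is correct and 0 otherwise. The sketch below follows that definition; the input layout (tuples of query id, label, and confidence, with `None` marking distractor queries) and all function names are assumptions made for illustration, not the competition's scoring code.

```python
from typing import Dict, List, Optional, Tuple

# (query_id, predicted_landmark_id, confidence); assumed at most one prediction per query.
Prediction = Tuple[str, str, float]


def global_average_precision(
    predictions: List[Prediction],
    ground_truth: Dict[str, Optional[str]],
) -> float:
    """GAP = (1/M) * sum_i P(i) * rel(i) over predictions sorted by confidence."""
    # M counts queries that depict a landmark; distractors are marked with None.
    m = sum(1 for label in ground_truth.values() if label is not None)
    if m == 0:
        return 0.0
    ranked = sorted(predictions, key=lambda pred: pred[2], reverse=True)
    num_correct = 0
    total = 0.0
    for rank, (query_id, label, _confidence) in enumerate(ranked, start=1):
        true_label = ground_truth.get(query_id)
        if true_label is not None and label == true_label:
            num_correct += 1
            total += num_correct / rank  # P(i) at a relevant rank, where rel(i) = 1
    return total / m


truth = {"q1": "A", "q2": "B", "q3": None}  # q3 is a distractor image
confident_distractor = [("q1", "A", 0.9), ("q3", "A", 0.8), ("q2", "B", 0.7)]
suppressed_distractor = [("q1", "A", 0.9), ("q2", "B", 0.7), ("q3", "A", 0.1)]

print(global_average_precision(confident_distractor, truth))
print(global_average_precision(suppressed_distractor, truth))
```

The two calls return about 0.833 and 1.0. The same correct predictions score higher once the confident wrong prediction on the distractor query is ranked below them, which illustrates why distractors in the test set matter so much under this metric.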

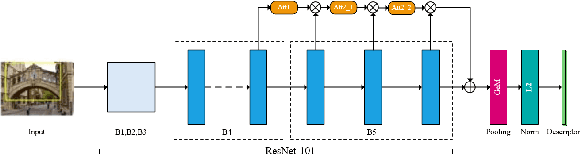

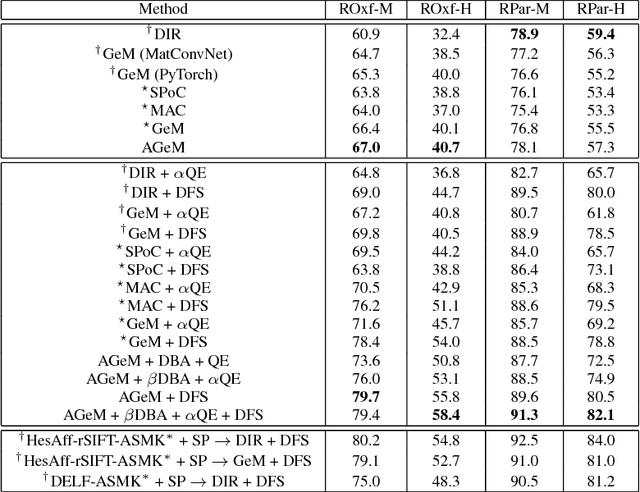

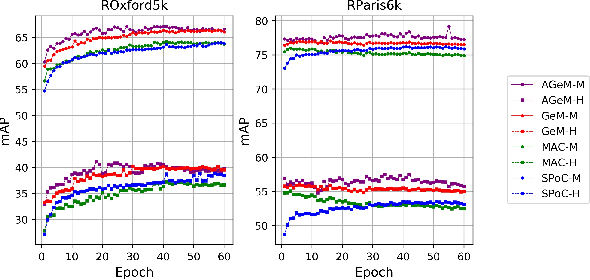

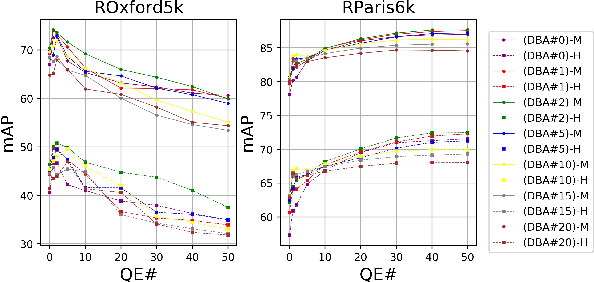

Attention-aware Generalized Mean Pooling for Image Retrieval

Nov 01, 2018

Abstract: It has been shown that image descriptors extracted by convolutional neural networks (CNNs) achieve remarkable results for retrieval problems. In this paper, we apply an attention mechanism to the CNN, which aims to enhance the features that correspond to important keypoints in the input image. The generated attention-aware features are then aggregated by generalized mean (GeM) pooling, the previous state of the art, followed by normalization to produce a compact global descriptor that can be efficiently compared to other image descriptors by the dot product. An extensive comparison of our proposed approach with state-of-the-art methods is performed on the new, challenging ROxford5k and RParis6k retrieval benchmarks. Results indicate significant improvement over previous work. In particular, our attention-aware GeM (AGeM) descriptor outperforms the state-of-the-art method on ROxford5k under the "Hard" evaluation protocol.
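The aggregation pipeline described above (attention-weighted features, then GeM pooling, then normalization and dot-product comparison) can be sketched in plain Python. GeM pooling computes, per channel k, f_k = ((1/|X_k|) Σ_{x ∈ X_k} x^p)^(1/p); it reduces to average pooling at p = 1 and approaches max pooling as p grows. The abstract does not say how the attention map is produced, so here it is a hypothetical, given spatial weight map in [0, 1] that rescales every channel. `apply_attention`, the default p = 3, the L2 choice of normalization, and the toy inputs are all illustrative assumptions, not the authors' implementation.

```python
import math
from typing import List

# Feature map laid out as [channel][row][column]; values assumed non-negative (post-ReLU).
FeatureMap = List[List[List[float]]]
AttentionMap = List[List[float]]  # [row][column], weights assumed to lie in [0, 1]


def apply_attention(feature_map: FeatureMap, attention: AttentionMap) -> FeatureMap:
    """Rescale every channel by the same spatial attention map (hypothetical form)."""
    return [
        [[v * a for v, a in zip(row, att_row)] for row, att_row in zip(channel, attention)]
        for channel in feature_map
    ]


def gem_pool(feature_map: FeatureMap, p: float = 3.0, eps: float = 1e-6) -> List[float]:
    """Generalized mean pooling: one value per channel, ((1/|X|) * sum(x^p))^(1/p)."""
    descriptor = []
    for channel in feature_map:
        # Clamp to eps so that x^p and the 1/p root stay well-defined for zeros.
        values = [max(v, eps) for row in channel for v in row]
        mean_of_powers = sum(v ** p for v in values) / len(values)
        descriptor.append(mean_of_powers ** (1.0 / p))
    return descriptor


def l2_normalize(vector: List[float]) -> List[float]:
    norm = math.sqrt(sum(v * v for v in vector))
    return [v / norm for v in vector] if norm > 0 else vector


def agem_descriptor(feature_map: FeatureMap, attention: AttentionMap, p: float = 3.0) -> List[float]:
    """Attention-weighted features -> GeM pooling -> L2 normalization."""
    return l2_normalize(gem_pool(apply_attention(feature_map, attention), p))


def similarity(a: List[float], b: List[float]) -> float:
    """Dot product; equals cosine similarity because descriptors are L2-normalized."""
    return sum(x * y for x, y in zip(a, b))


# Toy 2-channel, 2x2 feature maps for a query and a database image.
query = [[[1.0, 2.0], [3.0, 4.0]], [[0.5, 0.5], [0.5, 0.5]]]
database = [[[0.9, 2.1], [2.8, 4.2]], [[0.4, 0.6], [0.5, 0.5]]]
attention = [[0.2, 0.4], [0.8, 1.0]]

score = similarity(agem_descriptor(query, attention), agem_descriptor(database, attention))
print(f"similarity: {score:.4f}")
```

Because each descriptor has unit L2 norm, ranking a database by dot product is equivalent to ranking by cosine similarity, which is what keeps the comparison step cheap.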
