Jinbin Xie

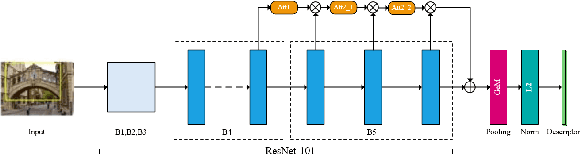

Attention-aware Generalized Mean Pooling for Image Retrieval

Nov 01, 2018

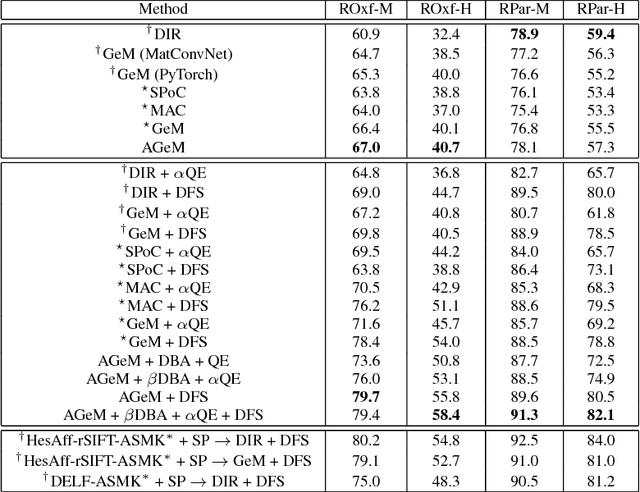

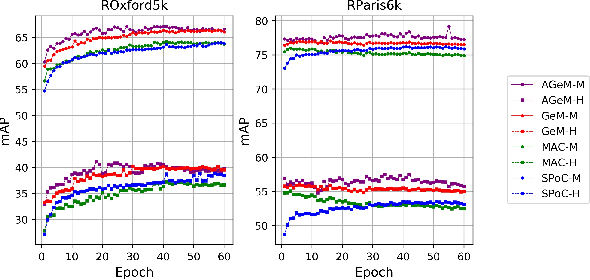

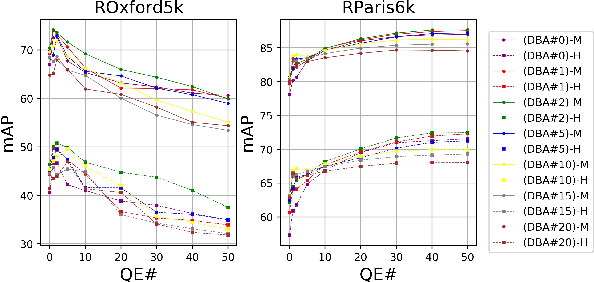

Abstract:It has been shown that image descriptors extracted by convolutional neural networks (CNNs) achieve remarkable results for retrieval problems. In this paper, we apply attention mechanism to CNN, which aims at enhancing more relevant features that correspond to important keypoints in the input image. The generated attention-aware features are then aggregated by the previous state-of-the-art generalized mean (GeM) pooling followed by normalization to produce a compact global descriptor, which can be efficiently compared to other image descriptors by the dot product. An extensive comparison of our proposed approach with state-of-the-art methods is performed on the new challenging ROxford5k and RParis6k retrieval benchmarks. Results indicate significant improvement over previous work. In particular, our attention-aware GeM (AGeM) descriptor outperforms state-of-the-art method on ROxford5k under the `Hard' evaluation protocal.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge