Yichuan Chen

Reinforcement Learning Based Optimal Camera Placement for Depth Observation of Indoor Scenes

Oct 21, 2021

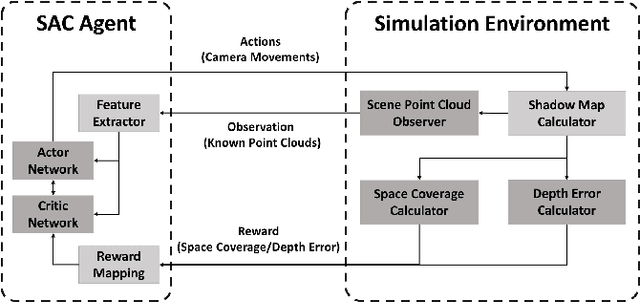

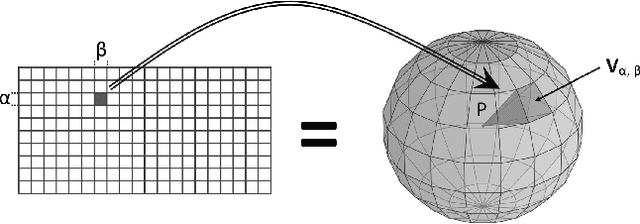

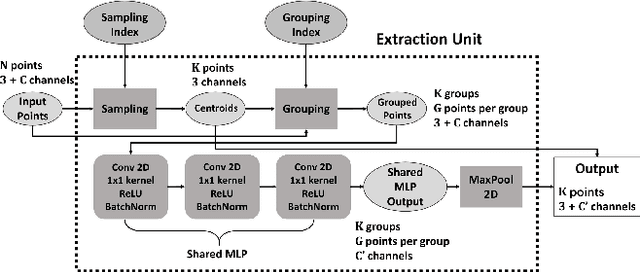

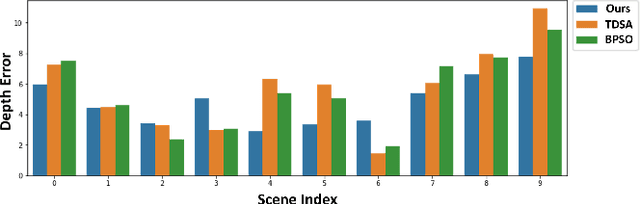

Abstract: Finding the most task-friendly camera configuration, known as the optimal camera placement (OCP) problem, is of great importance in tasks that use multiple cameras. However, few existing OCP solutions specialize in depth observation of indoor scenes, and most general-purpose solutions work offline. To address this gap, this paper proposes an online, reinforcement-learning-based OCP solution for depth observation of indoor scenes. The proposed solution comprises a simulation environment, which implements scene observation and reward estimation using shadow maps, and an agent network, which contains a soft actor-critic (SAC)-based reinforcement learning backbone and a feature extractor that extracts features from the observed point cloud layer by layer. Comparative experiments against two state-of-the-art optimization-based offline methods are conducted. The experimental results indicate that the proposed system outperforms the baselines in seven out of ten test scenes, obtaining lower depth observation error. Its total error over all test scenes is also less than 90% of that of the baselines. Therefore, the proposed system is better suited to depth camera placement in scenarios where there is no prior knowledge of the scenes or where a lower depth observation error is the main objective.
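To give a rough intuition for how a shadow map can decide which scene points a camera observes, the following is a minimal sketch and not the paper's implementation. It assumes a pinhole camera at the origin looking along +z, a small square depth buffer, and a point-cloud scene; the function name `shadow_map_visibility` and the parameters `focal`, `res`, and `eps` are hypothetical and chosen only for illustration.

```python
import numpy as np

def shadow_map_visibility(points, focal=1.0, res=32, eps=1e-3):
    """Boolean mask of points visible from a pinhole camera at the origin
    looking along +z. Illustrative assumption, not the paper's renderer."""
    points = np.asarray(points, dtype=float)
    z = points[:, 2]
    in_front = z > 0
    # Avoid dividing by zero or negative depth for points behind the camera.
    safe_z = np.where(in_front, z, 1.0)
    # Perspective projection to normalized image coordinates in (-1, 1).
    u = focal * points[:, 0] / safe_z
    v = focal * points[:, 1] / safe_z
    in_view = in_front & (np.abs(u) < 1) & (np.abs(v) < 1)
    # Map normalized coordinates to integer pixel indices.
    px = np.clip(((u + 1) / 2 * res).astype(int), 0, res - 1)
    py = np.clip(((v + 1) / 2 * res).astype(int), 0, res - 1)
    # Shadow map: the nearest depth recorded in each pixel.
    depth = np.full((res, res), np.inf)
    np.minimum.at(depth, (py[in_view], px[in_view]), z[in_view])
    # A point is visible if it is (almost) the nearest point in its pixel.
    return in_view & (z <= depth[py, px] + eps)
```

Under these assumptions, the fraction of visible points (`mask.mean()`) could serve as a crude stand-in for an observation-quality signal; the reward actually used in the paper is based on depth observation error and is not reproduced here.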
