Yi'an Chen

Generative Latent Flow: A Framework for Non-adversarial Image Generation

May 24, 2019

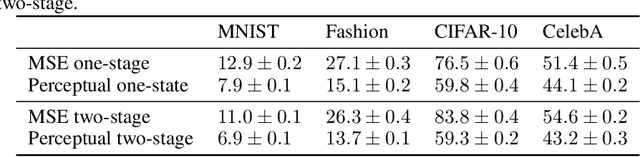

Abstract: Generative Adversarial Networks (GANs) have been shown to outperform non-adversarial generative models in image generation quality by a large margin. Recently, researchers have looked into improving non-adversarial alternatives that can close this gap in generation quality while avoiding some common issues of GANs, such as unstable training and mode collapse. Examples in this direction include Two-stage VAE and Generative Latent Nearest Neighbors. However, a major drawback of these models is that they are slow to train, and in particular, they require two training stages. To address this, we propose Generative Latent Flow (GLF), which uses an auto-encoder to learn the mapping to and from the latent space, and an invertible flow to map the distribution in the latent space to simple i.i.d. noise. The advantages of our method include a simple conceptual framework, single-stage training, and fast convergence. Quantitatively, the generation quality of our model significantly outperforms that of VAEs and is competitive with GAN benchmarks on commonly used datasets.
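
The flow component described in the abstract can be illustrated with a minimal sketch. The abstract does not specify the flow architecture or the training loss, so everything below is an assumption made for illustration: a single affine coupling layer with hypothetical scalar weights `w_s` and `w_t`, and a standard normal negative log-likelihood on the transformed latents. The latent vector `z` stands in for an auto-encoder output; no encoder or decoder is implemented here.

```python
import math
import random


def coupling_forward(z, w_s, w_t):
    """Map a latent vector z to noise eps with one affine coupling layer.

    Assumption: the scale and shift depend only on the sum of the first
    half of z, via the hypothetical scalar weights w_s and w_t.
    """
    half = len(z) // 2
    z1, z2 = z[:half], z[half:]
    h = sum(z1)
    s = math.tanh(w_s * h)   # bounded log-scale
    t = w_t * h              # shift
    eps2 = [x * math.exp(s) + t for x in z2]
    log_det = s * len(z2)    # log |det Jacobian| of the transform
    return z1 + eps2, log_det


def coupling_inverse(eps, w_s, w_t):
    """Exact inverse of coupling_forward: map noise eps back to a latent z."""
    half = len(eps) // 2
    e1, e2 = eps[:half], eps[half:]
    h = sum(e1)              # first half is unchanged, so h is recoverable
    s = math.tanh(w_s * h)
    t = w_t * h
    z2 = [(x - t) * math.exp(-s) for x in e2]
    return e1 + z2


def standard_normal_nll(eps, log_det):
    """Negative log-likelihood of z under the flow with an i.i.d. N(0, 1) prior."""
    log_prior = sum(-0.5 * e * e - 0.5 * math.log(2 * math.pi) for e in eps)
    return -(log_prior + log_det)


random.seed(0)
z = [random.gauss(0.0, 1.0) for _ in range(6)]   # stand-in for an encoder output
eps, log_det = coupling_forward(z, 0.3, 0.5)
z_back = coupling_inverse(eps, 0.3, 0.5)
nll = standard_normal_nll(eps, log_det)
```

Under these assumptions, sampling would draw `eps` from i.i.d. standard normal noise, apply `coupling_inverse` to obtain a latent vector, and pass that vector through the decoder. Because the layer is exactly invertible, `z_back` matches `z` up to floating-point error.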
