Yashasvi Agrawal

Online Self-supervised Scene Segmentation for Micro Aerial Vehicles

Jun 13, 2018

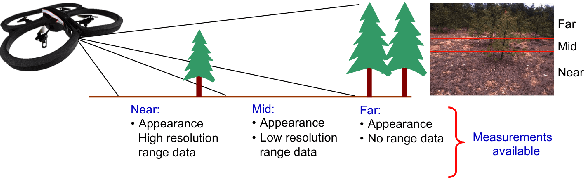

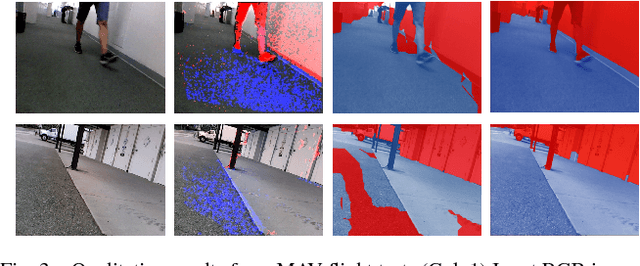

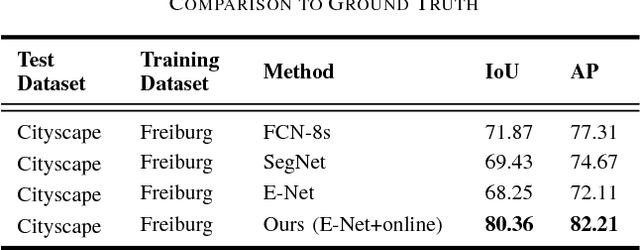

Abstract: Recently, there have been numerous advances in the development of lightweight Micro Aerial Vehicles (MAVs) that are constrained in payload and power. As these robots aspire to high-speed autonomous flight in complex, dynamic environments, robust long-range scene understanding becomes critical. Existing approaches are limited either by sensor capabilities, in the case of geometry-based methods, or by the need for large amounts of manually annotated training data, in the case of data-driven methods. This motivates building systems that alleviate these problems by exploiting the complementary strengths of geometry-based and data-driven methods. In this paper, we take a step in this direction and propose a generic framework for adaptive scene segmentation using self-supervised online learning. We present this framework in the context of vision-based autonomous MAV flight and demonstrate the efficacy of the proposed system through extensive experiments on benchmark datasets and real-world field tests.
