Yapeng Gao

Optimal Stroke Learning with Policy Gradient Approach for Robotic Table Tennis

Sep 07, 2021

Abstract: Learning to play table tennis is a challenging task for robots because of the wide variety of strokes required. Recent advances in deep Reinforcement Learning (RL) have shown potential in learning the optimal strokes. However, the large amount of exploration required still limits the applicability of RL in real scenarios. In this paper, we first propose a realistic simulation environment in which several models are built for the ball's dynamics and the robot's kinematics. Instead of training an end-to-end RL model, we decompose the problem into two stages: predicting the ball's hitting state, and then learning the racket strokes from it. A novel policy gradient approach with a TD3 backbone is proposed for the second stage. In the experiments, we show that the proposed approach significantly outperforms existing RL methods in simulation. To transfer from simulation to reality, we develop an efficient retraining method and test it in three real scenarios, achieving a success rate of 98%.
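
The abstract does not describe the authors' modifications, so the following is only a minimal sketch of the standard TD3 critic target (Fujimoto et al., 2018) that a TD3 backbone builds on. It shows target policy smoothing with clipped Gaussian noise, clipped double-Q learning, and Polyak-averaged target parameters. The function names, the scalar action, and the default hyperparameters are illustrative assumptions, not the authors' code.

```python
import random

def td3_target(reward, done, next_state, actor_target, q1_target, q2_target,
               gamma=0.99, noise_std=0.2, noise_clip=0.5,
               act_low=-1.0, act_high=1.0, rng=random):
    # Hypothetical helper, standard TD3 target (not the paper's variant).
    # Target policy smoothing: perturb the target action with clipped Gaussian noise.
    eps = max(-noise_clip, min(noise_clip, rng.gauss(0.0, noise_std)))
    # Keep the smoothed action inside the (assumed) action bounds.
    next_action = max(act_low, min(act_high, actor_target(next_state) + eps))
    # Clipped double-Q: use the smaller of the two target critics to curb overestimation.
    q_next = min(q1_target(next_state, next_action),
                 q2_target(next_state, next_action))
    # No bootstrapping past a terminal transition.
    return reward + gamma * (1.0 - float(done)) * q_next

def soft_update(target_params, params, tau=0.005):
    # Polyak averaging of target-network parameters (flat lists for illustration).
    return [(1.0 - tau) * t + tau * p for t, p in zip(target_params, params)]
```

If every transition is terminal (`done=True`), as in a one-step setting, the bootstrapped term vanishes and the target reduces to the reward.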

Sample-efficient Reinforcement Learning in Robotic Table Tennis

Nov 11, 2020

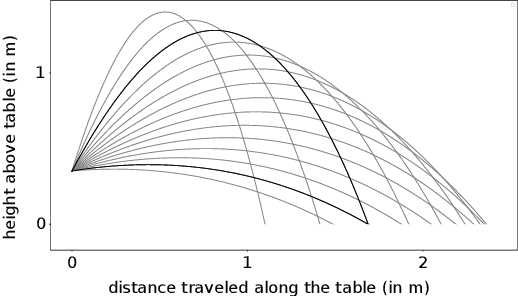

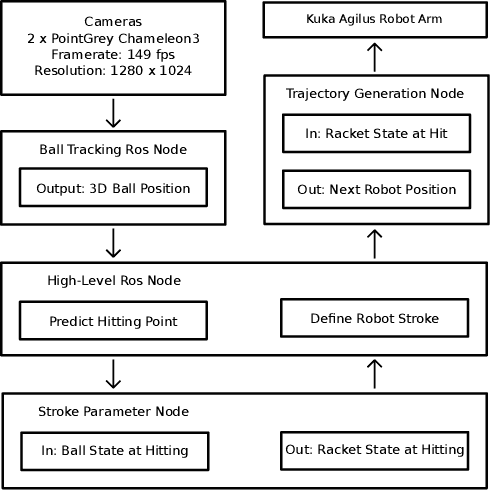

Abstract: Reinforcement learning (RL) has recently shown impressive success in various computer games and simulations. Most of these successes rely on a large number of training episodes. For typical robotic applications, however, the number of feasible attempts is very limited. In this paper, we present a sample-efficient RL algorithm applied to the example of a table tennis robot. In table tennis, every stroke is different, varying in placement, speed, and spin. Therefore, an accurate return has to be found as a function of a high-dimensional continuous state space. To make learning in few trials possible, the method is embedded into our robot system. In this way, we can use a one-step environment. The state space depends on the ball at hitting time (position, velocity, spin), and the action is the racket state (orientation, velocity) at hitting. An actor-critic based deterministic policy gradient algorithm was developed for accelerated learning. Our approach shows competitive performance both in simulation and on the real robot in different challenging scenarios. Accurate results are always obtained in under 200 episodes of training. The video presenting our experiments is available at https://youtu.be/uRAtdoL6Wpw.
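
In a one-step environment, each episode is a single transition: the state is the ball at hitting time, the action is the racket state, and the episode ends with the reward. The critic can therefore regress Q(s, a) directly onto the reward, with no bootstrapped next-state term, while the actor follows the deterministic policy gradient of Q(s, π(s)). The following minimal sketch illustrates this on a hypothetical 1-D toy problem. The reward −(a − 2s)², the quadratic critic features, the linear actor, and all hyperparameters are assumptions for illustration. For simplicity, the neural-network critic one would normally use is swapped for a batch least-squares fit. This is not the authors' algorithm.

```python
import random

def features(s, a):
    # Hypothetical quadratic critic features in (state, action).
    return [1.0, s, a, s * s, s * a, a * a]

def solve(A, b):
    # Gaussian elimination with partial pivoting for a small dense system A x = b.
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x

def fit_critic(buffer, ridge=1e-9):
    # One-step episodes: the critic target is the reward itself (no bootstrapping).
    # Ridge-regularised least squares via the normal equations.
    k = 6
    A = [[ridge if i == j else 0.0 for j in range(k)] for i in range(k)]
    b = [0.0] * k
    for s, a, r in buffer:
        phi = features(s, a)
        for i in range(k):
            b[i] += phi[i] * r
            for j in range(k):
                A[i][j] += phi[i] * phi[j]
    return solve(A, b)

def dq_da(w, s, a):
    # Partial derivative of Q(s, a) = w . features(s, a) with respect to a.
    return w[2] + w[4] * s + 2.0 * w[5] * a

def train(reward_fn, rounds=20, episodes_per_round=10, actor_steps=200,
          lr=0.05, noise=0.5, seed=0):
    rng = random.Random(seed)
    theta = [0.0, 0.0]  # hypothetical linear actor: a = theta[0] + theta[1] * s
    buffer = []
    for _ in range(rounds):
        for _ in range(episodes_per_round):
            s = rng.uniform(-1.0, 1.0)
            a = theta[0] + theta[1] * s + rng.gauss(0.0, noise)  # exploration noise
            buffer.append((s, a, reward_fn(s, a)))  # each episode is one (s, a, r) step
        w = fit_critic(buffer)  # refit the critic on all transitions collected so far
        for _ in range(actor_steps):
            s = rng.uniform(-1.0, 1.0)
            # Deterministic policy gradient: dQ/da at a = pi(s), times d pi / d theta = [1, s].
            g = dq_da(w, s, theta[0] + theta[1] * s)
            theta[0] += lr * g
            theta[1] += lr * g * s
    return theta
```

For example, `train(lambda s, a: -(a - 2 * s) ** 2)` should return parameters close to `[0.0, 2.0]`, that is, the policy a = 2s that maximises the toy reward. The noise-free toy reward lies exactly in the span of the critic features, so the fit is exact here. With real robot data, the critic would only be approximate.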