Yangzheng Wu

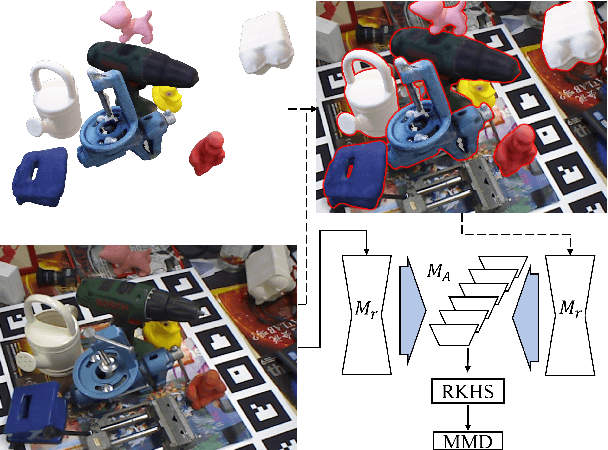

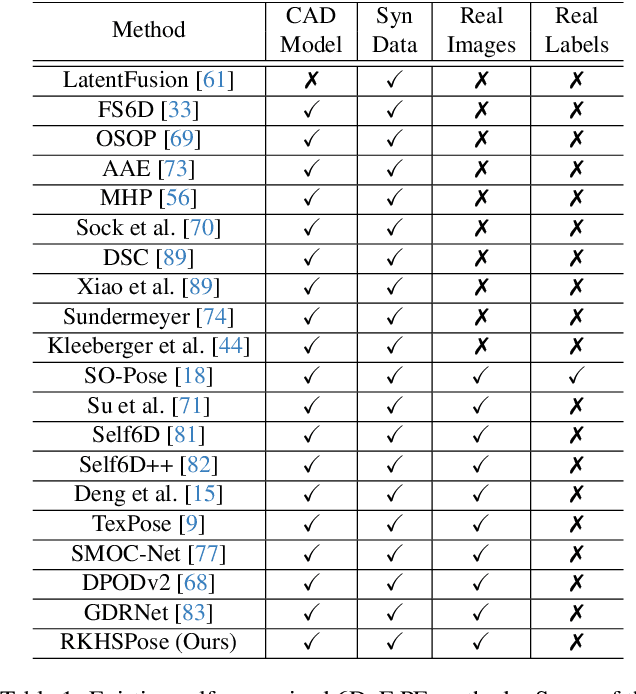

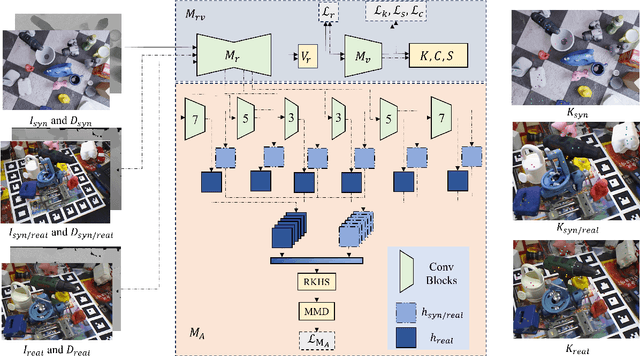

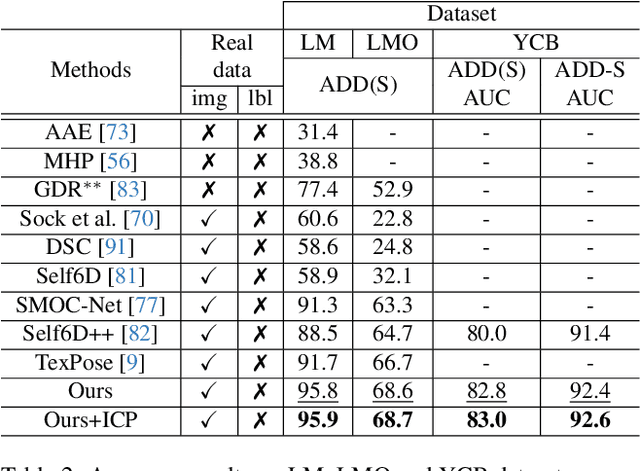

Pseudo-keypoint RKHS Learning for Self-supervised 6DoF Pose Estimation

Nov 18, 2023

Abstract: This paper addresses the simulation-to-real domain gap in 6DoF pose estimation (PE) and proposes a novel self-supervised 6DoF PE framework based on keypoint radial voting, which narrows this gap using a learnable kernel in a reproducing kernel Hilbert space (RKHS). Unlike previous iterative matching methods, we formulate the domain gap as a distance in a high-dimensional feature space. We propose an adapter network that evolves the network parameters from the source domain, trained extensively on synthetic data with synthetic poses, to the target domain, trained on real data. Importantly, the real-data training uses only pseudo-poses estimated from pseudo-keypoints, and therefore requires no real ground-truth annotations. RKHSPose achieves state-of-the-art performance on three commonly used 6DoF PE datasets: LINEMOD (+4.2%), Occlusion LINEMOD (+2%), and YCB-Video (+3%). It also compares favorably to fully supervised methods on all six applicable BOP core datasets, achieving within -10.8% to -0.3% of the top fully supervised results.
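
As an illustration of what "a distance in a high-dimensional feature space" can look like, the sketch below computes a (biased) squared maximum mean discrepancy (MMD), one standard RKHS distance between two sets of feature vectors. This is an assumption for illustration only: the abstract does not state which distance RKHSPose uses, and the paper's kernel is learnable, whereas the Gaussian kernel here is fixed. The names `rbf`, `mmd2`, and the toy feature sets are hypothetical.

```python
import math

def rbf(x, y, sigma=1.0):
    """Fixed Gaussian (RBF) kernel; the paper's kernel is learnable instead."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-sq_dist / (2.0 * sigma ** 2))

def mmd2(xs, ys, sigma=1.0):
    """Biased estimate of the squared MMD between feature sets xs and ys."""
    kxx = sum(rbf(a, b, sigma) for a in xs for b in xs) / (len(xs) * len(xs))
    kyy = sum(rbf(a, b, sigma) for a in ys for b in ys) / (len(ys) * len(ys))
    kxy = sum(rbf(a, b, sigma) for a in xs for b in ys) / (len(xs) * len(ys))
    return kxx + kyy - 2.0 * kxy

# Toy "synthetic" features and two "real" feature sets at different offsets.
synthetic = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
real_near = [(x + 0.1, y + 0.1) for x, y in synthetic]
real_far = [(x + 2.0, y + 2.0) for x, y in synthetic]

gap_same = mmd2(synthetic, synthetic)
gap_near = mmd2(synthetic, real_near)
gap_far = mmd2(synthetic, real_far)
```

In this toy setup the distance is zero for identical sets and grows as the "real" features drift away from the "synthetic" ones, which is the kind of quantity a domain-adaptation objective would try to reduce.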

Learning Better Keypoints for Multi-Object 6DoF Pose Estimation

Aug 15, 2023

Abstract: We investigate the impact of pre-defined keypoints on pose estimation, and find that accuracy and efficiency can be improved by training a graph network to select a set of dispersed keypoints with similarly distributed votes. These votes, learned by a regression network to accumulate evidence for the keypoint locations, can be regressed more accurately than votes for keypoints selected by previous heuristic algorithms. The proposed KeyGNet, supervised by a combined loss measuring both Wasserstein distance and dispersion, learns the color and geometry features of the target objects to estimate optimal keypoint locations. Experiments demonstrate that the keypoints selected by KeyGNet improved accuracy on all evaluation metrics across all seven datasets tested, for three keypoint voting methods. On the challenging Occlusion LINEMOD dataset, ADD(S) improved notably by +16.4% with PVN3D, and all core BOP datasets showed an AR improvement for all objects of between +1% and +21.5%. There was also a notable increase in performance when transitioning from single-object to multiple-object training using KeyGNet keypoints, essentially eliminating the SISO-MIMO gap for Occlusion LINEMOD.

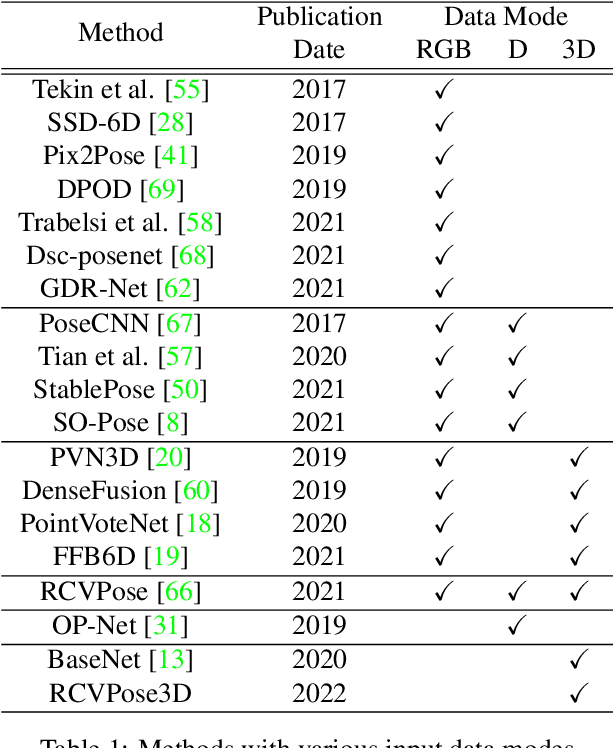

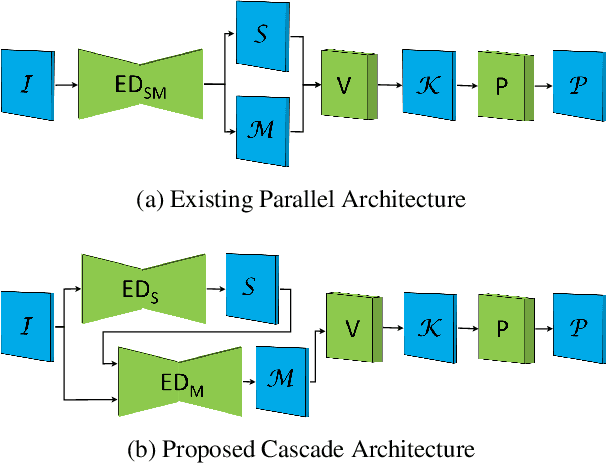

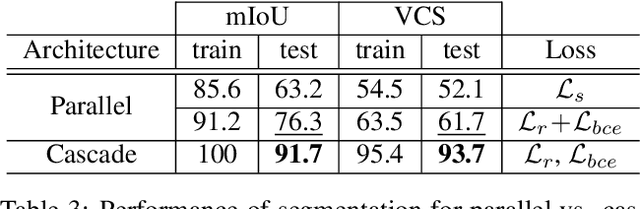

Keypoint Cascade Voting for Point Cloud Based 6DoF Pose Estimation

Oct 14, 2022

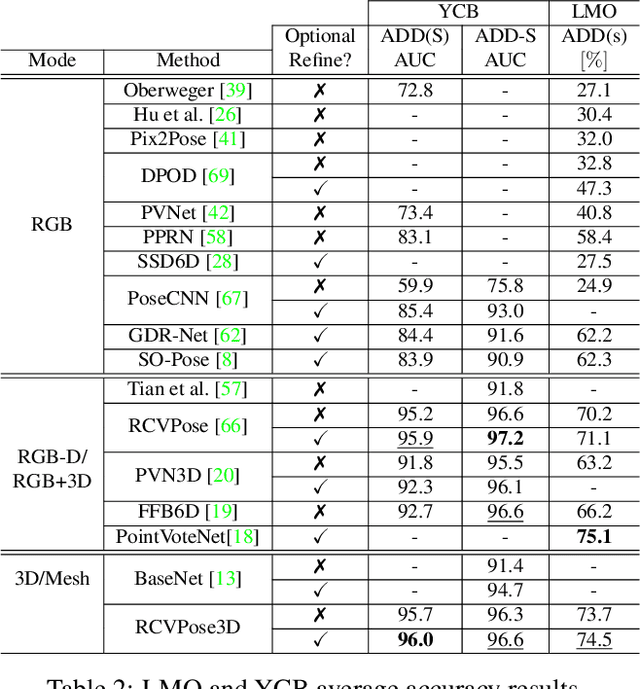

Abstract: We propose a novel keypoint voting 6DoF object pose estimation method that takes pure unordered point cloud geometry as input, without RGB information. The proposed cascaded keypoint voting method, called RCVPose3D, is based on a novel architecture that separates the task of semantic segmentation from that of keypoint regression, thereby increasing the effectiveness of both and improving the ultimate performance. The method also adds a pairwise constraint between different keypoints to the loss function when regressing the quantity for keypoint estimation, which is shown to be effective, as well as a novel Voter Confident Score that enhances both the learning and inference stages. RCVPose3D achieves state-of-the-art performance on the Occlusion LINEMOD (74.5%) and YCB-Video (96.9%) datasets, outperforming existing pure RGB and RGB-D based methods, and is competitive with RGB plus point cloud methods.

Vote from the Center: 6 DoF Pose Estimation in RGB-D Images by Radial Keypoint Voting

Apr 07, 2021

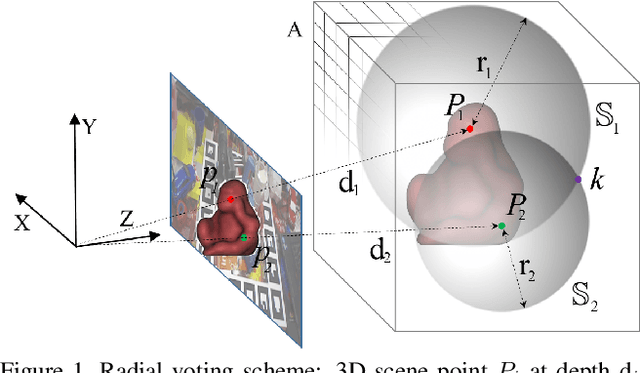

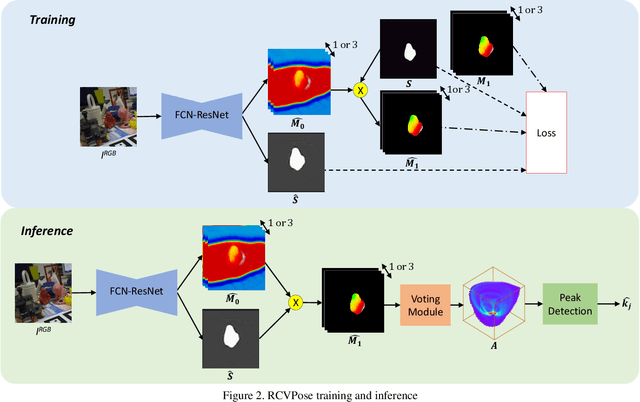

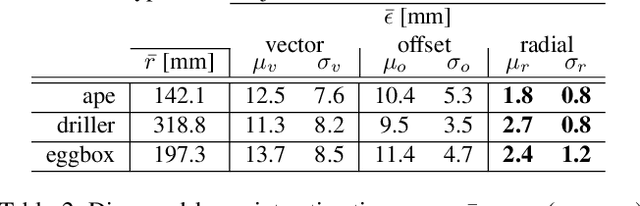

Abstract: We propose a novel keypoint voting scheme based on intersecting spheres that is more accurate than existing schemes and allows for a smaller set of more dispersed keypoints. The scheme forms the basis of the proposed RCVPose method for 6 DoF pose estimation of 3D objects in RGB-D data, which is particularly effective at handling occlusions. A CNN is trained to estimate the distance between the 3D point corresponding to the depth mode of each RGB pixel and each of a set of 3 dispersed keypoints defined in the object frame. At inference, a sphere with radius equal to this estimated distance is generated, centered at each 3D point. The surfaces of these spheres vote to increment a 3D accumulator space, the peaks of which indicate keypoint locations. The proposed radial voting scheme is more accurate than previous vector or offset schemes, and is robust to dispersed keypoints. Experiments demonstrate RCVPose to be highly accurate and competitive, achieving state-of-the-art results on the LINEMOD (99.7%) and YCB-Video (97.2%) datasets, and notably scoring +7.9% higher than previous methods on the challenging Occlusion LINEMOD dataset (71.1%).
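
The sphere-voting step described in this abstract can be sketched as follows. This is a minimal toy illustration, not the RCVPose implementation: the radii are computed exactly rather than estimated by a CNN, the accumulator is a small dense voxel grid, and the function name `radial_vote`, the grid parameters, and the sample points are all hypothetical.

```python
import math
from itertools import product

def radial_vote(points, radii, grid_min, voxel, n):
    """Vote sphere surfaces into an n*n*n voxel grid; return (peak center, votes)."""
    acc = {}
    for point, radius in zip(points, radii):
        for idx in product(range(n), repeat=3):
            # Center of voxel idx along each axis.
            center = tuple(grid_min + (i + 0.5) * voxel for i in idx)
            # A voxel receives a vote if the sphere surface passes through it,
            # approximated here as a shell of thickness one voxel.
            if abs(math.dist(center, point) - radius) <= voxel / 2:
                acc[idx] = acc.get(idx, 0) + 1
    best = max(acc, key=acc.get)
    peak = tuple(grid_min + (i + 0.5) * voxel for i in best)
    return peak, acc[best]

# Toy scene: one keypoint and six surface points placed around it.
keypoint = (0.05, -0.15, 0.25)
offsets = [(0.4, 0, 0), (-0.3, 0, 0), (0, 0.5, 0),
           (0, -0.35, 0), (0, 0, 0.45), (0, 0, -0.2)]
points = [tuple(k + o for k, o in zip(keypoint, off)) for off in offsets]
# Stand-in for the network output: exact point-to-keypoint distances.
radii = [math.dist(p, keypoint) for p in points]

peak, votes = radial_vote(points, radii, grid_min=-1.0, voxel=0.1, n=20)
```

Because every sphere passes through the true keypoint, the voxel containing it collects one vote per point and becomes the accumulator peak. With noisy radii, the peak would be broader, and the voxel size trades accuracy against memory and run time.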
