Yangshuo He

A PAC-Bayesian Analysis of Channel-Induced Degradation in Edge Inference

Jan 16, 2026

Abstract: In the emerging paradigm of edge inference, neural networks (NNs) are partitioned across distributed edge devices that collaboratively perform inference via wireless transmission. However, standard NNs are generally trained in a noiseless environment, creating a mismatch with the noisy channels encountered during edge deployment. In this paper, we address this issue by characterizing the channel-induced performance deterioration as a generalization error against unseen channels. We introduce an augmented NN model that incorporates channel statistics directly into the weight space, allowing us to derive PAC-Bayesian generalization bounds that explicitly quantify the impact of wireless distortion. We further provide closed-form expressions for practical channels to demonstrate the tractability of these bounds. Inspired by the theoretical results, we propose a channel-aware training algorithm that minimizes a surrogate objective based on the derived bound. Simulations show that the proposed algorithm can effectively improve inference accuracy by leveraging channel statistics, without end-to-end re-training.

Robustness in Wireless Distributed Learning: An Information-Theoretic Analysis

Jan 31, 2024

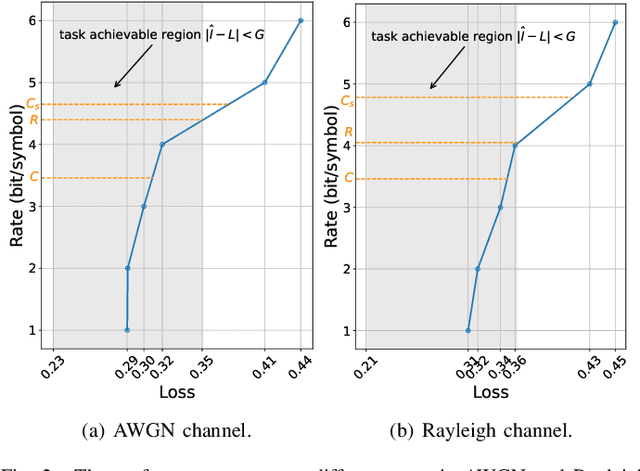

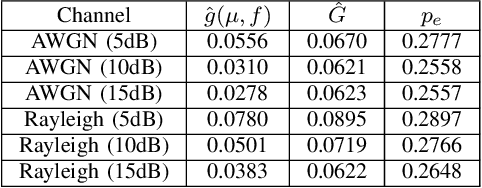

Abstract: In this paper, we take an information-theoretic approach to understanding robustness in wireless distributed learning. By measuring the difference in loss functions, we provide an upper bound on the performance deterioration due to imperfect wireless channels. Moreover, we characterize the transmission rate under task performance guarantees and introduce the channel capacity gain resulting from the inherent robustness of wireless distributed learning. An efficient algorithm for approximating the derived upper bound is established for practical use. Numerical simulations illustrate the effectiveness of our results.

Rate-Adaptive Coding Mechanism for Semantic Communications With Multi-Modal Data

May 18, 2023

Abstract: Recently, the ever-increasing demand for bandwidth in multi-modal communication systems requires a paradigm shift. Powered by deep learning, semantic communications are applied to multi-modal scenarios to boost communication efficiency and save communication resources. However, the existing end-to-end neural network (NN) based framework without the channel encoder/decoder is incompatible with modern digital communication systems. Moreover, most end-to-end designs are task-specific and require re-design and re-training for new tasks, which limits their applications. In this paper, we propose a distributed multi-modal semantic communication framework incorporating the conventional channel encoder/decoder. We adopt an NN-based semantic encoder and decoder to extract correlated semantic information contained in different modalities, including speech, text, and image. Based on the proposed framework, we further establish a general rate-adaptive coding mechanism for various types of multi-modal semantic tasks. In particular, we utilize unequal error protection based on semantic importance, which is derived by evaluating the distortion bound of each modality. We further formulate and solve an optimization problem that aims at minimizing inference delay while maintaining inference accuracy for semantic tasks. Numerical results show that the proposed mechanism outperforms both conventional communication and existing semantic communication systems in terms of task performance, inference delay, and deployment complexity.
