Yan Ni

Learning Agent Communication under Limited Bandwidth by Message Pruning

Dec 03, 2019

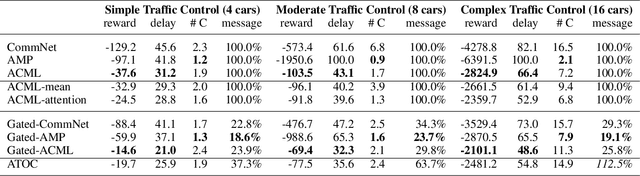

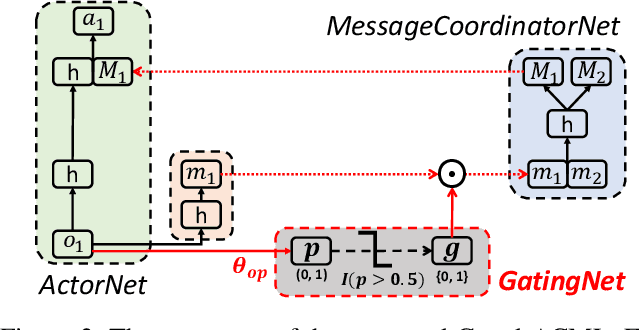

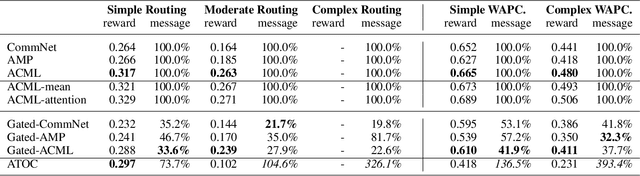

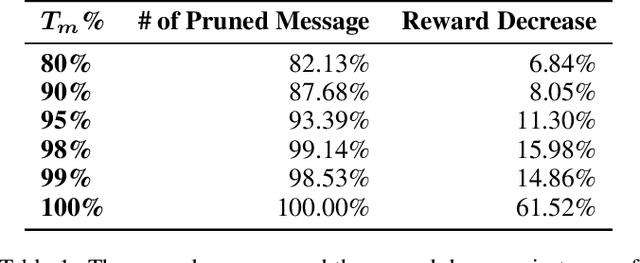

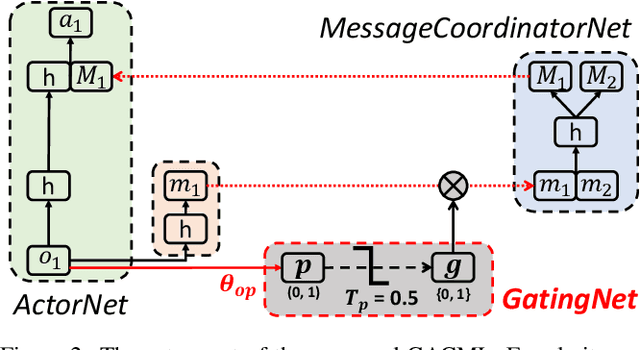

Abstract: Communication is a crucial factor for the big multi-agent world to stay organized and productive. Recently, Deep Reinforcement Learning (DRL) has been applied to learn the communication strategy and the control policy for multiple agents. However, the practical ***limited bandwidth*** in multi-agent communication has been largely ignored by the existing DRL methods. Specifically, many methods keep sending messages incessantly, which consumes too much bandwidth. As a result, they are inapplicable to multi-agent systems with limited bandwidth. To handle this problem, we propose a gating mechanism to adaptively prune less beneficial messages. We evaluate the gating mechanism on several tasks. Experiments demonstrate that it can prune many messages with little impact on performance. In fact, the performance may be greatly improved by pruning redundant messages. Moreover, the proposed gating mechanism is applicable to several previous methods, equipping them with the ability to address bandwidth-restricted settings.
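The abstract does not say how the gate is computed, so the following is only a minimal sketch of the general idea of gated message pruning, not the authors' method. It assumes each agent already has a scalar gate score in [0, 1] and that a message is sent only when that score reaches a fixed threshold. The function name `prune_messages`, the `gate_scores` input, and the `threshold` parameter are all hypothetical.

```python
from typing import List, Optional, Sequence, Tuple


def prune_messages(
    messages: Sequence[str],
    gate_scores: Sequence[float],
    threshold: float = 0.5,  # hypothetical cutoff, not taken from the paper
) -> Tuple[List[Optional[str]], float]:
    """Keep a message only when its gate score reaches the threshold.

    Returns the outgoing message per agent (None means the agent stays
    silent) and the fraction of messages that were pruned.
    """
    if len(messages) != len(gate_scores):
        raise ValueError("messages and gate_scores must have the same length")

    # An agent transmits only if its gate is "open" (score >= threshold).
    sent = [m if g >= threshold else None for m, g in zip(messages, gate_scores)]

    pruned = sum(1 for m in sent if m is None)
    ratio = pruned / len(sent) if sent else 0.0
    return sent, ratio


if __name__ == "__main__":
    outgoing, pruned_ratio = prune_messages(
        ["a", "b", "c", "d"], [0.9, 0.1, 0.4, 0.6]
    )
    print(outgoing, pruned_ratio)
```

In a learned setting the gate scores would presumably come from a trained network rather than being supplied by hand; here they are plain inputs so that the pruning step itself stays easy to read.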

Learning Multi-agent Communication under Limited-bandwidth Restriction for Internet Packet Routing

Feb 26, 2019

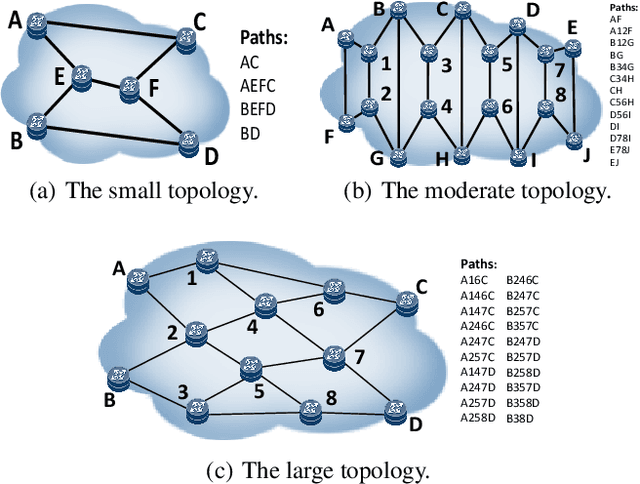

Abstract: Communication is an important factor for the big multi-agent world to stay organized and productive. Recently, the AI community has applied Deep Reinforcement Learning (DRL) to learn the communication strategy and the control policy for multiple agents. However, when implementing communication for real-world multi-agent applications, there is a more practical limited-bandwidth restriction, which has been largely ignored by existing DRL-based methods. Specifically, agents trained by most previous methods keep sending messages incessantly in every control cycle; because they emit too many messages, these methods are unsuitable for real-world systems that have limited bandwidth for transmitting messages. To handle this problem, we propose a gating mechanism to adaptively prune unprofitable messages. Results show that the gating mechanism can prune more than 80% of messages with little damage to performance. Moreover, our method outperforms several state-of-the-art DRL-based and rule-based methods by a large margin in both real-world packet routing tasks and four benchmark tasks.

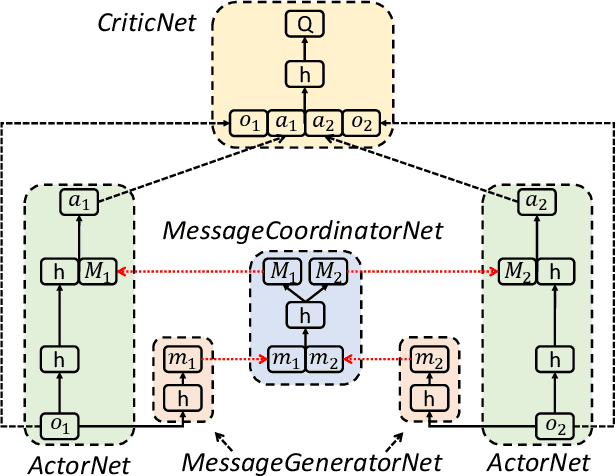

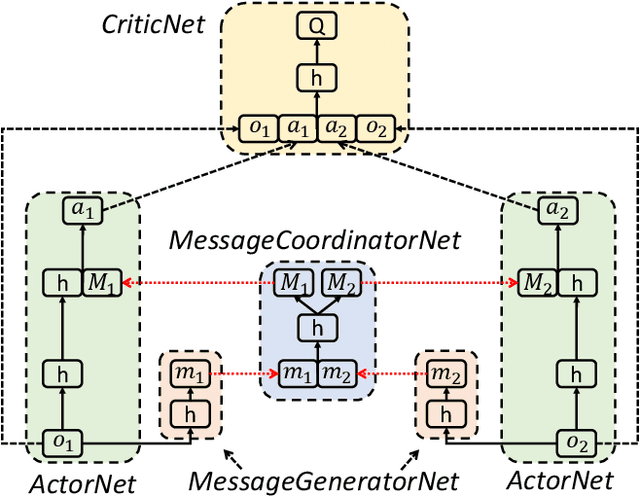

ACCNet: Actor-Coordinator-Critic Net for "Learning-to-Communicate" with Deep Multi-agent Reinforcement Learning

Oct 29, 2017

Abstract: Communication is a critical factor for the big multi-agent world to stay organized and productive. Typically, most previous multi-agent "learning-to-communicate" studies try to predefine the communication protocols or use technologies such as tabular reinforcement learning and evolutionary algorithms, which cannot generalize to changing environments or large collections of agents. In this paper, we propose an Actor-Coordinator-Critic Net (ACCNet) framework for solving the "learning-to-communicate" problem. ACCNet naturally combines the powerful actor-critic reinforcement learning technology with deep learning technology. It can efficiently learn the communication protocols even from scratch in partially observable environments. We demonstrate that ACCNet can achieve better results than several baselines in both continuous and discrete action space environments. We also analyse the learned protocols and discuss some design considerations.
