Yagiz Nalcakan

RASMD: RGB And SWIR Multispectral Driving Dataset for Robust Perception in Adverse Conditions

Apr 10, 2025

Abstract: Current autonomous driving algorithms heavily rely on the visible spectrum, which is prone to performance degradation in adverse conditions like fog, rain, snow, glare, and high contrast. Although other spectral bands like near-infrared (NIR) and long-wave infrared (LWIR) can enhance vision perception in such situations, they have limitations and lack large-scale datasets and benchmarks. Short-wave infrared (SWIR) imaging offers several advantages over NIR and LWIR. However, no publicly available large-scale datasets currently incorporate SWIR data for autonomous driving. To address this gap, we introduce the RGB and SWIR Multispectral Driving (RASMD) dataset, which comprises 100,000 synchronized and spatially aligned RGB-SWIR image pairs collected across diverse locations, lighting, and weather conditions. In addition, we provide a subset for RGB-SWIR translation and object detection annotations for a subset of challenging traffic scenarios to demonstrate the utility of SWIR imaging through experiments on both object detection and RGB-to-SWIR image translation. Our experiments show that combining RGB and SWIR data in an ensemble framework significantly improves detection accuracy compared to RGB-only approaches, particularly in conditions where visible-spectrum sensors struggle. We anticipate that the RASMD dataset will advance research in multispectral imaging for autonomous driving and robust perception systems.
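
The abstract does not say how the RGB and SWIR detectors are combined in the ensemble. As a rough illustration only, the sketch below assumes a simple late-fusion scheme: detections from both modalities are pooled and de-duplicated with class-aware non-maximum suppression. The function names, the detection tuple layout, and the IoU threshold of 0.5 are all hypothetical choices, not details taken from the paper.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]   # (x1, y1, x2, y2) in pixels
Detection = Tuple[Box, float, str]        # (box, confidence, class label)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def fuse_detections(rgb: List[Detection],
                    swir: List[Detection],
                    iou_thr: float = 0.5) -> List[Detection]:
    """Pool detections from both modalities and apply class-aware NMS.

    Assumes the image pairs are spatially aligned, so boxes from the two
    detectors share one coordinate frame.
    """
    pooled = sorted(rgb + swir, key=lambda d: d[1], reverse=True)
    kept: List[Detection] = []
    for det in pooled:
        # Keep a detection unless a higher-scoring box of the same class
        # already covers roughly the same region.
        if all(det[2] != k[2] or iou(det[0], k[0]) < iou_thr for k in kept):
            kept.append(det)
    return kept
```

With this scheme, an object missed by the RGB detector (for example in fog) can still survive in the fused output if the SWIR detector finds it, while objects seen by both are reported once.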

Pix2Next: Leveraging Vision Foundation Models for RGB to NIR Image Translation

Sep 25, 2024

Abstract: This paper proposes Pix2Next, a novel image-to-image translation framework designed to address the challenge of generating high-quality Near-Infrared (NIR) images from RGB inputs. Our approach leverages a state-of-the-art Vision Foundation Model (VFM) within an encoder-decoder architecture, incorporating cross-attention mechanisms to enhance feature integration. This design captures detailed global representations and preserves essential spectral characteristics, treating RGB-to-NIR translation as more than a simple domain transfer problem. A multi-scale PatchGAN discriminator ensures realistic image generation at various detail levels, while carefully designed loss functions couple global context understanding with local feature preservation. We performed experiments on the RANUS dataset to demonstrate Pix2Next's advantages in quantitative metrics and visual quality, improving the FID score by 34.81% compared to existing methods. Furthermore, we demonstrate the practical utility of Pix2Next by showing improved performance on a downstream object detection task using generated NIR data to augment limited real NIR datasets. The proposed approach enables the scaling up of NIR datasets without additional data acquisition or annotation efforts, potentially accelerating advancements in NIR-based computer vision applications.

Monocular Vision-based Prediction of Cut-in Maneuvers with LSTM Networks

Mar 21, 2022

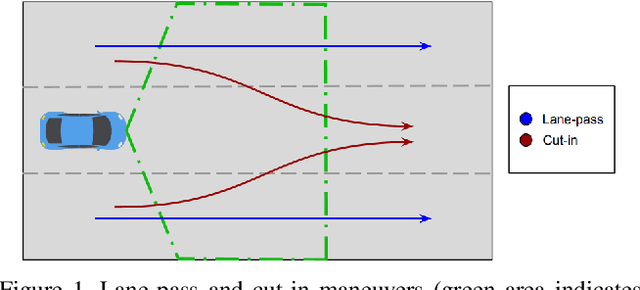

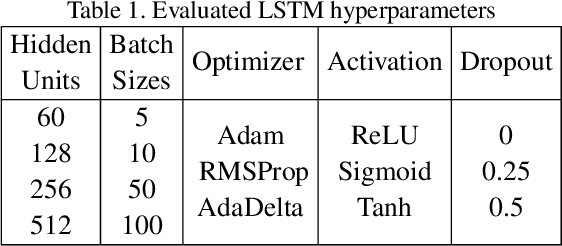

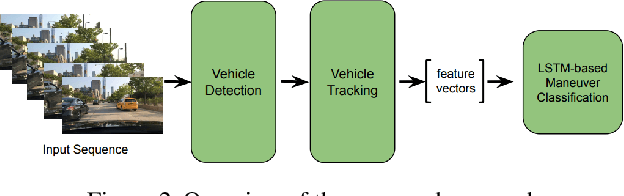

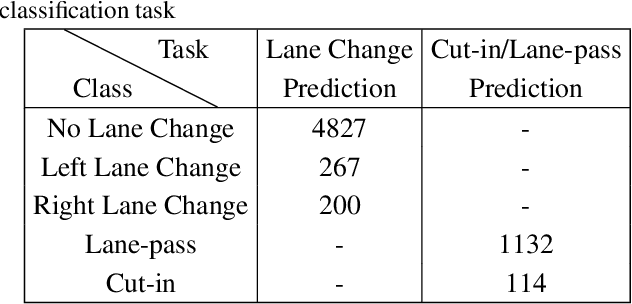

Abstract: Advanced driver assistance and automated driving systems should be capable of predicting and avoiding dangerous situations. This study proposes a method to predict potentially dangerous cut-in maneuvers happening in the ego lane. We follow a computer vision-based approach that only employs a single in-vehicle RGB camera, and we classify the target vehicle's maneuver based on the recent video frames. Our algorithm consists of a CNN-based vehicle detection and tracking step and an LSTM-based maneuver classification step. It is more computationally efficient than other vision-based methods since it exploits a small number of features for the classification step rather than feeding CNNs with RGB frames. We evaluated our approach on a publicly available driving dataset and a lane change detection dataset. We obtained 0.9585 accuracy with side-aware two-class (cut-in vs. lane-pass) classification models. Experiment results also reveal that our approach outperforms state-of-the-art approaches when used for lane change detection.
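
The abstract does not list which features are passed to the LSTM. The sketch below is therefore only an assumption about what "a small number of features" could look like: each tracked bounding box is reduced to a normalized center and size, and the most recent frames are packed into a fixed-length sequence suitable for a sequence classifier. The function names, the four-value feature layout, and the padding strategy are hypothetical.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]        # (x1, y1, x2, y2) in pixels
Feature = Tuple[float, float, float, float]    # (cx, cy, w, h), all in [0, 1]


def track_features(boxes: Sequence[Box],
                   frame_w: float,
                   frame_h: float) -> List[Feature]:
    """Turn one vehicle's per-frame boxes into normalized (cx, cy, w, h)."""
    feats: List[Feature] = []
    for x1, y1, x2, y2 in boxes:
        cx = (x1 + x2) / 2 / frame_w
        cy = (y1 + y2) / 2 / frame_h
        w = (x2 - x1) / frame_w
        h = (y2 - y1) / frame_h
        feats.append((cx, cy, w, h))
    return feats


def last_window(feats: Sequence[Feature], length: int) -> List[Feature]:
    """Return the most recent `length` steps, left-padded with the first step.

    Padding by repetition is an illustrative choice so that short tracks
    still yield a fixed-length input sequence.
    """
    if not feats:
        raise ValueError("track has no frames")
    window = list(feats[-length:])
    padding = [window[0]] * (length - len(window))
    return padding + window
```

A sequence built this way holds four numbers per frame, which is far cheaper to process than raw RGB frames and matches the efficiency argument made in the abstract.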
