Xin-Jian Xu

Overlap-aware meta-learning attention to enhance hypergraph neural networks for node classification

Mar 11, 2025

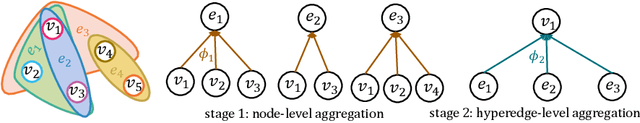

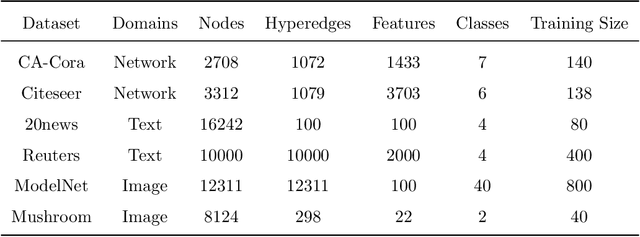

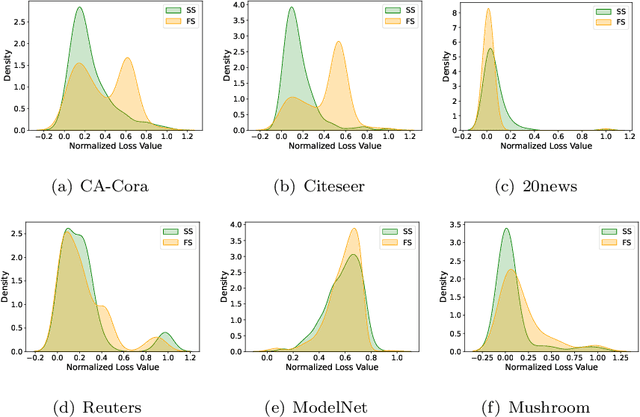

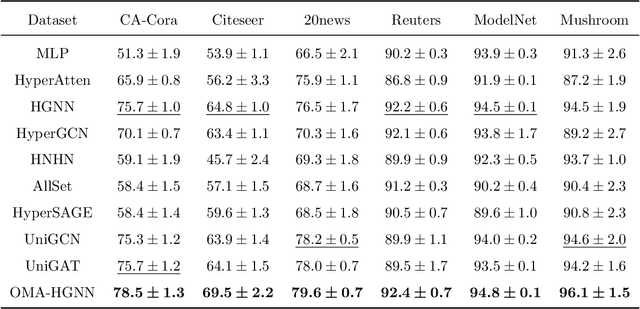

Abstract: Although hypergraph neural networks (HGNNs) have emerged as a powerful framework for analyzing complex datasets, their practical performance often remains limited. On one hand, existing networks typically employ a single type of attention mechanism, focusing on either structural or feature similarities during message passing. On the other hand, assuming that all nodes in current hypergraph models have the same level of overlap may lead to suboptimal generalization. To overcome these limitations, we propose a novel framework, overlap-aware meta-learning attention for hypergraph neural networks (OMA-HGNN). First, we introduce a hypergraph attention mechanism that integrates both structural and feature similarities. Specifically, we linearly combine their respective losses with weighted factors for the HGNN model. Second, we partition nodes into different tasks based on their diverse overlap levels and develop a multi-task Meta-Weight-Net (MWN) to determine the corresponding weighted factors. Third, we jointly train the internal MWN model with the losses from the external HGNN model and train the external model with the weighted factors from the internal model. To evaluate the effectiveness of OMA-HGNN, we conducted experiments on six real-world datasets and benchmarked its performance against nine state-of-the-art methods for node classification. The results demonstrate that OMA-HGNN excels in learning superior node representations and outperforms these baselines.
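The first two steps of the abstract (linearly combining structural and feature losses, with weighting factors that depend on a node's overlap level) can be illustrated with a minimal sketch. Everything named here is an assumption made for illustration: overlap is taken to be the number of hyperedges a node belongs to, the `thresholds` that define the tasks are arbitrary, and the per-task weights are fixed placeholders standing in for the outputs of the Meta-Weight-Net, which the abstract does not specify.

```python
from typing import Dict, List, Sequence, Tuple


def node_overlap(hyperedges: Sequence[Sequence[int]], num_nodes: int) -> List[int]:
    """Count the hyperedges each node belongs to (an assumed notion of overlap)."""
    counts = [0] * num_nodes
    for edge in hyperedges:
        for v in set(edge):  # ignore repeated nodes inside one hyperedge
            counts[v] += 1
    return counts


def partition_by_overlap(overlap: Sequence[int], thresholds: Sequence[int]) -> List[int]:
    """Assign each node a task index: the number of thresholds its overlap exceeds."""
    return [sum(o > t for t in thresholds) for o in overlap]


def combined_loss(
    struct_loss: Sequence[float],
    feat_loss: Sequence[float],
    tasks: Sequence[int],
    task_weights: Dict[int, Tuple[float, float]],
) -> float:
    """Mean over nodes of w_s * structural loss + w_f * feature loss.

    task_weights maps a task index to (w_s, w_f). In the paper these factors
    come from a learned model; here they are hypothetical constants.
    """
    total = 0.0
    for ls, lf, t in zip(struct_loss, feat_loss, tasks):
        w_s, w_f = task_weights[t]
        total += w_s * ls + w_f * lf
    return total / len(tasks)


# Toy hypergraph with 4 nodes and 3 hyperedges.
hyperedges = [[0, 1, 2], [1, 2, 3], [2, 3]]
overlap = node_overlap(hyperedges, num_nodes=4)
tasks = partition_by_overlap(overlap, thresholds=[1, 2])
weights = {0: (1.0, 0.0), 1: (0.5, 0.5), 2: (0.0, 1.0)}  # placeholder factors
loss = combined_loss([1.0] * 4, [2.0] * 4, tasks, weights)
```

In this toy setup, low-overlap nodes lean entirely on the structural loss and high-overlap nodes on the feature loss; that choice is arbitrary and only shows where learned, task-specific factors would plug in.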

Recent Advances in Hypergraph Neural Networks

Mar 11, 2025

Abstract: The growing interest in hypergraph neural networks (HGNNs) is driven by their capacity to capture the complex relationships and patterns within hypergraph-structured data across various domains, including computer vision, complex networks, and natural language processing. This paper comprehensively reviews recent advances in HGNNs and presents a taxonomy of mainstream models based on their architectures: hypergraph convolutional networks (HGCNs), hypergraph attention networks (HGATs), hypergraph autoencoders (HGAEs), hypergraph recurrent networks (HGRNs), and deep hypergraph generative models (DHGGMs). For each category, we delve into its practical applications, mathematical mechanisms, literature contributions, and open problems. Finally, we discuss some common challenges and promising research directions. This paper aspires to be a helpful resource that provides guidance for future research and applications of HGNNs.

Multi-view Spectral Clustering on the Grassmannian Manifold With Hypergraph Representation

Mar 08, 2025

Abstract: Graph-based multi-view spectral clustering methods have achieved notable progress recently, yet they often either oversimplify pairwise relationships or struggle with inefficient spectral decompositions in high-dimensional Euclidean spaces. In this paper, we introduce a novel approach that begins by generating hypergraphs through sparse representation learning from data points. Based on the generated hypergraph, we propose an optimization function with orthogonality constraints for multi-view hypergraph spectral clustering, which incorporates spectral clustering for each view and ensures consistency across different views. In Euclidean space, solving the orthogonality-constrained optimization problem may yield local maxima and approximation errors. Innovatively, we transform this problem into an unconstrained form on the Grassmannian manifold. Finally, we devise an alternating iterative Riemannian optimization algorithm to solve the problem. To validate the effectiveness of the proposed algorithm, we test it on four real-world multi-view datasets and compare its performance with seven state-of-the-art multi-view clustering algorithms. The experimental results demonstrate that our method outperforms the baselines in terms of clustering performance due to its superior low-dimensional and resilient feature representation.
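For orientation, the step from an orthogonality-constrained problem to an unconstrained one on the Grassmannian can be written down for the generic single-view case. This is the standard spectral clustering relaxation, not necessarily the paper's exact multi-view objective; the symbols $L$ (a hypergraph Laplacian on $n$ points), $F$ (the embedding matrix), and $k$ (the number of clusters) are assumptions introduced here.

$$
\min_{F \in \mathbb{R}^{n \times k}} \operatorname{tr}\!\left(F^{\top} L F\right)
\quad \text{subject to} \quad F^{\top} F = I_k .
$$

For any orthogonal $Q \in \mathbb{R}^{k \times k}$, the objective is unchanged under $F \mapsto FQ$:

$$
\operatorname{tr}\!\left((FQ)^{\top} L (FQ)\right)
= \operatorname{tr}\!\left(Q^{\top} F^{\top} L F Q\right)
= \operatorname{tr}\!\left(F^{\top} L F\right),
$$

so the value depends only on the subspace spanned by the columns of $F$. The problem can therefore be posed over equivalence classes $[F]$, that is, as an unconstrained problem on the Grassmannian manifold of $k$-dimensional subspaces of $\mathbb{R}^{n}$:

$$
\min_{[F] \in \mathrm{Gr}(k, n)} \operatorname{tr}\!\left(F^{\top} L F\right).
$$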
