Xilian Li

Visual Question Rewriting for Increasing Response Rate

Jun 04, 2021

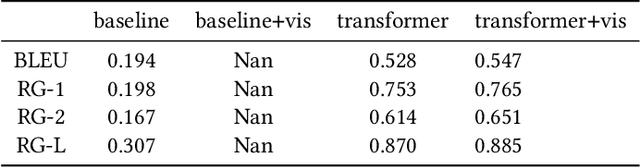

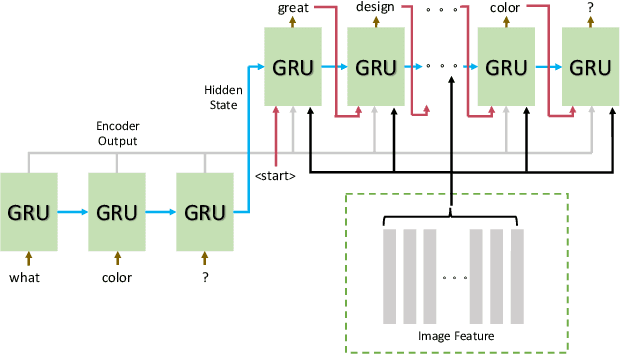

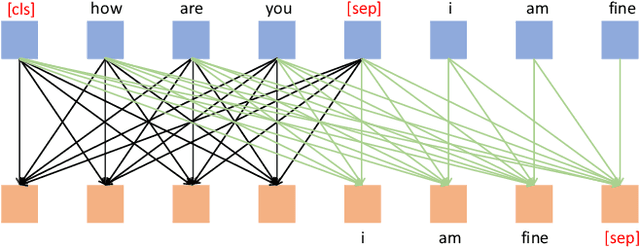

Abstract:When a human asks questions online, or when a conversational virtual agent asks human questions, questions triggering emotions or with details might more likely to get responses or answers. we explore how to automatically rewrite natural language questions to improve the response rate from people. In particular, a new task of Visual Question Rewriting(VQR) task is introduced to explore how visual information can be used to improve the new questions. A data set containing around 4K bland questions, attractive questions and images triples is collected. We developed some baseline sequence to sequence models and more advanced transformer based models, which take a bland question and a related image as input and output a rewritten question that is expected to be more attractive. Offline experiments and mechanical Turk based evaluations show that it is possible to rewrite bland questions in a more detailed and attractive way to increase the response rate, and images can be helpful.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge