Xiatao Kang

A pruning method based on the dissimilarity of angle among channels and filters

Oct 29, 2022

Abstract: Convolutional Neural Networks (CNNs) are increasingly used in various fields, and their computation and memory demands are growing significantly. Network compression emerged to make them applicable under constrained conditions such as embedded applications, and among compression techniques, network pruning has attracted particular attention. In this paper, we encode the convolutional network to obtain the similarity of different encoding nodes, and evaluate the connectivity-power among convolutional kernels on the basis of that similarity. We then impose different levels of penalty according to the connectivity-power. We also propose Channel Pruning based on the Dissimilarity of Angle (DACP): we first train a sparse model with a GL penalty, then impose an angle dissimilarity constraint on the channels and filters of the convolutional network to obtain a sparser structure. Experiments demonstrate the effectiveness of our method. On CIFAR-10, we reduce FLOPs by 66.86% on VGG-16 with 93.31% accuracy after pruning, where FLOPs denotes the number of floating-point operations of the model. On ResNet-32, we reduce FLOPs by 58.46% with 91.76% accuracy after pruning.
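The abstract does not spell out how the angle between filters is measured, so the following is only a minimal sketch of one plausible reading: flatten each filter into a vector and take the angle given by the cosine similarity. The function names `flatten` and `angle_between`, the toy 2x2 filters, and the convention for zero-norm filters are all assumptions for illustration, not the DACP formulation itself.

```python
import math

def flatten(filt):
    """Flatten a nested list (one filter) into a flat list of floats."""
    if isinstance(filt, (int, float)):
        return [float(filt)]
    out = []
    for item in filt:
        out.extend(flatten(item))
    return out

def angle_between(f1, f2):
    """Angle in radians between two filters, treated as flat vectors."""
    a, b = flatten(f1), flatten(f2)
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        # Assumed convention: an all-zero filter is orthogonal to everything.
        return math.pi / 2
    # Clamp to [-1, 1] so rounding error cannot push acos out of its domain.
    cos = max(-1.0, min(1.0, dot / (norm_a * norm_b)))
    return math.acos(cos)

# Hypothetical toy filters (2x2, single input channel).
f0 = [[1.0, 0.0], [0.0, 1.0]]
f1 = [[2.0, 0.0], [0.0, 2.0]]   # scaled copy of f0: angle close to 0
f2 = [[0.0, 1.0], [-1.0, 0.0]]  # orthogonal to f0: angle of pi/2

angle_similar = angle_between(f0, f1)
angle_orthogonal = angle_between(f0, f2)
```

Under this reading, a pair of filters with a small angle (such as `f0` and `f1`) is nearly redundant, while a large angle indicates that the filters capture dissimilar directions.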

Neural Network Panning: Screening the Optimal Sparse Network Before Training

Sep 27, 2022

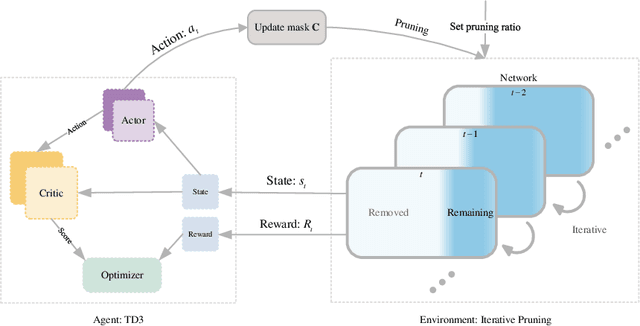

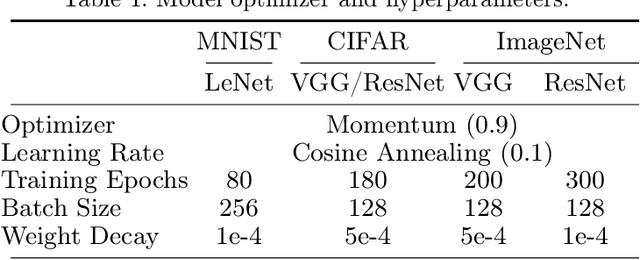

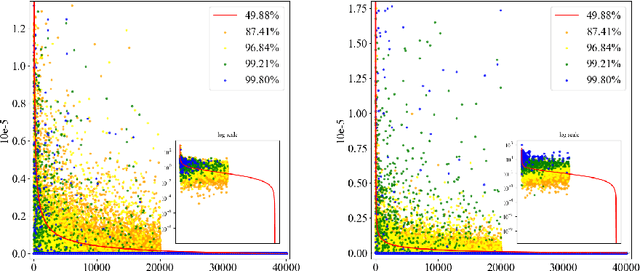

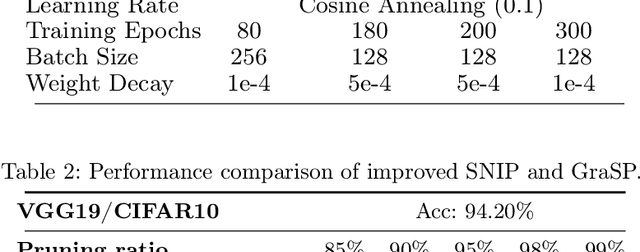

Abstract: Pruning neural networks before training not only compresses the original models but also accelerates the training phase, which has substantial application value. Current work focuses on fine-grained pruning, which uses metrics to compute weight scores for weight screening, and has extended from the initial single-order pruning to iterative pruning. Based on these works, we argue that network pruning can be summarized as an expressive force transfer process among weights, in which the reserved weights take on the expressive force of the removed ones in order to maintain the performance of the original network. To achieve optimal expressive force scheduling, we propose a pruning-before-training scheme called Neural Network Panning, which guides expressive force transfer through multi-index and multi-process steps, and we design a reinforcement-learning-based panning agent to automate the process. Experimental results show that Panning performs better than various existing pruning-before-training methods.
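As a rough illustration of the score-based weight screening mentioned in the abstract, the sketch below builds a keep/remove mask from per-weight scores in a single pass. The score used here (absolute weight value) is only a stand-in chosen for simplicity; it is not Panning's multi-index metric, and the reinforcement-learning agent is not modeled. The function name `prune_mask` and the example weights are hypothetical.

```python
def prune_mask(scores, sparsity):
    """Return a 0/1 mask that keeps the highest-scoring (1 - sparsity) fraction."""
    n_remove = int(round(sparsity * len(scores)))
    n_keep = len(scores) - n_remove
    # Indices sorted from highest score to lowest.
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    mask = [0] * len(scores)
    for i in order[:n_keep]:
        mask[i] = 1
    return mask

# Hypothetical initial weights of a tiny layer.
weights = [0.5, -0.1, 0.03, -0.8, 0.2, 0.01]

# Stand-in score: weight magnitude (an assumption, not the paper's metric).
scores = [abs(w) for w in weights]

mask = prune_mask(scores, sparsity=0.5)
pruned = [w * m for w, m in zip(weights, mask)]
```

An iterative variant would call `prune_mask` several times with gradually increasing sparsity, recomputing the scores on the surviving weights between rounds.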
