Xiaoxuan Xu

A Quantum Tunneling and Bio-Phototactic Driven Enhanced Dwarf Mongoose Optimizer for UAV Trajectory Planning and Engineering Problem

Nov 12, 2025

Abstract: With the widespread adoption of unmanned aerial vehicles (UAVs), effective path planning has become increasingly important. Although traditional search methods have been extensively applied, metaheuristic algorithms have gained popularity due to their efficiency and problem-specific heuristics. However, challenges such as premature convergence and lack of solution diversity still hinder their performance in complex scenarios. To address these issues, this paper proposes an Enhanced Multi-Strategy Dwarf Mongoose Optimization (EDMO) algorithm, tailored for three-dimensional UAV trajectory planning in dynamic and obstacle-rich environments. EDMO integrates three novel strategies: (1) a Dynamic Quantum Tunneling Optimization Strategy (DQTOS) to enable particles to probabilistically escape local optima; (2) a Bio-phototactic Dynamic Focusing Search Strategy (BDFSS) inspired by microbial phototaxis for adaptive local refinement; and (3) an Orthogonal Lens Opposition-Based Learning (OLOBL) strategy to enhance global exploration through structured dimensional recombination. EDMO is benchmarked on 39 standard test functions from CEC2017 and CEC2020, outperforming 14 advanced algorithms in convergence speed, robustness, and optimization accuracy. Furthermore, real-world validations on UAV three-dimensional path planning and three engineering design tasks confirm its practical applicability and effectiveness in field robotics missions requiring intelligent, adaptive, and time-efficient planning.
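As a rough illustration of the opposition-based idea behind OLOBL, the sketch below uses the lens-imaging opposition formula commonly given in the metaheuristics literature. The abstract does not state the authors' exact formulation, so the function name `lens_opposition`, the scaling factor `k`, and the bounds are assumptions, and the orthogonal recombination step is omitted.

```python
import numpy as np

def lens_opposition(x, lower, upper, k=1.0):
    """Return the lens-imaging opposite of x within [lower, upper].

    Assumed formula: x* = (a + b) / 2 + (a + b) / (2k) - x / k.
    With k = 1 this reduces to classic opposition, a + b - x.
    """
    x = np.asarray(x, dtype=float)
    mid = (np.asarray(lower, dtype=float) + np.asarray(upper, dtype=float)) / 2.0
    # Equivalent to mid + (mid - x) / k: reflect x about the midpoint,
    # shrinking the reflected offset by the factor k.
    return mid + mid / k - x / k

# Hypothetical 2-D candidate in the box [0, 10]^2.
candidate = np.array([1.0, 4.0])
opposite = lens_opposition(candidate, 0.0, 10.0, k=2.0)
```

For `k >= 1` the opposite point stays inside the bounds, so a typical use is to evaluate both the candidate and its opposite and keep whichever scores better.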

Towards Context-aware Convolutional Network for Image Restoration

Dec 15, 2024

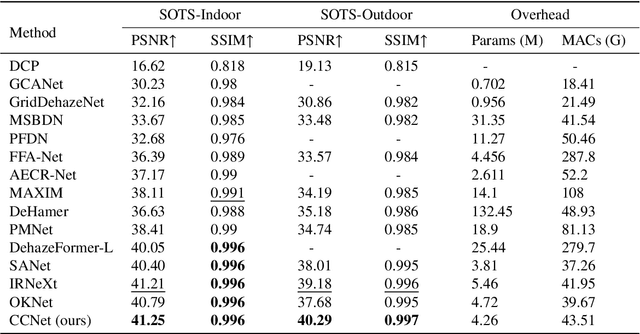

Abstract: Image restoration (IR) is a long-standing task to recover a high-quality image from its corrupted observation. Recently, transformer-based algorithms and some attention-based convolutional neural networks (CNNs) have presented promising results on several IR tasks. However, existing convolutional residual building modules for IR have limited ability to map inputs into high-dimensional and non-linear feature spaces, and their local receptive fields have difficulty capturing long-range context information as Transformers do. Besides, CNN-based attention modules for IR either face static abundant parameters or have limited receptive fields. To address the first issue, we propose an efficient residual star module (ERSM) that includes a context-aware "star operation" (element-wise multiplication) to contextually map features into exceedingly high-dimensional and non-linear feature spaces, which greatly enhances representation learning. To address the second issue and further boost the extraction of contextual information, we propose a large dynamic integration module (LDIM), which possesses an extremely large receptive field. Thus, LDIM can dynamically and efficiently integrate more contextual information, which further improves the reconstruction performance significantly. Integrating ERSM and LDIM into a U-shaped backbone, we propose a context-aware convolutional network (CCNet) with powerful learning ability for contextual high-dimensional mapping and abundant contextual information. Extensive experiments show that our CCNet with low model complexity achieves superior performance compared to other state-of-the-art IR methods on several IR tasks, including image dehazing, image motion deblurring, and image desnowing.
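The "star operation" mentioned above is an element-wise multiplication of two transformed copies of the same features. The following is a minimal sketch of that generic idea only, not the ERSM design: the function name `star_operation` and the weight matrices `w1` and `w2` are hypothetical, and plain linear maps stand in for whatever layers the paper uses.

```python
import numpy as np

def star_operation(x, w1, w2):
    """Element-wise product of two linear projections of x.

    x: (n, d) features; w1, w2: (d, h) hypothetical projection weights.
    Each output entry is a sum over pairwise products x_i * x_j, so the
    result is quadratic in the input features.
    """
    return (x @ w1) * (x @ w2)

x = np.array([[1.0, 2.0]])
w1 = np.array([[1.0], [1.0]])   # projects x to 1 + 2 = 3
w2 = np.array([[2.0], [0.0]])   # projects x to 2 * 1 = 2
y = star_operation(x, w1, w2)   # 3 * 2 = 6
```

Because the output is quadratic in the input, doubling `x` quadruples `y`, which is one way to see that the multiplication mixes pairs of input features rather than acting linearly.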
