X. Flora Meng

Nonstochastic Bandits with Infinitely Many Experts

Feb 09, 2021

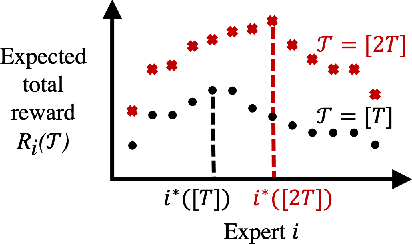

Abstract: We study the problem of nonstochastic bandits with infinitely many experts: a learner aims to maximize the total reward by taking actions sequentially based on bandit feedback while benchmarking against a countably infinite set of experts. We propose a variant of Exp4.P that, for finitely many experts, enables inference of correct expert rankings while preserving the order of the regret upper bound. We then incorporate the variant into a meta-algorithm that works on infinitely many experts. We prove a high-probability upper bound of $\tilde{\mathcal{O}} \big( i^*K + \sqrt{KT} \big)$ on the regret, up to polylog factors, where $i^*$ is the unknown position of the best expert, $K$ is the number of actions, and $T$ is the time horizon. We also provide an example of structured experts and discuss how to expedite learning in such cases. Our meta-learning algorithm achieves the tightest regret upper bound for the setting considered when $i^* = \tilde{\mathcal{O}} \big( \sqrt{T/K} \big)$. If a prior distribution is assumed to exist for $i^*$, the probability of satisfying a tight regret bound increases with $T$, and this increase can be fast.
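
The role of the threshold on $i^*$ can be checked directly from the stated bound. The following is only a reading of the bound in the abstract's notation, not the paper's proof; the constant $c > 0$ is introduced here for illustration, and polylog factors are suppressed. If $i^* \le c \sqrt{T/K}$, then

$$
i^* K + \sqrt{KT} \;\le\; c \sqrt{T/K} \cdot K + \sqrt{KT} \;=\; (c + 1) \sqrt{KT},
$$

so the term linear in $i^*$ is absorbed and the bound reduces to $\tilde{\mathcal{O}} \big( \sqrt{KT} \big)$. When $i^*$ grows faster than $\sqrt{T/K}$, the $i^* K$ term dominates instead.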
