Wu-Sheng Lu

Adaptive Nesterov Accelerated Distributional Deep Hedging for Efficient Volatility Risk Management

Feb 25, 2025

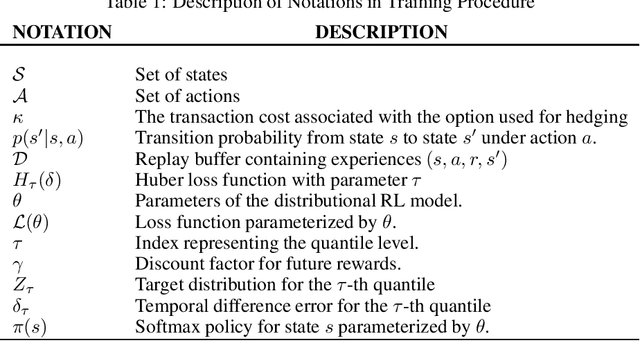

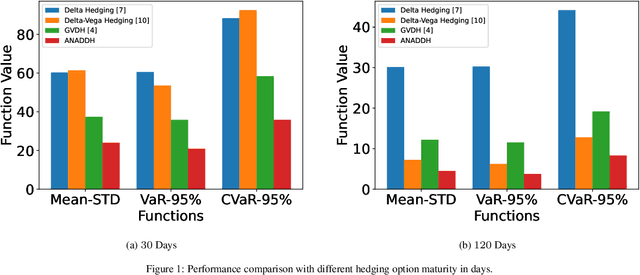

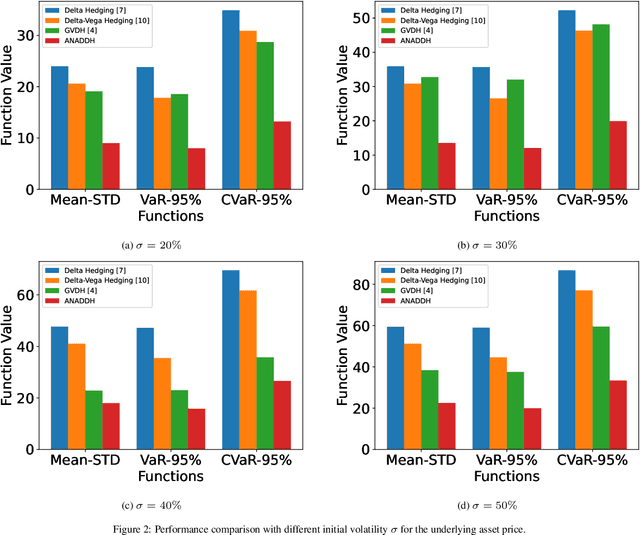

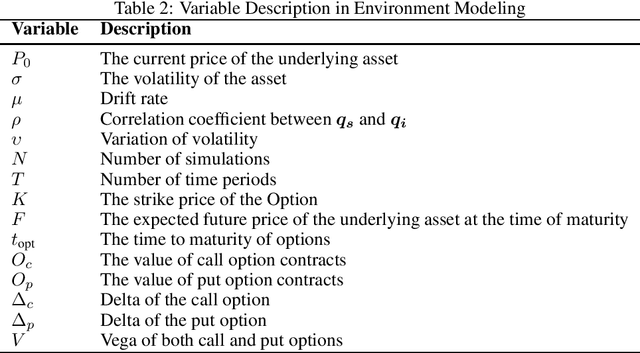

Abstract: In financial derivatives trading, managing volatility risk is crucial for protecting investment portfolios from market changes. Traditional Vega hedging strategies, which often rely on basic rule-based models, adapt poorly to rapidly changing market conditions. We introduce a new framework for dynamic Vega hedging, Adaptive Nesterov Accelerated Distributional Deep Hedging (ANADDH), which combines distributional reinforcement learning with a tailored design based on adaptive Nesterov acceleration. This approach improves learning in complex financial environments by modeling the distribution of hedging efficiency, providing a more accurate and responsive hedging strategy. The adaptive Nesterov acceleration refines gradient momentum adjustments, significantly enhancing the model's stability and speed of convergence. In empirical analysis and comparisons, our method demonstrates substantial performance gains over existing hedging techniques. Our results confirm that combining distributional reinforcement learning with the proposed optimization techniques improves financial risk management, and they highlight the practical benefits of advanced neural network architectures in the finance sector.
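For readers unfamiliar with the optimizer family named in the abstract, the following is a minimal sketch of classical (non-adaptive) Nesterov momentum on a small quadratic problem. It is not the ANADDH update: the abstract does not specify the adaptive rule, and the function name `nesterov_minimize`, the step size, the momentum value, and the test matrix are all assumptions made for illustration.

```python
import numpy as np

def nesterov_minimize(grad, x0, lr=0.1, momentum=0.9, steps=300):
    """Classical Nesterov accelerated gradient descent (illustrative only)."""
    x = np.asarray(x0, dtype=float)
    v = np.zeros_like(x)  # velocity (accumulated momentum)
    for _ in range(steps):
        # Evaluate the gradient at the look-ahead point, not at x itself.
        lookahead = x + momentum * v
        v = momentum * v - lr * grad(lookahead)
        x = x + v
    return x

# Hypothetical test problem: minimize 0.5 * x^T A x - b^T x, whose gradient is A x - b.
A = np.array([[3.0, 0.5], [0.5, 1.0]])
b = np.array([1.0, -2.0])
x_star = np.linalg.solve(A, b)  # exact minimizer, for comparison
x_hat = nesterov_minimize(lambda x: A @ x - b, x0=[0.0, 0.0])
```

The look-ahead gradient evaluation is what separates Nesterov momentum from plain heavy-ball momentum. An adaptive variant would presumably adjust `momentum` or `lr` during training, but how ANADDH does so is not stated here.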

Robust Federated Learning with Global Sensitivity Estimation for Financial Risk Management

Feb 24, 2025

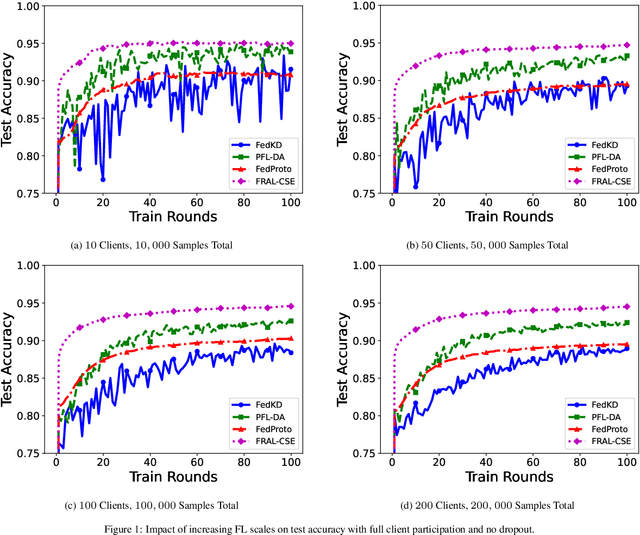

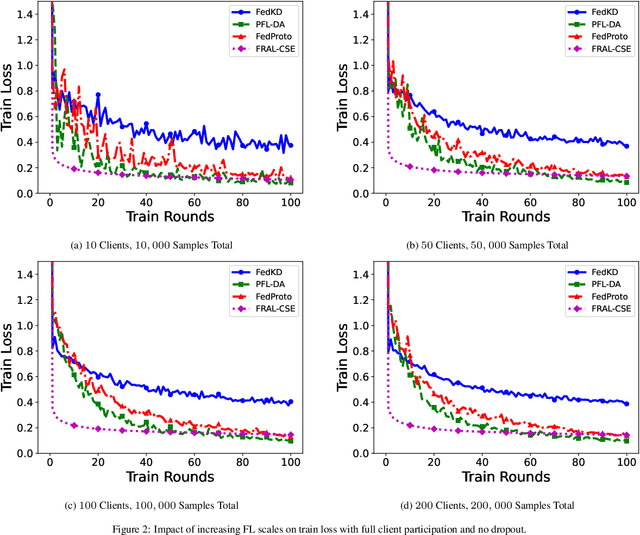

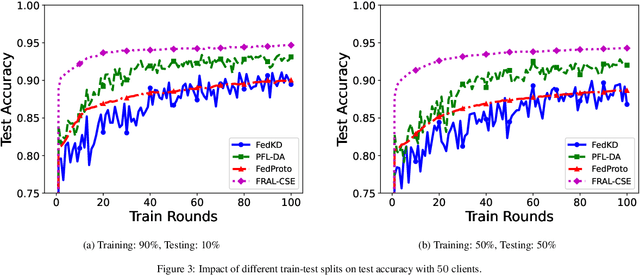

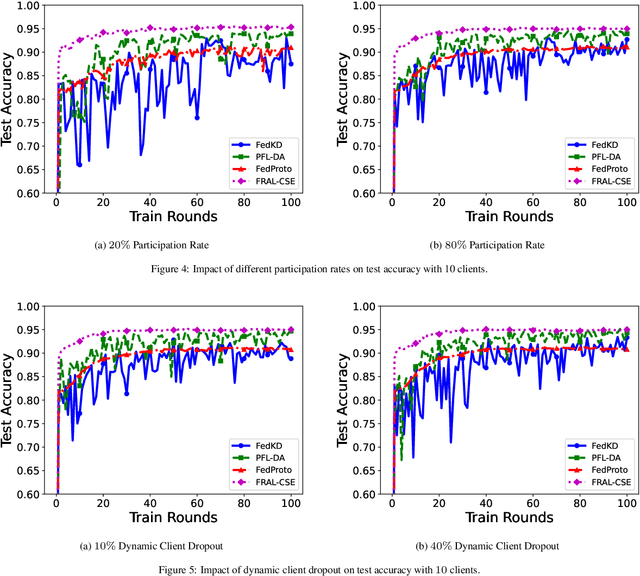

Abstract: In decentralized financial systems, robust and efficient Federated Learning (FL) is a promising way to handle diverse client environments and ensure resilience to systemic risks. We propose Federated Risk-Aware Learning with Central Sensitivity Estimation (FRAL-CSE), an FL framework designed to enhance scalability, stability, and robustness in collaborative financial decision-making. The framework's core innovation is a central acceleration mechanism guided by a quadratic, sensitivity-based approximation of global model dynamics. By leveraging local sensitivity information derived from robust risk measurements, FRAL-CSE performs a curvature-informed global update that incorporates second-order information without requiring repeated local re-evaluations, thereby enhancing training efficiency and improving optimization stability. Additionally, distortion risk measures are embedded into the training objectives to capture tail risks and ensure robustness against extreme scenarios. Extensive experiments validate the effectiveness of FRAL-CSE in accelerating convergence and improving resilience across heterogeneous datasets compared to state-of-the-art baselines.
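As background on the distortion risk measures mentioned in the abstract, the sketch below computes an empirical distortion risk measure from a sample of losses. It is a generic textbook construction, not the FRAL-CSE objective: the abstract does not say which distortion function is used, so the CVaR distortion, the function names `distortion_risk` and `cvar_distortion`, and the sample losses are assumptions chosen for illustration.

```python
import numpy as np

def distortion_risk(losses, g):
    """Empirical distortion risk measure for a distortion function g on [0, 1].

    With losses sorted in ascending order, the i-th smallest loss receives
    weight g((n - i + 1) / n) - g((n - i) / n).
    """
    x = np.sort(np.asarray(losses, dtype=float))
    n = x.size
    i = np.arange(1, n + 1)
    weights = g((n - i + 1) / n) - g((n - i) / n)
    return float(np.dot(weights, x))

def cvar_distortion(alpha):
    """Distortion function that yields CVaR at level alpha (assumed example)."""
    return lambda u: np.minimum(u / (1.0 - alpha), 1.0)

# Hypothetical sample of losses: 1, 2, ..., 10.
losses = np.arange(1.0, 11.0)
mean_risk = distortion_risk(losses, lambda u: u)           # identity distortion gives the plain mean
cvar_risk = distortion_risk(losses, cvar_distortion(0.8))  # average of the worst 20% of losses
```

The identity distortion weights every loss equally, while the CVaR distortion shifts all weight onto the largest losses, which is the sense in which such measures capture tail risk.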
