Woo-Sung Jung

Unsupervised embedding of trajectories captures the latent structure of mobility

Dec 04, 2020

Abstract: Human mobility and migration drive major societal phenomena such as the growth and evolution of cities, epidemics, economies, and innovation. Historically, human mobility has been strongly constrained by physical separation, that is, by geographic distance. However, geographic distance is becoming less relevant in an increasingly globalized world in which physical barriers are shrinking while linguistic, cultural, and historical relationships are becoming more important. As understanding mobility becomes critical for contemporary society, finding frameworks that can capture this complexity is of paramount importance. Here, using three distinct human trajectory datasets, we demonstrate that a neural embedding model can encode nuanced relationships between locations into a vector space, providing an effective measure of distance that reflects the multi-faceted structure of human mobility. Focusing on the case of scientific mobility, we show that embeddings of scientific organizations uncover cultural and linguistic relations, and even academic prestige, at multiple levels of granularity. Furthermore, the embedding vectors reveal universal relationships between organizational characteristics and their place in the global landscape of scientific mobility. The ability to learn scalable, dense, and meaningful representations of mobility directly from data can open up a new avenue for studying mobility across domains.
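The abstract describes the model only as "a neural embedding model". A common setup, assumed here rather than taken from the paper, is a word2vec-style skip-gram model that treats each trajectory as a sentence of location tokens, with distance between the learned vectors measured by cosine distance. The minimal sketch below shows only the two ends of such a pipeline: turning trajectories into (target, context) training pairs, and computing the cosine distance between two embedding vectors. The function names `skipgram_pairs` and `cosine_distance`, the `window` parameter, and the toy location labels are hypothetical; the training step itself is left out.

```python
import math
from typing import List, Sequence, Tuple


def skipgram_pairs(trajectories: List[Sequence[str]], window: int = 1) -> List[Tuple[str, str]]:
    """Turn each trajectory (an ordered list of locations) into (target, context)
    pairs, the input a skip-gram model is trained on (assumed setup)."""
    pairs: List[Tuple[str, str]] = []
    for traj in trajectories:
        for i, target in enumerate(traj):
            # Context = locations at most `window` steps before or after position i.
            lo, hi = max(0, i - window), min(len(traj), i + window + 1)
            for j in range(lo, hi):
                if j != i:
                    pairs.append((target, traj[j]))
    return pairs


def cosine_distance(u: Sequence[float], v: Sequence[float]) -> float:
    """1 - cosine similarity; 0 for vectors pointing the same way, 1 for orthogonal ones."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / norm


if __name__ == "__main__":
    # Hypothetical trajectory: a researcher moving between three organizations.
    print(skipgram_pairs([["org_A", "org_B", "org_C"]], window=1))
    print(cosine_distance([1.0, 0.0], [0.0, 1.0]))
```

With `window=1`, the toy trajectory yields the pairs `(org_A, org_B)`, `(org_B, org_A)`, `(org_B, org_C)`, and `(org_C, org_B)`: locations that co-occur in the same trajectories are pushed toward similar vectors, which is what lets the embedding distance reflect mobility rather than geography.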

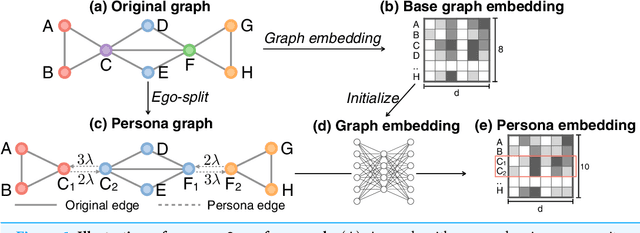

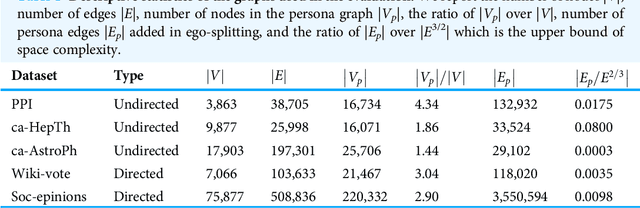

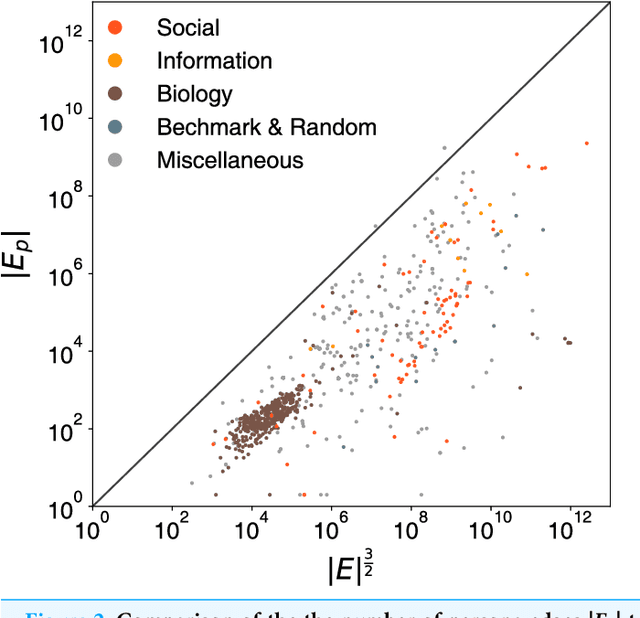

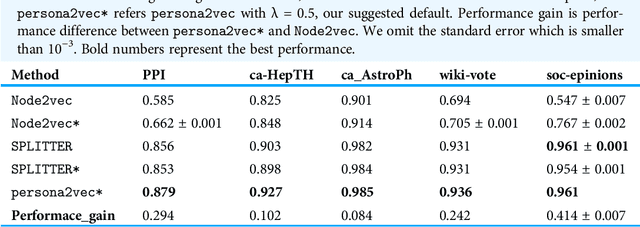

Persona2vec: A Flexible Multi-role Representations Learning Framework for Graphs

Jun 04, 2020

Abstract: Graph embedding techniques, which learn low-dimensional representations of a graph, are achieving state-of-the-art performance in many graph mining tasks. Most existing embedding algorithms assign a single vector to each node, implicitly assuming that a single representation is enough to capture all characteristics of the node. However, across many domains, it is common to observe pervasively overlapping community structure, where most nodes belong to multiple communities and play different roles depending on context. Here, we propose persona2vec, a graph embedding framework that efficiently learns multiple representations of nodes based on their structural contexts. Using link prediction-based evaluation, we show that our framework is significantly faster than the existing state-of-the-art model while achieving better performance.
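The abstract does not spell out how a node's multiple roles are found. One established way to obtain them, assumed here, is ego-splitting: split each node into "personas", one per cluster of its ego-network (the node's neighbors, with the node itself removed), and rewire every edge to the personas that own it. The minimal sketch below uses connected components as the local clustering step, which is an assumption; it builds only the persona graph, after which each persona could be embedded with any single-vector method. The names `ego_components` and `persona_graph` and the `node#k` persona labels are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple

Graph = Dict[str, Set[str]]  # undirected adjacency sets, no self-loops


def ego_components(graph: Graph, node: str) -> List[Set[str]]:
    """Connected components of `node`'s ego-network with `node` itself removed."""
    neighbors = graph[node]
    seen: Set[str] = set()
    comps: List[Set[str]] = []
    for start in sorted(neighbors):  # sorted for deterministic persona ids
        if start in seen:
            continue
        comp: Set[str] = set()
        stack = [start]
        seen.add(start)
        while stack:  # depth-first search restricted to the neighbors
            u = stack.pop()
            comp.add(u)
            for w in graph[u]:
                if w in neighbors and w not in seen:
                    seen.add(w)
                    stack.append(w)
        comps.append(comp)
    return comps


def persona_graph(graph: Graph) -> Graph:
    """Replace each node by one persona per ego-network component and rewire edges."""
    # persona_of[(u, v)] = the persona of u that keeps the edge to neighbor v
    persona_of: Dict[Tuple[str, str], str] = {}
    for node in graph:
        for k, comp in enumerate(ego_components(graph, node)):
            for nbr in comp:
                persona_of[(node, nbr)] = f"{node}#{k}"
    pg: Dict[str, Set[str]] = defaultdict(set)
    for u in graph:
        for v in graph[u]:
            pu, pv = persona_of[(u, v)], persona_of[(v, u)]
            pg[pu].add(pv)
            pg[pv].add(pu)
    return dict(pg)  # isolated nodes have no personas in this sketch


if __name__ == "__main__":
    # Two triangles that share node "c": c belongs to two communities.
    g = {"a": {"b", "c"}, "b": {"a", "c"}, "c": {"a", "b", "d", "e"},
         "d": {"c", "e"}, "e": {"c", "d"}}
    for persona, nbrs in sorted(persona_graph(g).items()):
        print(persona, sorted(nbrs))
```

In the toy graph, node `c` is split into `c#0` (linked to `a#0` and `b#0`) and `c#1` (linked to `d#0` and `e#0`), so its two community memberships can receive separate vectors, while every other node keeps a single persona.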
