Wojciech Dudek

A framework for training and benchmarking algorithms that schedule robot tasks

Aug 29, 2024

Abstract: Service robots work in a changing environment inhabited by exogenous agents such as humans. In the service robotics domain, many uncertainties result from exogenous actions and from inaccurate localisation of objects and of the robot itself. This makes the robot task scheduling problem highly challenging. In this article, we propose a benchmarking system for systematically assessing the performance of algorithms that schedule robot tasks. The robot environment incorporates a room map, furniture, transportable objects, and moving humans; the system defines interfaces for the algorithms, tasks to be executed, and evaluation methods. The system includes several tools that ease the generation of test scenarios for training AI-based scheduling algorithms and for statistical testing. For benchmarking purposes, a set of scenarios is chosen, and the performance of several scheduling algorithms is assessed. The system's source code is published to support the community in tuning and comparably assessing robot task scheduling algorithms for service robots.

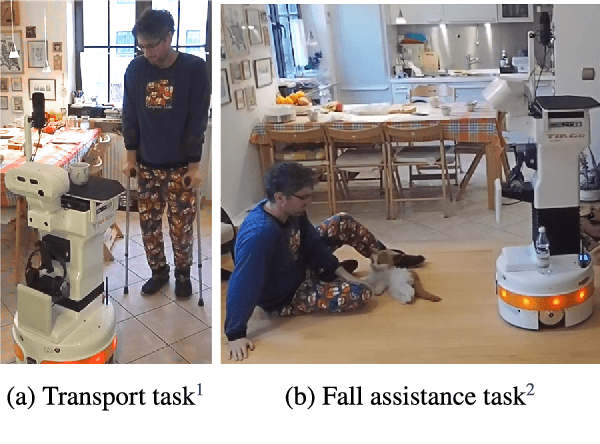

Rico: extended TIAGo robot towards up-to-date social and assistive robot usage scenarios

Jul 31, 2024

Abstract: Social and assistive robotics have vastly increased in popularity in recent years. Because of the wide range of uses, robots executing such tasks must be highly reliable and offer enough functions to cover multiple scenarios. This article describes Rico, a mobile, artificial intelligence-driven robotic platform. Its prior use in similar scenarios, the number of its capabilities, and the presented experiments should qualify it as a suitable arm-less platform for social and assistive applications.

Interpreting and learning voice commands with a Large Language Model for a robot system

Jul 31, 2024

Abstract: Robots are increasingly common in industry and daily life, such as in nursing homes where they can assist staff. A key challenge is developing intuitive interfaces for easy communication. The use of Large Language Models (LLMs) like GPT-4 has enhanced robot capabilities, allowing for real-time interaction and decision-making. This integration improves robots' adaptability and functionality. This project focuses on merging LLMs with databases to improve decision-making and enable knowledge acquisition for request interpretation problems.

SPSysML: A meta-model for quantitative evaluation of Simulation-Physical Systems

Mar 17, 2023

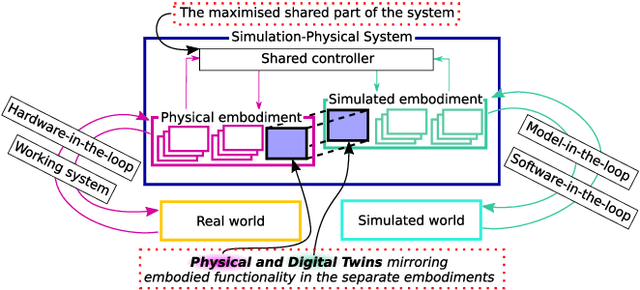

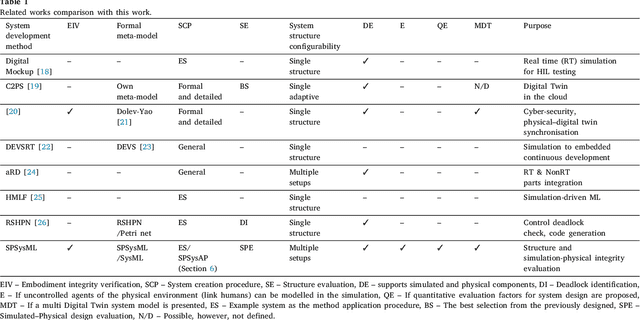

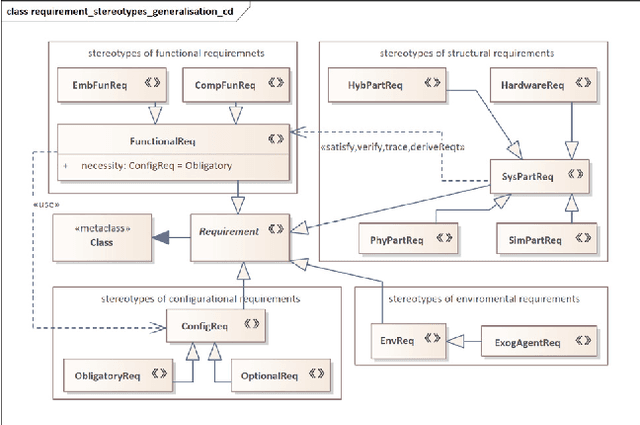

Abstract: Robotic systems are complex cyber-physical systems (CPS) commonly equipped with multiple sensors and effectors. Recent simulation methods enable the realisation of the Digital Twin (DT) concept. However, how DTs should be employed in robotic system development, e.g. for in-development testing, is unclear. During system development, its parts evolve from simulated mockups to physical parts that run software deployed on the actual hardware. Therefore, a design tool and a flexible development procedure ensuring the integrity of the simulated and physical parts are required. We aim to maximise the integration between a CPS's simulated and physical parts in various setups. The better the integration, the better the simulation-based testing coverage of the physical part (hardware and software). We propose a Domain Specification Language (DSL) based on Systems Modeling Language (SysML) that we refer to as SPSysML (Simulation-Physical System Modeling Language). SPSysML defines the taxonomy of a Simulation-Physical System (SPSys), being a CPS consisting of at least a physical or simulated part. In particular, the simulated ones can be DTs. We propose a SPSys Development Procedure (SPSysDP) that enables the maximisation of the simulation-physical integrity of SPSys by evaluating the proposed factors. SPSysDP is used to develop a complex robotic system for the INCARE project. In subsequent iterations of SPSysDP, the simulation-physical integrity of the system is maximised. As a result, the system model consists of fewer components, and a greater fraction of the system components are shared between various system setups. We implement and test the system with popular frameworks, Robot Operating System (ROS) and the Gazebo simulator. SPSysML with SPSysDP enables the design of SPSys (including DT and CPS) and multi-setup system development featuring maximised integrity between the simulated and physical parts in its setups.

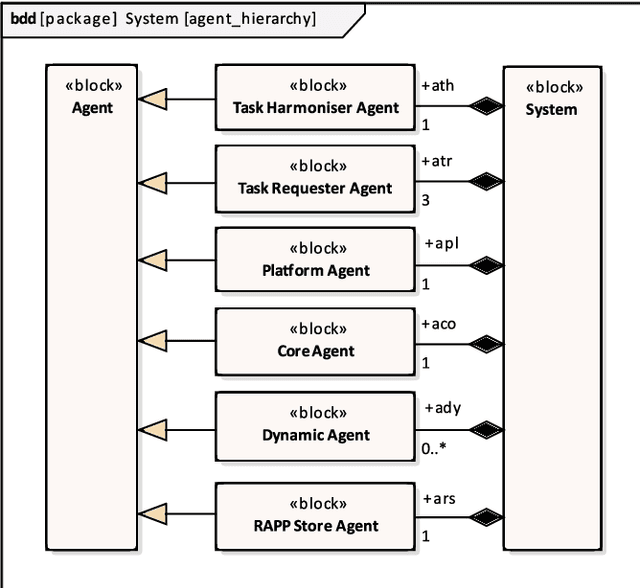

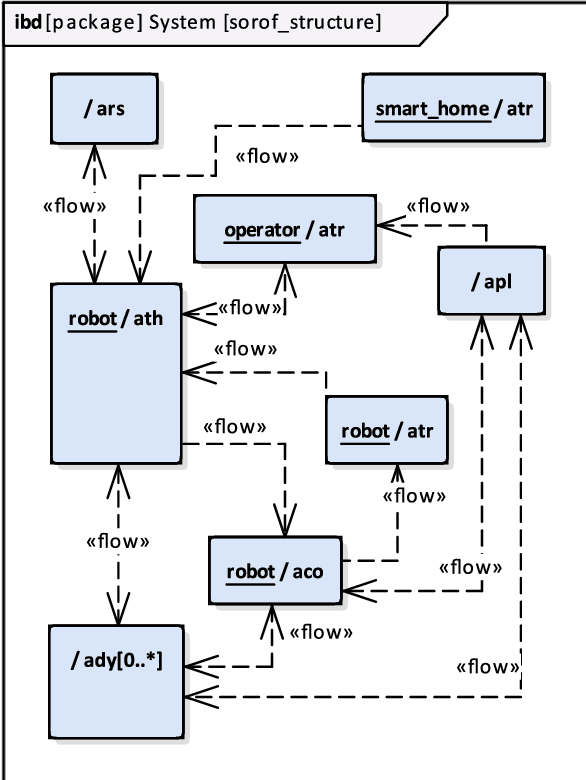

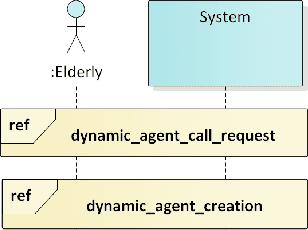

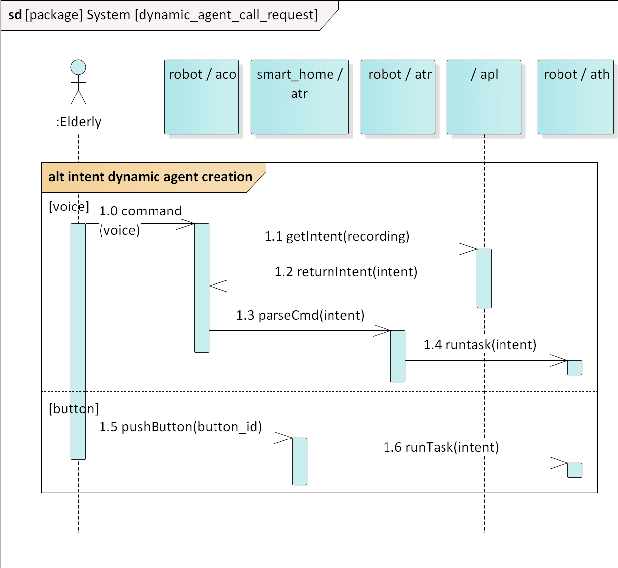

An intent-based approach for creating assistive robots' control systems

May 25, 2020

Abstract: The current research standards in robotics demand general approaches to the development of robot controllers. In the assistive robotics domain, human-machine interaction plays a substantial role. In particular, humans generate intents that affect the robot control system. In the article, an approach is presented for creating control systems for assistive robots, which react to users' intents delivered by voice commands, buttons, or an operator console. The whole approach was applied to a real system consisting of a customised TIAGo robot and additional hardware components. The exemplary experiments performed on the platform illustrate the motivation for diversification of human-machine interfaces in assistive robots.
