Winfried Decking

Deep Learning-Based Autoencoder for Data-Driven Modeling of an RF Photoinjector

Feb 18, 2021

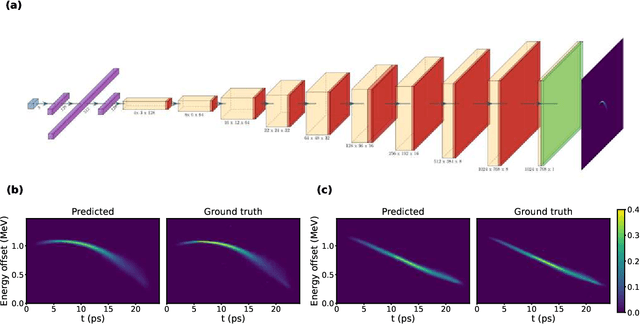

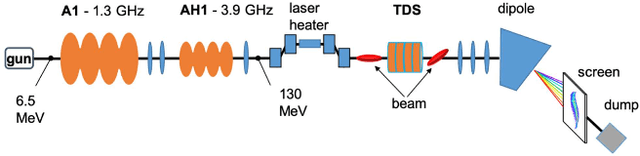

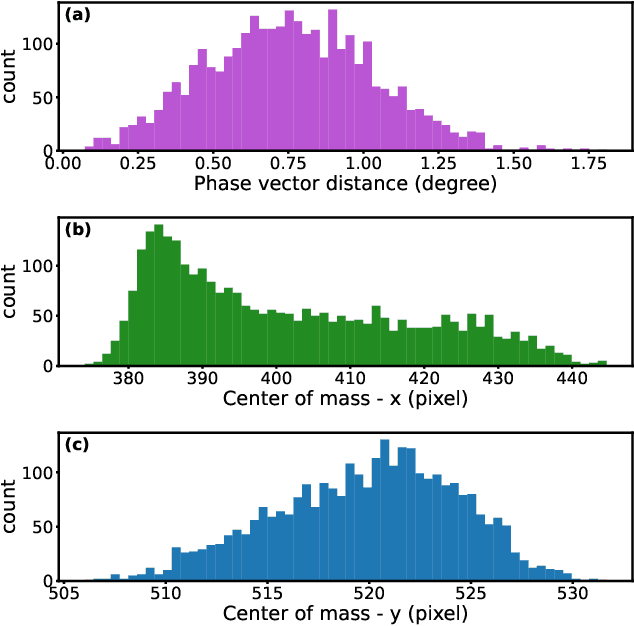

Abstract: We adopt a data-driven approach to model the longitudinal phase-space diagnostic beamline at the European XFEL photoinjector. A deep convolutional neural network (decoder) is used to build a 2D distribution from a small feature space learned by another neural network (encoder). We demonstrate that the autoencoder trained on experimental data can make very high-quality predictions of megapixel images for the longitudinal phase-space measurement. The prediction significantly outperforms existing methods. We also show the explicability of the autoencoder by sharing the same decoder with more than one encoder used for different setups of the photoinjector. This opens the door to a new way of accurately modeling a photoinjector using neural networks. The approach could potentially be extended to the whole accelerator and even the photon beamlines.
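The shared-decoder idea in the abstract can be illustrated with a toy sketch. This is not the authors' network: small dense layers stand in for the deep convolutional decoder, the weights are random and untrained, and every name and size here (`LATENT_DIM`, `IMAGE_SHAPE`, `make_encoder`, the input dimensions 3 and 5) is a hypothetical choice made only for illustration. The point is the wiring: two encoders for two photoinjector setups map different inputs into one feature space, and a single decoder turns either result into a 2D image.

```python
import numpy as np

rng = np.random.default_rng(0)

LATENT_DIM = 8          # hypothetical size of the small feature space
IMAGE_SHAPE = (32, 32)  # toy stand-in for the megapixel images


def dense(n_in, n_out):
    """Return randomly initialised (weights, bias) for one dense layer."""
    return rng.normal(0.0, 0.1, size=(n_in, n_out)), np.zeros(n_out)


def make_encoder(n_inputs):
    """Build a two-layer encoder from setup-specific inputs to the shared latent space."""
    return [dense(n_inputs, 16), dense(16, LATENT_DIM)]


def encode(encoder, x):
    """Map a batch of inputs, shape (batch, n_inputs), to latent features."""
    (w1, b1), (w2, b2) = encoder
    hidden = np.tanh(x @ w1 + b1)
    return hidden @ w2 + b2


# One decoder shared by every encoder (dense layers here, assumed for brevity).
decoder = [dense(LATENT_DIM, 64), dense(64, IMAGE_SHAPE[0] * IMAGE_SHAPE[1])]


def decode(z):
    """Map latent features, shape (batch, LATENT_DIM), to 2D images in (0, 1)."""
    (w1, b1), (w2, b2) = decoder
    hidden = np.tanh(z @ w1 + b1)
    flat = 1.0 / (1.0 + np.exp(-(hidden @ w2 + b2)))  # sigmoid pixel intensities
    return flat.reshape(-1, *IMAGE_SHAPE)


# Two hypothetical setups with different numbers of input parameters.
encoder_a = make_encoder(3)
encoder_b = make_encoder(5)

x_a = rng.normal(size=(4, 3))  # batch of 4 samples for setup A
x_b = rng.normal(size=(4, 5))  # batch of 4 samples for setup B

image_a = decode(encode(encoder_a, x_a))
image_b = decode(encode(encoder_b, x_b))
```

In a trained model the encoders and the decoder would be fitted to measured images; the sketch only shows that both setups pass through the same decoder and produce images of the same shape.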
