Will Sawin

HNHN: Hypergraph Networks with Hyperedge Neurons

Jun 22, 2020

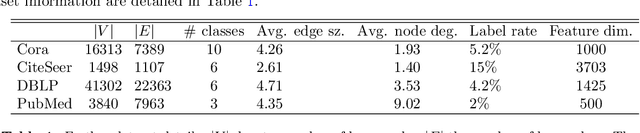

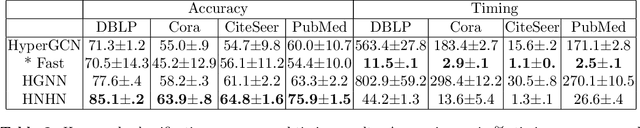

Abstract: Hypergraphs provide a natural representation for many real-world datasets. We propose a novel framework, HNHN, for hypergraph representation learning. HNHN is a hypergraph convolution network with nonlinear activation functions applied to both hypernodes and hyperedges, combined with a normalization scheme that can flexibly adjust the importance of high-cardinality hyperedges and high-degree vertices depending on the dataset. We demonstrate improved performance of HNHN in both classification accuracy and speed on real-world datasets when compared to state-of-the-art methods.
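
As a rough illustration of the idea in this abstract, the sketch below runs one round of two-stage message passing on a hypergraph: node features are aggregated into hyperedge features, a nonlinearity is applied, and the hyperedge features are aggregated back into nodes with a second nonlinearity. This is not the authors' implementation. The function name `hnhn_style_layer`, the exponents `alpha` and `beta`, the choice of ReLU, and the weighted-mean normalization are all assumptions made for illustration; the abstract does not specify them.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def relu(x: float) -> float:
    return x if x > 0.0 else 0.0


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain (rows x k) @ (k x cols) matrix product."""
    return [[sum(row[k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for row in a]


def weighted_mean(rows: Matrix, weights: List[float], dim: int) -> List[float]:
    """Weighted average of feature rows; zeros if there is nothing to average."""
    total = sum(weights)
    if total == 0.0:
        return [0.0] * dim
    return [sum(w * r[j] for w, r in zip(weights, rows)) / total
            for j in range(dim)]


def hnhn_style_layer(incidence: List[List[int]], node_feats: Matrix,
                     w_edge: Matrix, w_node: Matrix,
                     alpha: float = 0.0, beta: float = 0.0) -> Tuple[Matrix, Matrix]:
    """One hypothetical layer: nodes -> hyperedges -> nodes.

    incidence[v][e] is 1 if node v belongs to hyperedge e, else 0.
    Assumption: beta reweights nodes by degree, alpha reweights hyperedges
    by cardinality; negative values down-weight large ones.
    """
    n_nodes, n_edges = len(incidence), len(incidence[0])
    degree = [sum(incidence[v]) for v in range(n_nodes)]
    cardinality = [sum(incidence[v][e] for v in range(n_nodes))
                   for e in range(n_edges)]

    # Stage 1: aggregate node features into each hyperedge, then activate.
    projected = matmul(node_feats, w_edge)
    edge_feats: Matrix = []
    for e in range(n_edges):
        members = [v for v in range(n_nodes) if incidence[v][e]]
        weights = [float(degree[v]) ** beta for v in members]
        agg = weighted_mean([projected[v] for v in members], weights, len(w_edge[0]))
        edge_feats.append([relu(x) for x in agg])

    # Stage 2: aggregate hyperedge features back into each node, then activate.
    projected = matmul(edge_feats, w_node)
    new_node_feats: Matrix = []
    for v in range(n_nodes):
        edges = [e for e in range(n_edges) if incidence[v][e]]
        weights = [float(cardinality[e]) ** alpha for e in edges]
        agg = weighted_mean([projected[e] for e in edges], weights, len(w_node[0]))
        new_node_feats.append([relu(x) for x in agg])

    return new_node_feats, edge_feats
```

With `alpha = beta = 0` every member contributes equally, so each stage reduces to a plain mean followed by the activation. Nonzero exponents shift weight toward or away from high-degree vertices and high-cardinality hyperedges, which is the kind of dataset-dependent adjustment the abstract describes.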

COPT: Coordinated Optimal Transport on Graphs

Mar 09, 2020

Abstract: We introduce COPT, a novel distance metric between graphs defined via an optimization routine that computes a coordinated pair of optimal transport maps simultaneously. This gives an unsupervised way to learn general-purpose graph representations, and it can be used for both graph sketching and graph comparison. COPT involves simultaneously optimizing dual transport plans, one between the vertices of two graphs and another between graph signal probability distributions. We show both theoretically and empirically that our method preserves important global structural information on graphs, in particular spectral information, making it well-suited for tasks on graphs including retrieval, classification, summarization, and visualization.
