Wided Souidene

Hybrid BYOL-ViT: Efficient approach to deal with small datasets

Nov 15, 2021

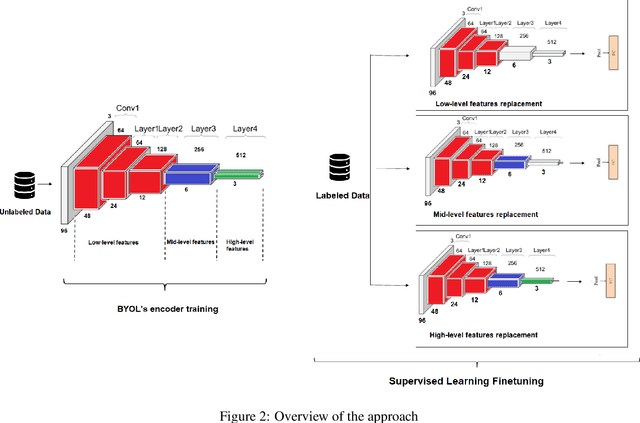

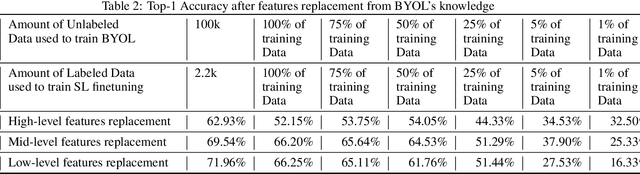

Abstract: Supervised learning can learn large representational spaces, which are crucial for handling difficult learning tasks. However, due to the design of the model, classical image classification approaches struggle to generalize to new problems and new situations when dealing with small datasets. In fact, supervised learning can lose the location of image features, which leads to supervision collapse in very deep architectures. In this paper, we investigate how self-supervision with strong and sufficient augmentation of unlabeled data can effectively train the first layers of a neural network, even better than supervised learning, with no need for millions of labeled samples. The main goal is to disconnect pixel data from annotation by obtaining generic, task-agnostic low-level features. Furthermore, we look into Vision Transformers (ViT) and show that the low-level features derived from a self-supervised architecture can improve the robustness and the overall performance of this emerging architecture. We evaluated our method on one of the smallest open-source datasets, STL-10, and obtained a significant performance boost, from 41.66% to 83.25%, when feeding low-level features from a self-supervised learning architecture to the ViT instead of the raw images.
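For readers unfamiliar with BYOL, the self-supervised method named in the title, the following is a minimal sketch of its two core ingredients as they are generally described: a regression loss between L2-normalized vectors (equal to 2 minus twice their cosine similarity) and an exponential moving average update of the target network. The function names, the plain-list representation of vectors and weights, and the value of `tau` are illustrative assumptions; the abstract does not give the authors' actual configuration.

```python
import math

def byol_loss(online_pred, target_proj):
    """Normalized MSE between two vectors, i.e. 2 - 2 * cosine similarity.

    Both arguments are plain lists of floats (an assumption made for
    illustration; a real model would use tensors).
    """
    dot = sum(p * t for p, t in zip(online_pred, target_proj))
    norm_p = math.sqrt(sum(p * p for p in online_pred))
    norm_t = math.sqrt(sum(t * t for t in target_proj))
    return 2.0 - 2.0 * dot / (norm_p * norm_t)

def ema_update(target_weights, online_weights, tau=0.99):
    """Move each target weight toward the online weight by a factor (1 - tau).

    tau=0.99 is a hypothetical value, not one taken from the paper.
    """
    return [tau * t + (1.0 - tau) * o
            for t, o in zip(target_weights, online_weights)]

# Two views that map to the same direction give zero loss;
# orthogonal directions give a loss of 2.
aligned = byol_loss([1.0, 0.0], [2.0, 0.0])
orthogonal = byol_loss([1.0, 0.0], [0.0, 1.0])
```

The loss needs no labels, only two augmented views of the same image, which is why this family of methods suits small labeled datasets such as STL-10.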

An original framework for Wheat Head Detection using Deep, Semi-supervised and Ensemble Learning within Global Wheat Head Detection (GWHD) Dataset

Sep 24, 2020

Abstract: In this paper, we propose an original object detection methodology applied to the Global Wheat Head Detection (GWHD) Dataset. We explore two major object detection architectures, FasterRCNN and EfficientDet, in order to design a novel and robust wheat head detection model. We emphasize optimizing the performance of our proposed final architectures. Furthermore, we conduct an extensive exploratory data analysis and adapt the best data augmentation techniques to our context. We use semi-supervised learning to boost the previous supervised object detection models. Moreover, we put much effort into ensembling to achieve higher performance. Finally, we use specific post-processing techniques to optimize our wheat head detection results. Our results were submitted to a research challenge launched on the GWHD Dataset, which is led by nine research institutes from seven countries. Our proposed method ranked within the top 6% in this challenge.
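The abstract does not say which semi-supervised scheme was used. One common option for object detection is pseudo-labeling, where confident predictions on unlabeled images are kept as extra training boxes. The sketch below illustrates only that filtering step; the detection dictionary layout, the function name, and the 0.7 threshold are hypothetical and are not taken from the paper.

```python
def select_pseudo_labels(detections, min_score=0.7):
    """Keep detections confident enough to be reused as training labels.

    Each detection is assumed to be a dict with a "box" entry
    (x_min, y_min, x_max, y_max) and a "score" entry in [0, 1].
    min_score=0.7 is an illustrative threshold, not a reported value.
    """
    return [d for d in detections if d["score"] >= min_score]

# Hypothetical predictions from a supervised detector on one unlabeled image.
predictions = [
    {"box": (10, 20, 50, 60), "score": 0.92},
    {"box": (15, 25, 55, 65), "score": 0.40},
    {"box": (200, 80, 240, 130), "score": 0.75},
]
pseudo_labels = select_pseudo_labels(predictions)
```

A higher threshold trades fewer pseudo-labels for cleaner ones, so in practice it is usually tuned on a validation split.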
